Why Use HQ-YTVIS?

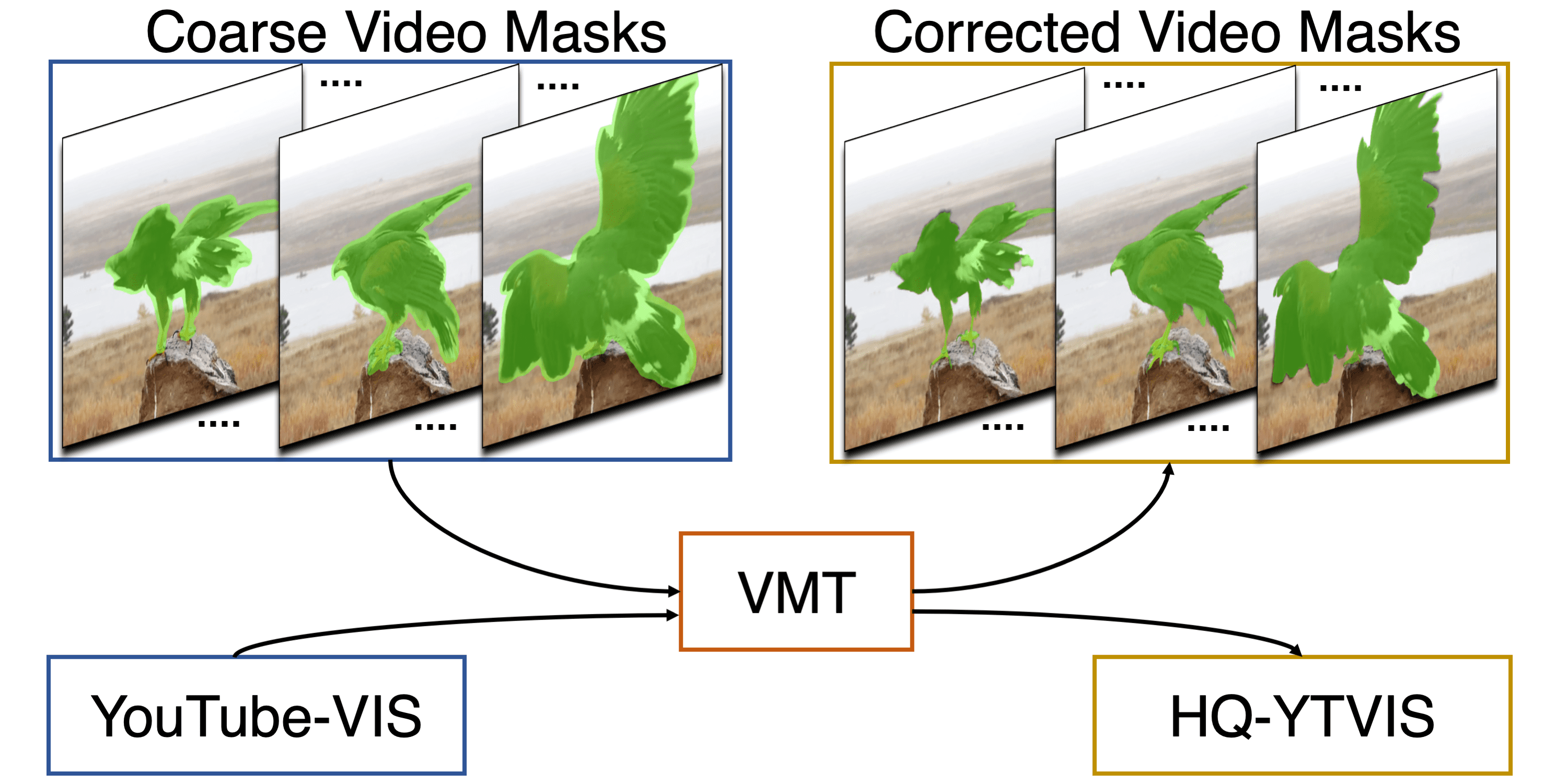

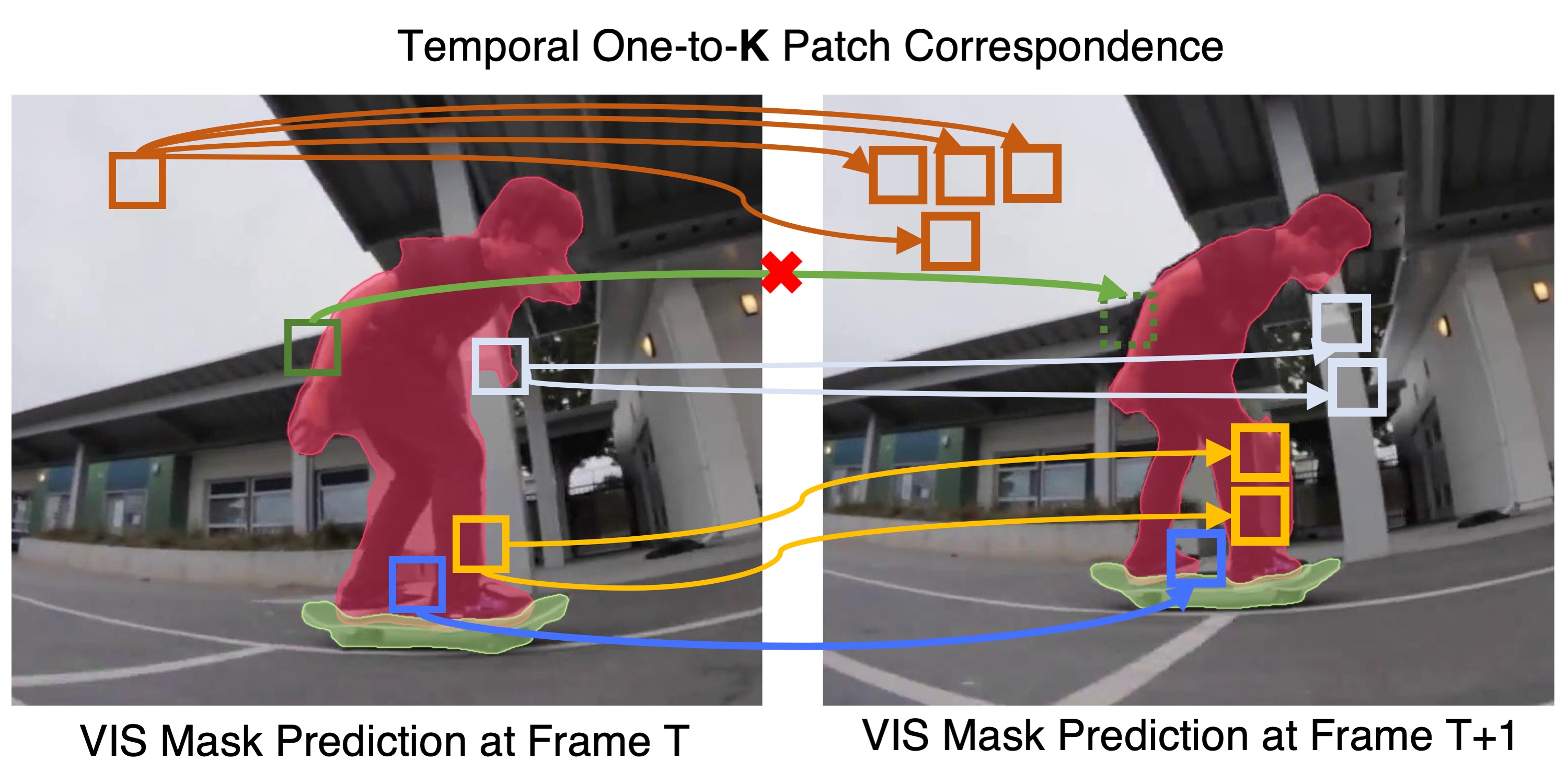

While Video Instance Segmentation (VIS) has seen rapid progress, current approaches struggle to predict high-quality masks with accurate boundary details. We identify the coarse boundary annotations of the popular YouTube-VIS dataset as a major limiting factor.

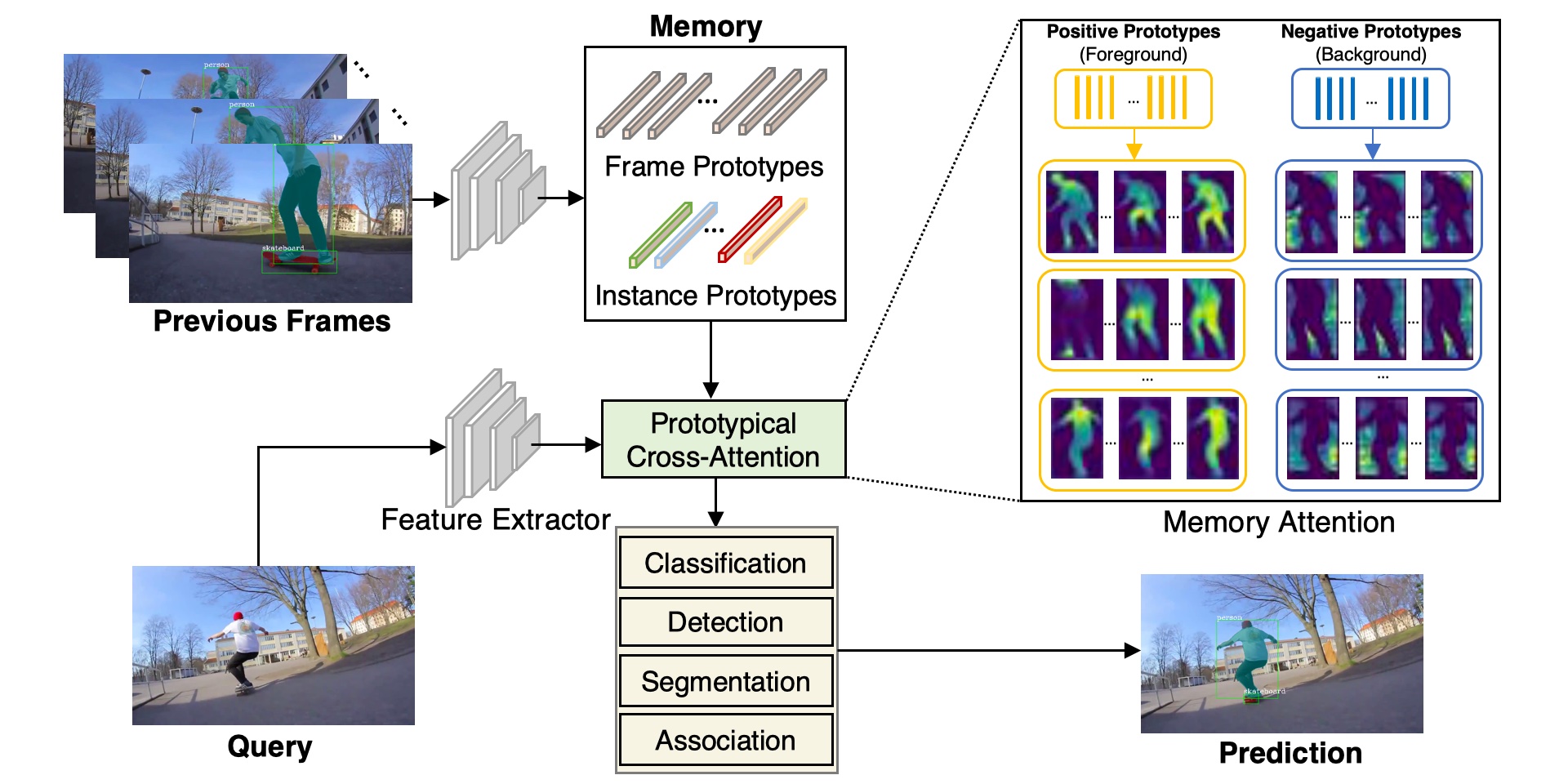

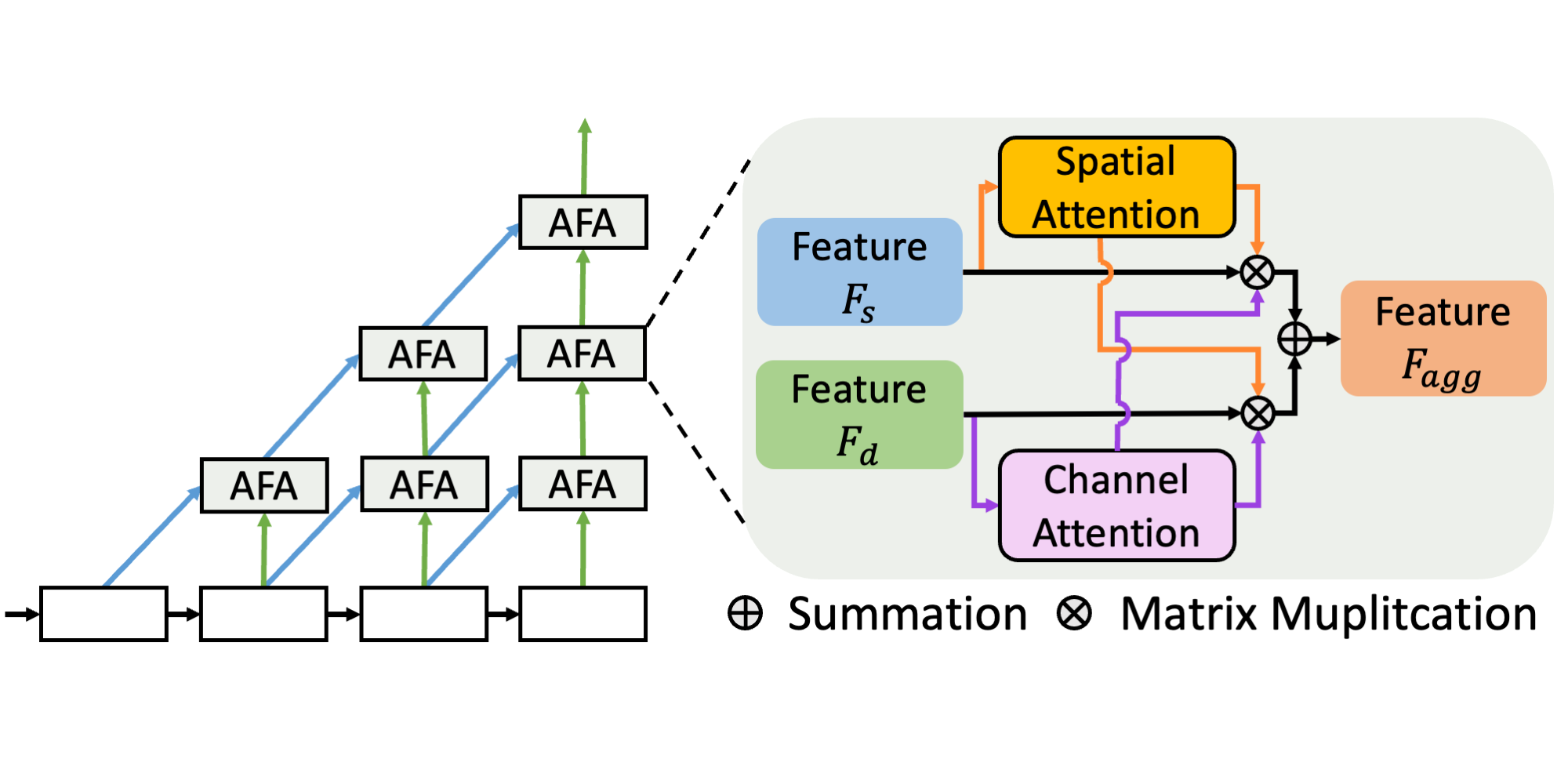

Building on our VMT architecture, we design an automated annotation refinement approach based on iterative training and self-correction. To benchmark high-quality mask prediction for VIS, our ECCV 2022 paper introduces the HQ-YTVIS dataset together with the Tube-Boundary AP metric. HQ-YTVIS consists of manually re-annotated validation and test sets and our automatically refined training data. It provides training, validation, and test splits to facilitate the future development of VIS methods that target higher mask quality.

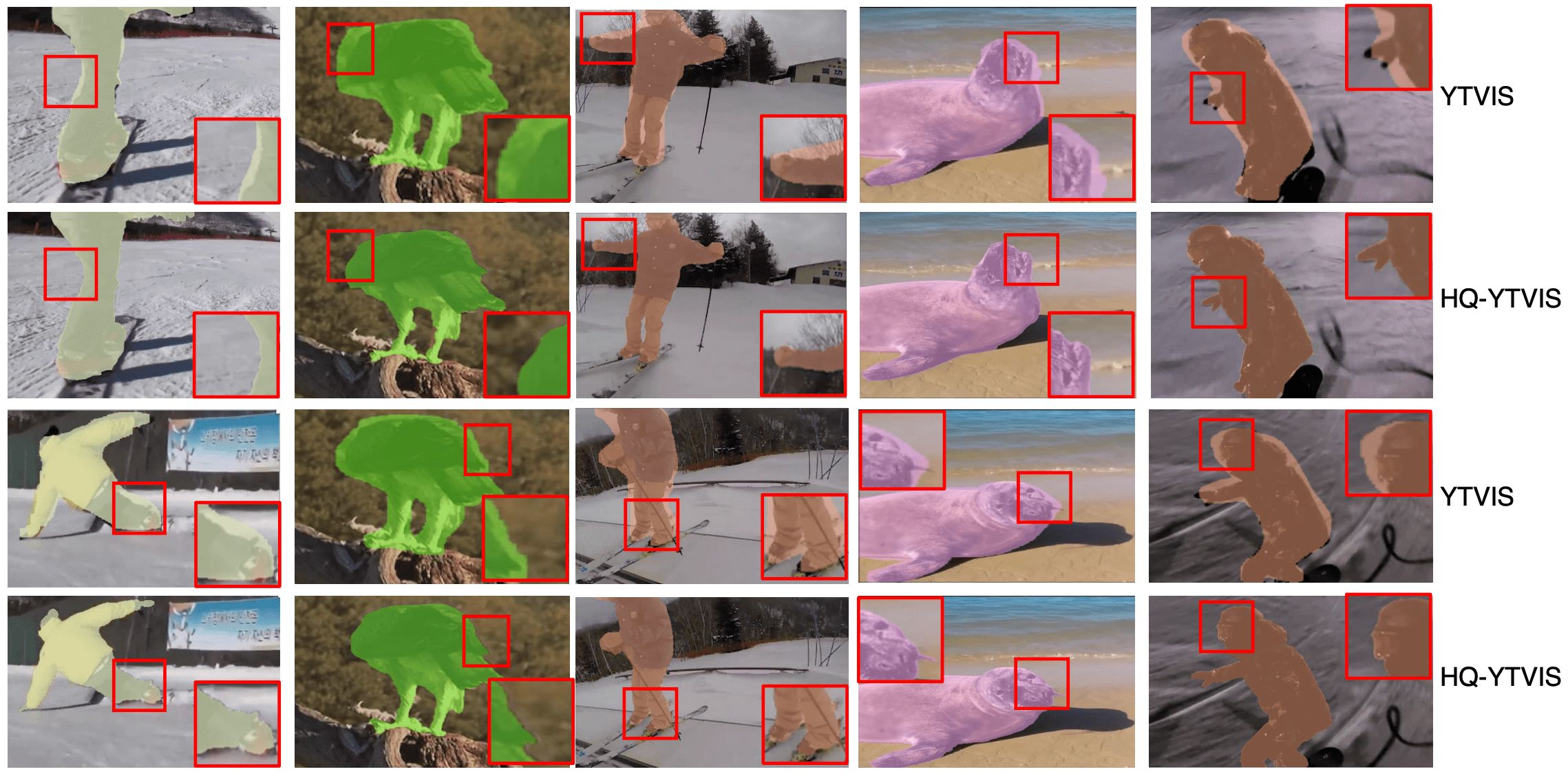

Annotation Comparison

For easier comparison, we highlight the instance mask of only one object per video.

Dataset Description

For HQ-YTVIS, we split the original YTVIS training set (2238 videos) into new train (1678 videos, 75%), val (280 videos, 12.5%), and test (280 videos, 12.5%) sets, following the split ratios of YTVIS. The mask annotations of the HQ-YTVIS train subset are self-corrected by VMT through iterative training, while the smaller val and test sets are carefully checked and relabeled by human annotators to ensure high mask boundary quality.
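The split sizes follow directly from the stated ratios: 12.5% of 2238 is 279.75, which rounds to 280 for both val and test, leaving 2238 − 560 = 1678 videos for train. The sketch below reproduces that arithmetic. The `random_split` helper is hypothetical and only illustrates a seeded 75/12.5/12.5 partition; it does NOT recreate the official HQ-YTVIS split, which should be taken from the released annotation files.

```python
import random


def split_sizes(n_videos, val_frac=0.125, test_frac=0.125):
    """Return (train, val, test) counts: val/test are rounded, train takes the remainder."""
    n_val = round(n_videos * val_frac)
    n_test = round(n_videos * test_frac)
    n_train = n_videos - n_val - n_test
    return n_train, n_val, n_test


def random_split(video_ids, seed=0):
    """Hypothetical seeded split for illustration only; NOT the official HQ-YTVIS split."""
    ids = list(video_ids)
    random.Random(seed).shuffle(ids)
    n_train, n_val, _ = split_sizes(len(ids))
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]


print(split_sizes(2238))  # (1678, 280, 280)
```

Giving the rounding remainder to the train set keeps val and test the same size, which matches the 280/280 counts above.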

Tube-Mask AP vs. Tube-Boundary AP

We use both the standard Tube-Mask AP from YouTube-VIS and our proposed Tube-Boundary AP as evaluation metrics. Tube-Boundary AP not only focuses on the boundary quality of the objects, but also considers the spatio-temporal consistency between the predicted and ground-truth object masks.
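To make the difference between the two metrics concrete, here is a minimal sketch of the per-tube IoU that such AP metrics threshold on. It assumes that Tube-Boundary IoU follows the Boundary IoU recipe (keep only the mask pixels within `d` pixels of the contour, obtained by repeated 3x3 erosion) and accumulates intersections and unions over all frames, like the YouTube-VIS tube mask IoU. The function names, the set-of-pixels mask representation, and the fixed pixel width `d` are assumptions for illustration; the official evaluation code (including how `d` is chosen and how AP is computed from IoU) lives in the vmt repository.

```python
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (row, col) of a foreground pixel


def erode(mask: Set[Pixel]) -> Set[Pixel]:
    """One step of 3x3 binary erosion; pixels outside the mask (or the image) count as background."""
    return {
        (r, c)
        for (r, c) in mask
        if all((r + dr, c + dc) in mask for dr in (-1, 0, 1) for dc in (-1, 0, 1))
    }


def boundary_region(mask: Set[Pixel], d: int) -> Set[Pixel]:
    """Mask pixels removed by d erosion steps, i.e. the inner band of width d along the contour."""
    inner = mask
    for _ in range(d):
        inner = erode(inner)
    return mask - inner


def tube_mask_iou(pred: List[Set[Pixel]], gt: List[Set[Pixel]]) -> float:
    """YouTube-VIS style tube IoU: per-frame intersections and unions summed over the video."""
    assert len(pred) == len(gt), "both tubes must cover the same frames"
    inter = sum(len(p & g) for p, g in zip(pred, gt))
    union = sum(len(p | g) for p, g in zip(pred, gt))
    return inter / union if union else 0.0  # 0.0 if both tubes are empty


def tube_boundary_iou(pred: List[Set[Pixel]], gt: List[Set[Pixel]], d: int = 1) -> float:
    """Assumed Tube-Boundary IoU: the same accumulation, restricted to boundary bands."""
    assert len(pred) == len(gt), "both tubes must cover the same frames"
    inter = union = 0
    for p, g in zip(pred, gt):
        pb, gb = boundary_region(p, d), boundary_region(g, d)
        inter += len(pb & gb)
        union += len(pb | gb)
    return inter / union if union else 0.0  # 0.0 if both tubes have no boundary pixels


def square(r0: int, c0: int, n: int) -> Set[Pixel]:
    """Helper: an n x n square mask with its top-left corner at (r0, c0)."""
    return {(r, c) for r in range(r0, r0 + n) for c in range(c0, c0 + n)}
```

For example, a single-frame prediction that is one pixel too large (`square(0, 0, 5)` against a ground truth of `square(0, 0, 4)`) still has a mask IoU of 16/25 = 0.64, but its boundary IoU with `d=1` drops to 7/21 = 1/3. Small contour errors are barely visible in mask IoU but dominate the boundary-based score.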

Dataset Download

Paper

Please refer to our paper for more details of the HQ-YTVIS dataset.

Lei Ke, Henghui Ding, Martin Danelljan, Yu-Wing Tai, Chi-Keung Tang, Fisher Yu. Video Mask Transfiner for High-Quality Video Instance Segmentation. ECCV 2022.

Manual Re-labeling of Val/Test Sets

Code

Code for data loading and Tube-Boundary AP evaluation is available at:

github.com/SysCV/vmt
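The vmt repository is the reference for loading the data. As a rough orientation only, the sketch below assumes that the HQ-YTVIS annotation files reuse the YouTube-VIS JSON layout: a top-level `annotations` list with one entry per object track, each carrying a `video_id` and a per-frame `segmentations` list whose entries are RLE dicts, or `None` on frames where the object is absent. The helper names are hypothetical. The decoder handles only COCO-style *uncompressed* RLE (`counts` as a list of run lengths). Compressed string RLE should be decoded with `pycocotools.mask.decode` instead.

```python
import json
from collections import defaultdict
from typing import Dict, List


def load_ytvis(path: str) -> dict:
    """Read a YouTube-VIS style annotation file (assumed layout, see lead-in)."""
    with open(path) as f:
        return json.load(f)


def tracks_by_video(ann: dict) -> Dict[int, List[dict]]:
    """Group object tracks (one annotation entry per object per video) by video id."""
    groups = defaultdict(list)
    for track in ann["annotations"]:
        groups[track["video_id"]].append(track)
    return dict(groups)


def decode_uncompressed_rle(rle: dict) -> List[List[int]]:
    """Decode COCO-style uncompressed RLE into an H x W list of 0/1 values.

    Run lengths alternate between background (0) and foreground (1), starting
    with background, and cover the image in column-major order.
    """
    h, w = rle["size"]
    flat: List[int] = []
    value = 0
    for run in rle["counts"]:
        flat.extend([value] * run)
        value = 1 - value
    if len(flat) != h * w:
        raise ValueError("RLE run lengths do not match the mask size")
    # Column-major layout: flat index = col * h + row.
    return [[flat[c * h + r] for c in range(w)] for r in range(h)]
```

Typical use would be to call `tracks_by_video(load_ytvis(path))` and then decode each non-`None` entry of a track's `segmentations` to obtain its per-frame masks.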

License

HQ-YTVIS labels are provided under the CC BY-SA 4.0 License. Use of the videos is subject to the YouTube-VOS terms.

Citation

@inproceedings{vmt,
  title = {Video Mask Transfiner for High-Quality Video Instance Segmentation},
  author = {Ke, Lei and Ding, Henghui and Danelljan, Martin and Tai, Yu-Wing and Tang, Chi-Keung and Yu, Fisher},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2022}
}

Contact

For questions and suggestions, please contact Lei Ke (keleiwhu at gmail.com).