Abstract

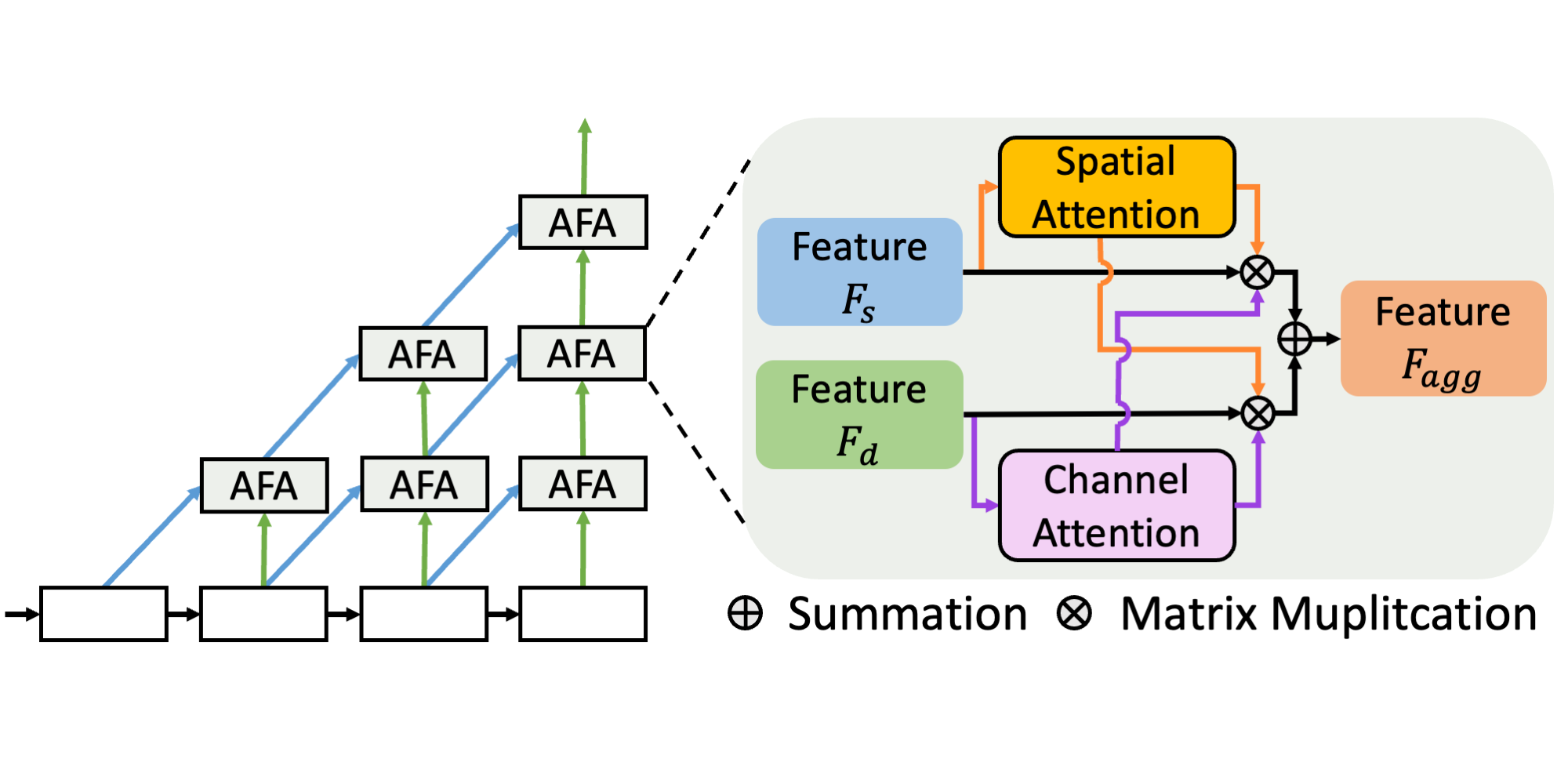

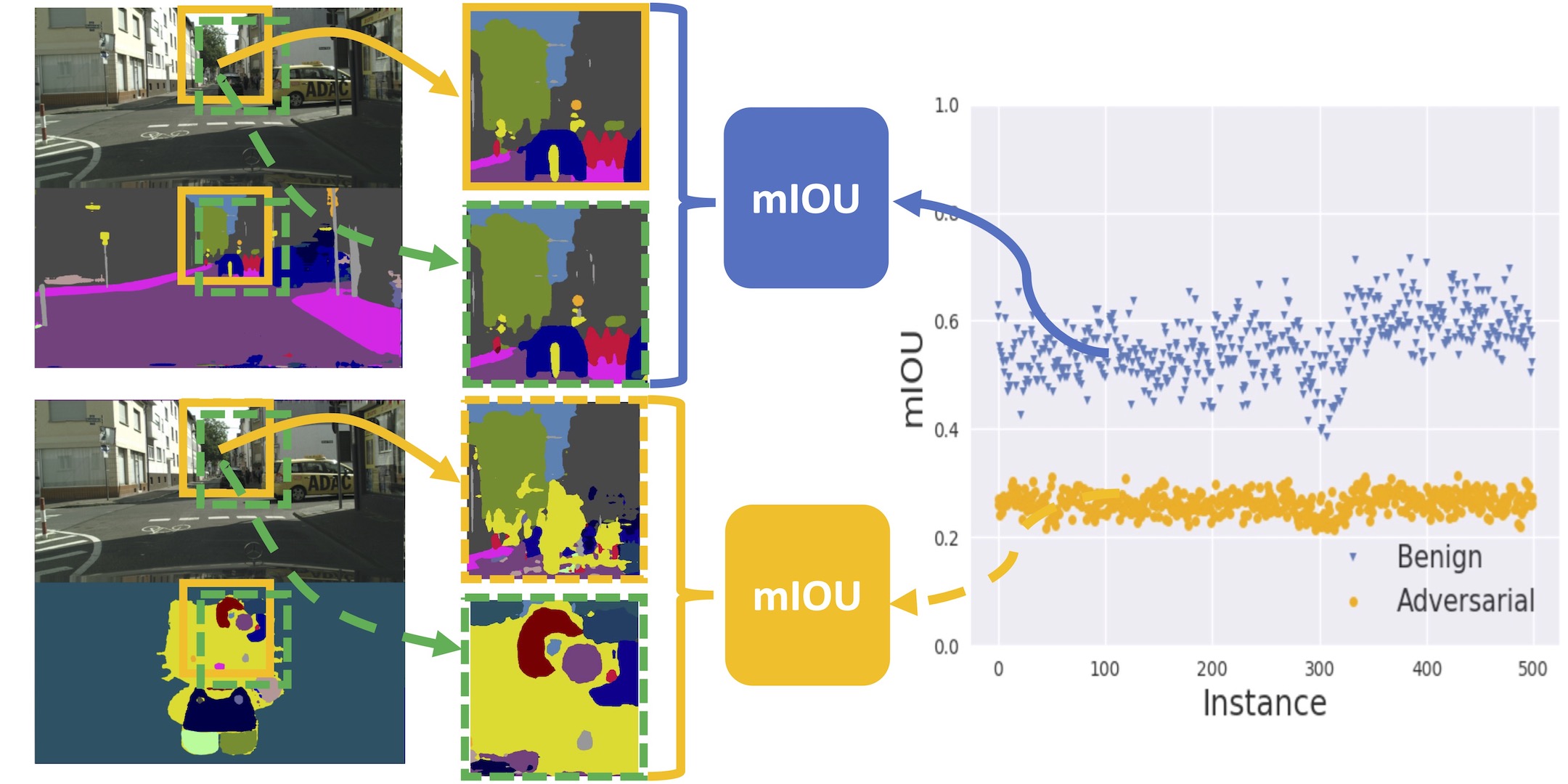

Aggregating information from features across different layers is essential for dense prediction models. Despite its limited expressiveness, vanilla feature concatenation dominates the choice of aggregation operations. In this paper, we introduce Attentive Feature Aggregation (AFA) to fuse different network layers with more expressive non-linear operations. AFA exploits both spatial and channel attention to compute weighted averages of the layer activations. Inspired by neural volume rendering, we further extend AFA with Scale-Space Rendering (SSR) to perform a late fusion of multi-scale predictions. AFA is applicable to a wide range of existing network designs. Our experiments show consistent and significant improvements on challenging semantic segmentation benchmarks, including Cityscapes and BDD100K, at negligible computational and parameter overhead. In particular, AFA improves the performance of the Deep Layer Aggregation (DLA) model by nearly 6% mIoU on Cityscapes. Our experimental analyses show that AFA learns to progressively refine segmentation maps and improve boundary details, leading to new state-of-the-art results on the NYUDv2 and BSDS500 boundary detection benchmarks.
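To make the two ideas in the abstract concrete, here is a minimal pure-Python sketch; it is not the authors' implementation (see github.com/SysCV/dla-afa for that). `attentive_fuse` replaces concatenation with a per-element weighted average of two layers, where the weight is a spatial gate times a channel gate. `scale_space_render` fuses per-scale predictions with volume-rendering-style compositing: each scale gets an opacity from a density, and its weight is that opacity times the transmittance left over from earlier scales. The function names, the sigmoid-of-mean gates, the per-scale densities, and the rule that the last scale absorbs the remaining transmittance are all hypothetical simplifications; a real model would learn the gates and densities with convolutions.

```python
import math
from typing import List

# A feature map is stored as [channel][pixel], with spatial dims flattened.
FeatureMap = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def attentive_fuse(shallow: FeatureMap, deep: FeatureMap) -> FeatureMap:
    """Fuse two layers via a weighted average (hypothetical AFA-style sketch).

    Spatial gate: sigmoid of the per-pixel channel mean of `shallow`.
    Channel gate: sigmoid of the per-channel spatial mean of `deep`.
    Both gates are illustrative stand-ins for learned attention modules.
    """
    num_c, num_p = len(shallow), len(shallow[0])
    # Spatial attention: one weight per pixel, shared across channels.
    a_s = [sigmoid(sum(shallow[c][p] for c in range(num_c)) / num_c)
           for p in range(num_p)]
    # Channel attention: one weight per channel, shared across pixels.
    a_c = [sigmoid(sum(deep[c]) / num_p) for c in range(num_c)]
    fused = []
    for c in range(num_c):
        row = []
        for p in range(num_p):
            a = a_s[p] * a_c[c]  # combined weight, strictly inside (0, 1)
            # Convex combination of the two activations instead of concatenation.
            row.append(a * shallow[c][p] + (1.0 - a) * deep[c][p])
        fused.append(row)
    return fused


def scale_space_render(preds: List[List[float]],
                       densities: List[float]) -> List[float]:
    """Volume-rendering-style late fusion of per-scale scores for one pixel.

    preds[i] is the class-score vector at scale i; densities[i] >= 0 is a
    hypothetical per-scale density. Weight_i = T_i * alpha_i, where
    T_i = prod_{j<i} (1 - alpha_j). The last scale takes alpha = 1 so the
    weights sum to one.
    """
    weights = []
    transmittance = 1.0
    for i, sigma in enumerate(densities):
        alpha = 1.0 if i == len(densities) - 1 else 1.0 - math.exp(-sigma)
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    num_classes = len(preds[0])
    return [sum(w * p[k] for w, p in zip(weights, preds))
            for k in range(num_classes)]
```

For example, `scale_space_render([[1.0, 0.0], [0.0, 1.0]], [math.log(2.0), 0.0])` gives the first scale opacity 0.5 and hands the remaining 0.5 to the last scale, so the result is approximately `[0.5, 0.5]`. Because both functions produce convex combinations, the fused values always lie between the inputs, which is the key contrast with concatenation: the output keeps the input's channel count rather than growing it.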

Video Overview

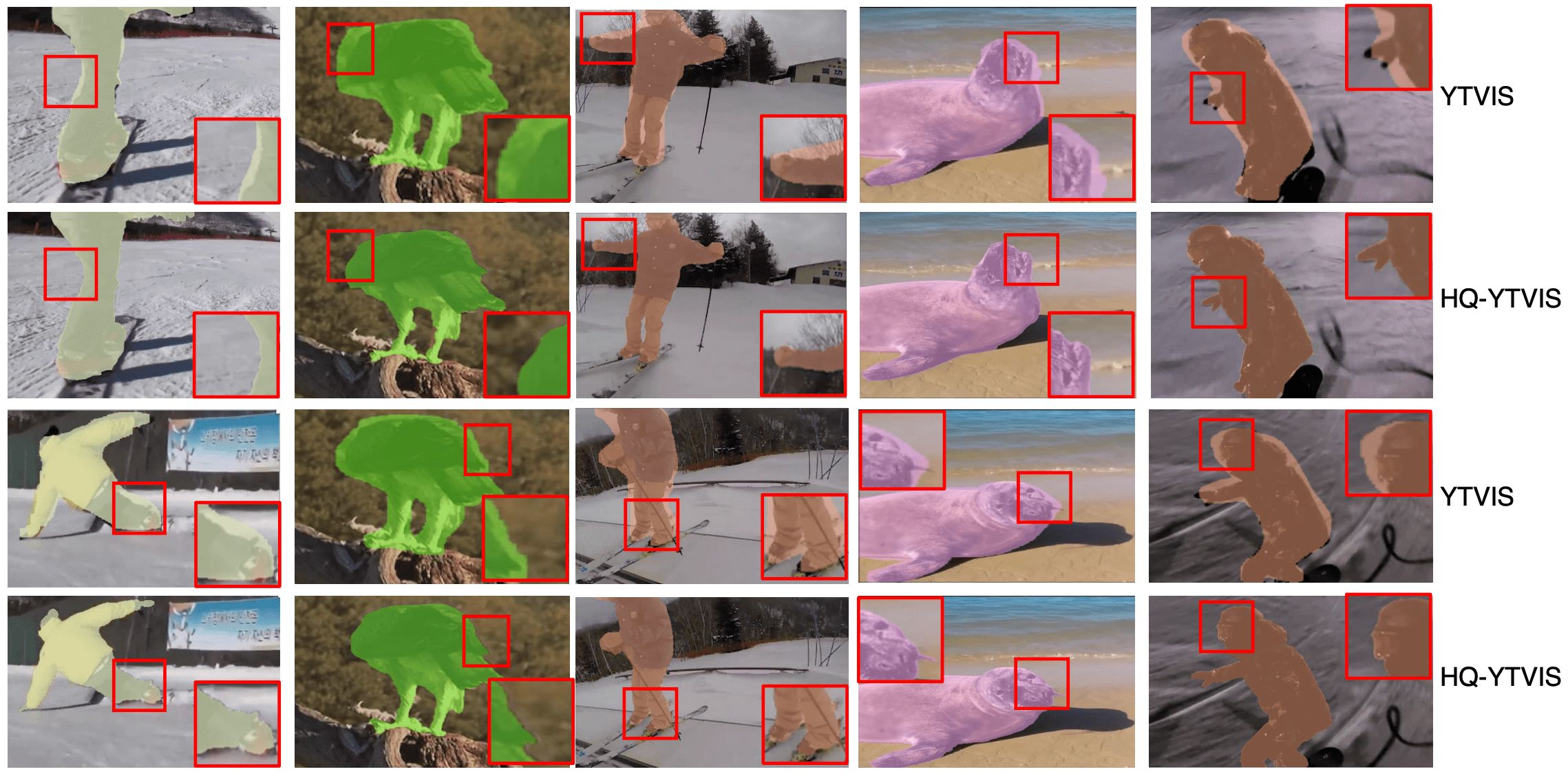

Qualitative Results

We show semantic segmentation and boundary prediction results on videos from Cityscapes, BDD100K, and NYUDv2. Predictions are made on each frame independently, without using temporal context.

Cityscapes

Examples of running AFA-DLA-X-102 on Cityscapes for semantic segmentation.

BDD100K

Examples of running AFA-DLA-X-169 on BDD100K for semantic segmentation.

NYUDv2

Examples of running AFA-DLA-34 on NYUDv2 for boundary detection.

Paper

Yung-Hsu Yang, Thomas E. Huang, Min Sun, Samuel Rota Bulò, Peter Kontschieder, Fisher Yu
Dense Prediction with Attentive Feature Aggregation
WACV 2023

Code

github.com/SysCV/dla-afa

Citation

@inproceedings{yang2023dense,
  title={Dense prediction with attentive feature aggregation},
  author={Yang, Yung-Hsu and Huang, Thomas E and Sun, Min and Bul{\`o}, Samuel Rota and Kontschieder, Peter and Yu, Fisher},
  booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},
  pages={97--106},
  year={2023}
}