Abstract

Visual recognition requires rich representations that span levels from low to high, scales from small to large, and resolutions from fine to coarse. Even with the depth of features in a convolutional network, a layer in isolation is not enough: compounding and aggregating these representations improves inference of what and where. Architectural efforts are exploring many dimensions for network backbones, designing deeper or wider architectures, but how to best aggregate layers and blocks across a network deserves further attention. Although skip connections have been incorporated to combine layers, these connections have been “shallow” themselves, and only fuse by simple, one-step operations. We augment standard architectures with deeper aggregation to better fuse information across layers. Our deep layer aggregation structures iteratively and hierarchically merge the feature hierarchy to make networks with better accuracy and fewer parameters. Experiments across architectures and tasks show that deep layer aggregation improves recognition and resolution compared to existing branching and merging schemes.

Poster

Click here to open high-res pdf poster.

Click here to open high-res pdf poster.

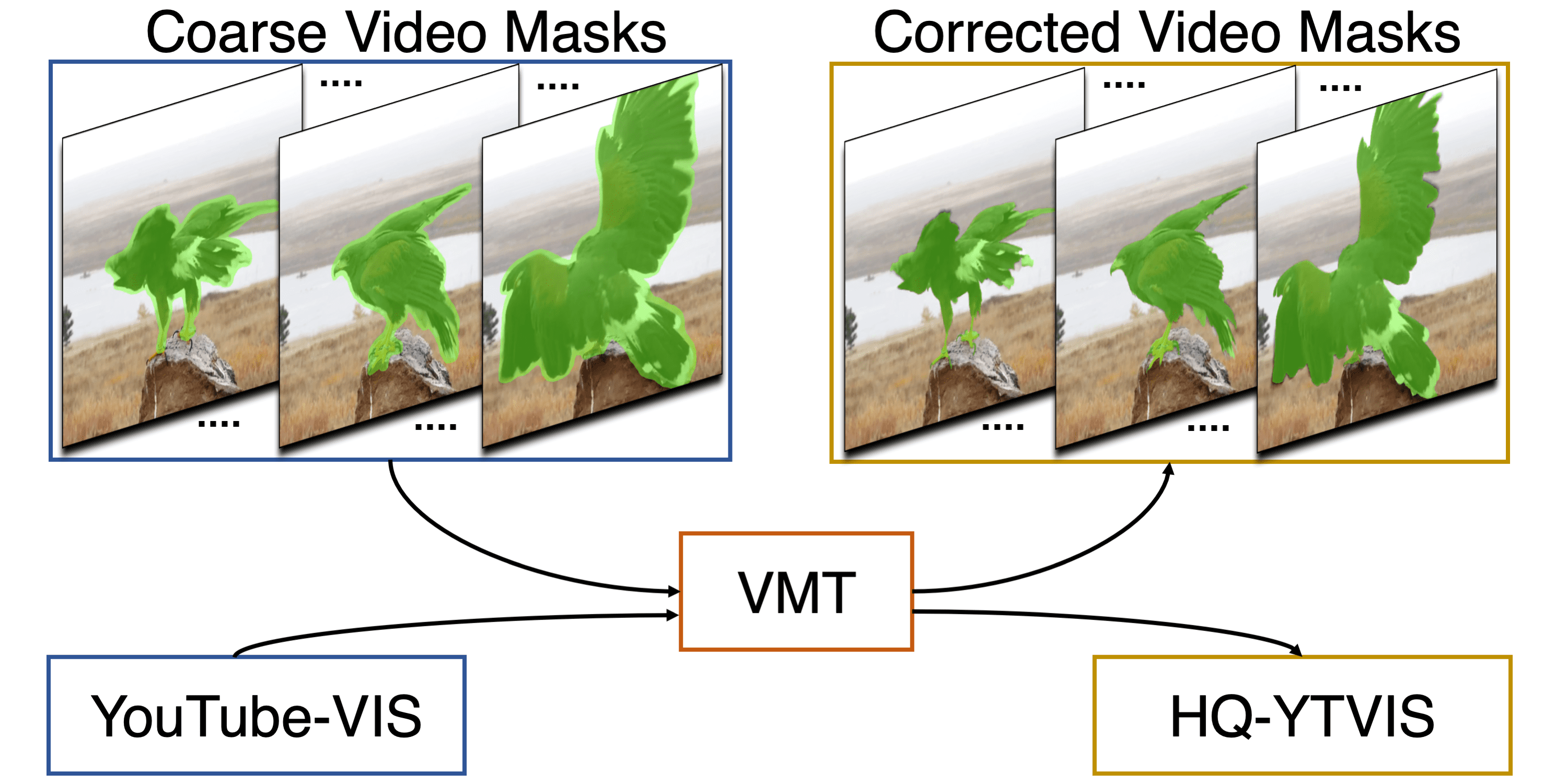

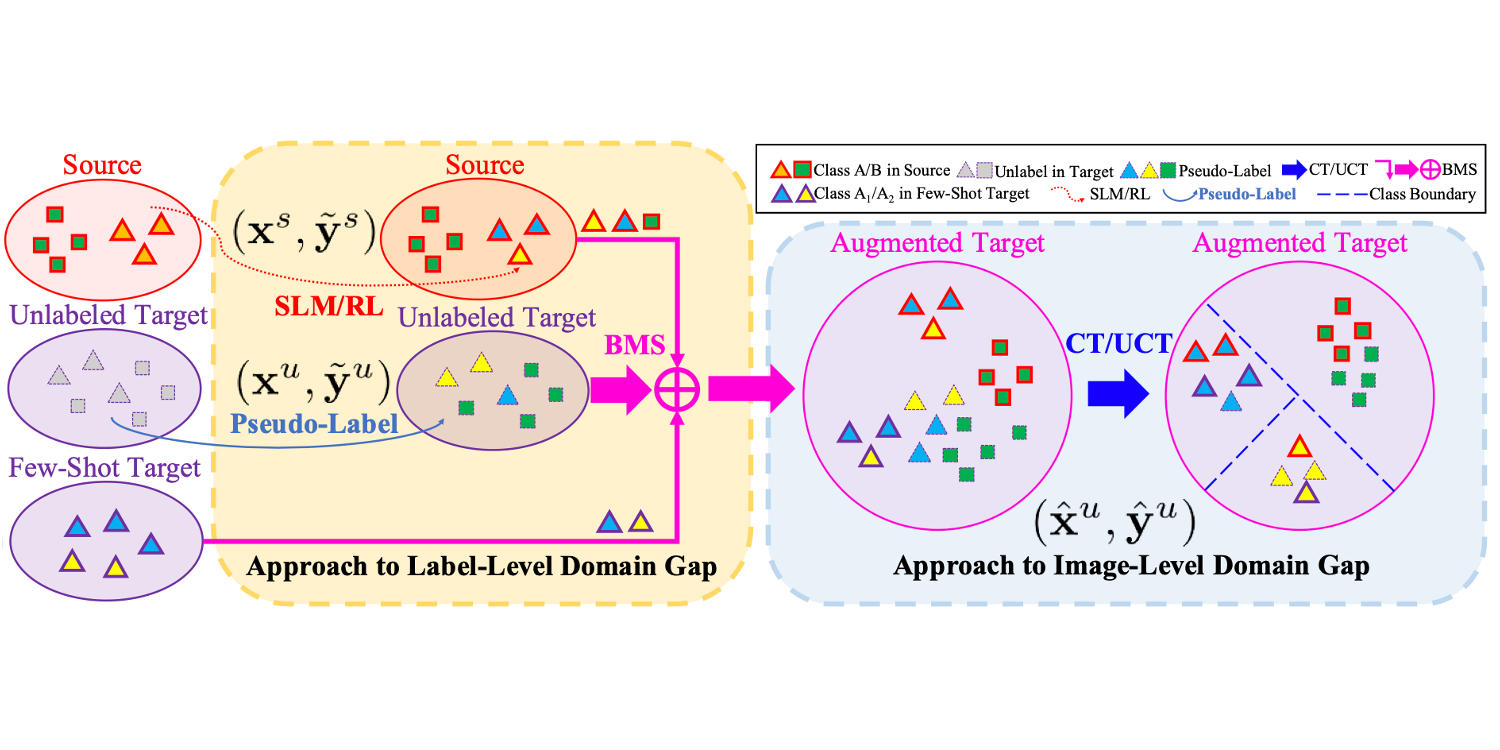

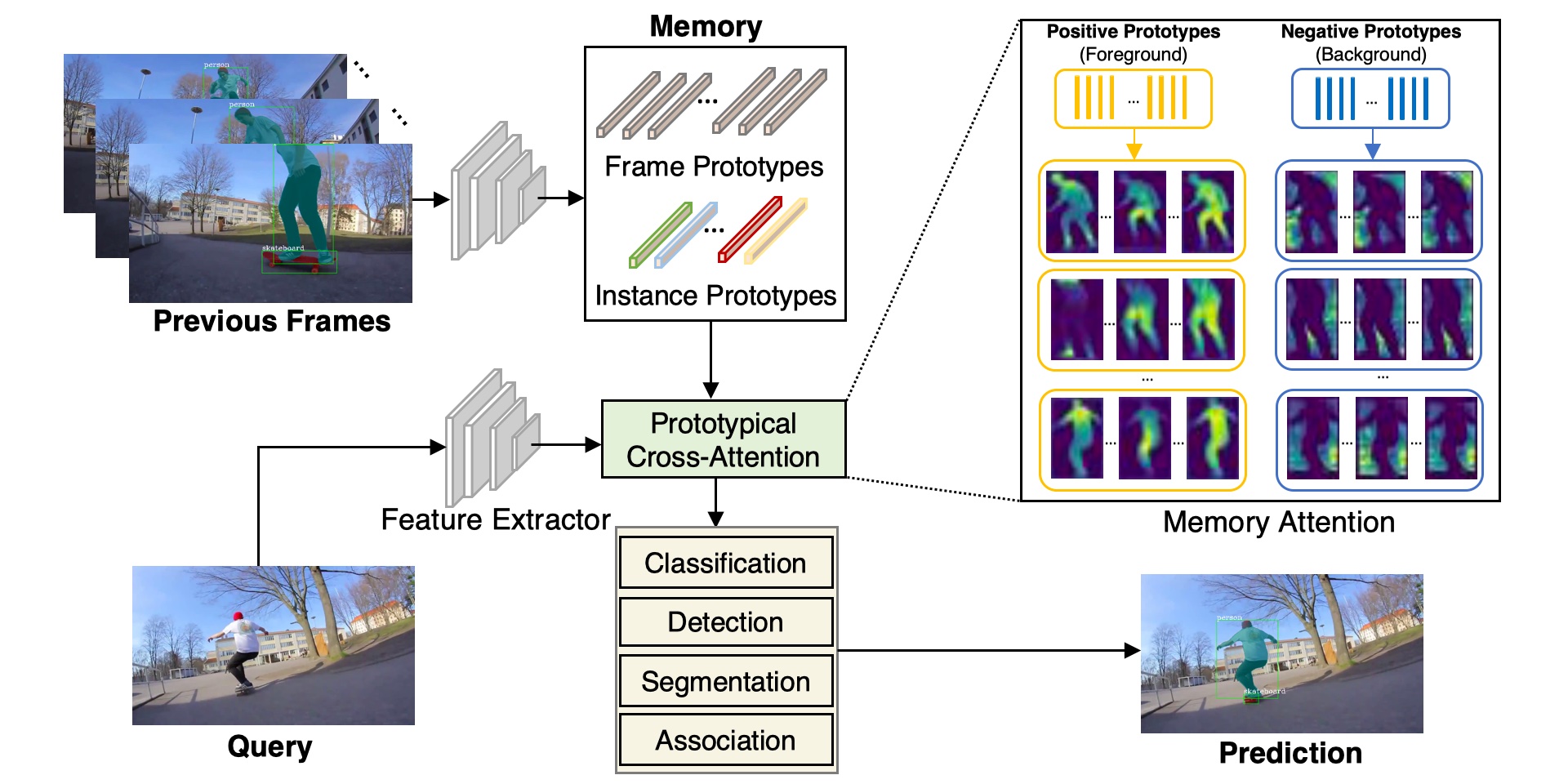

Method

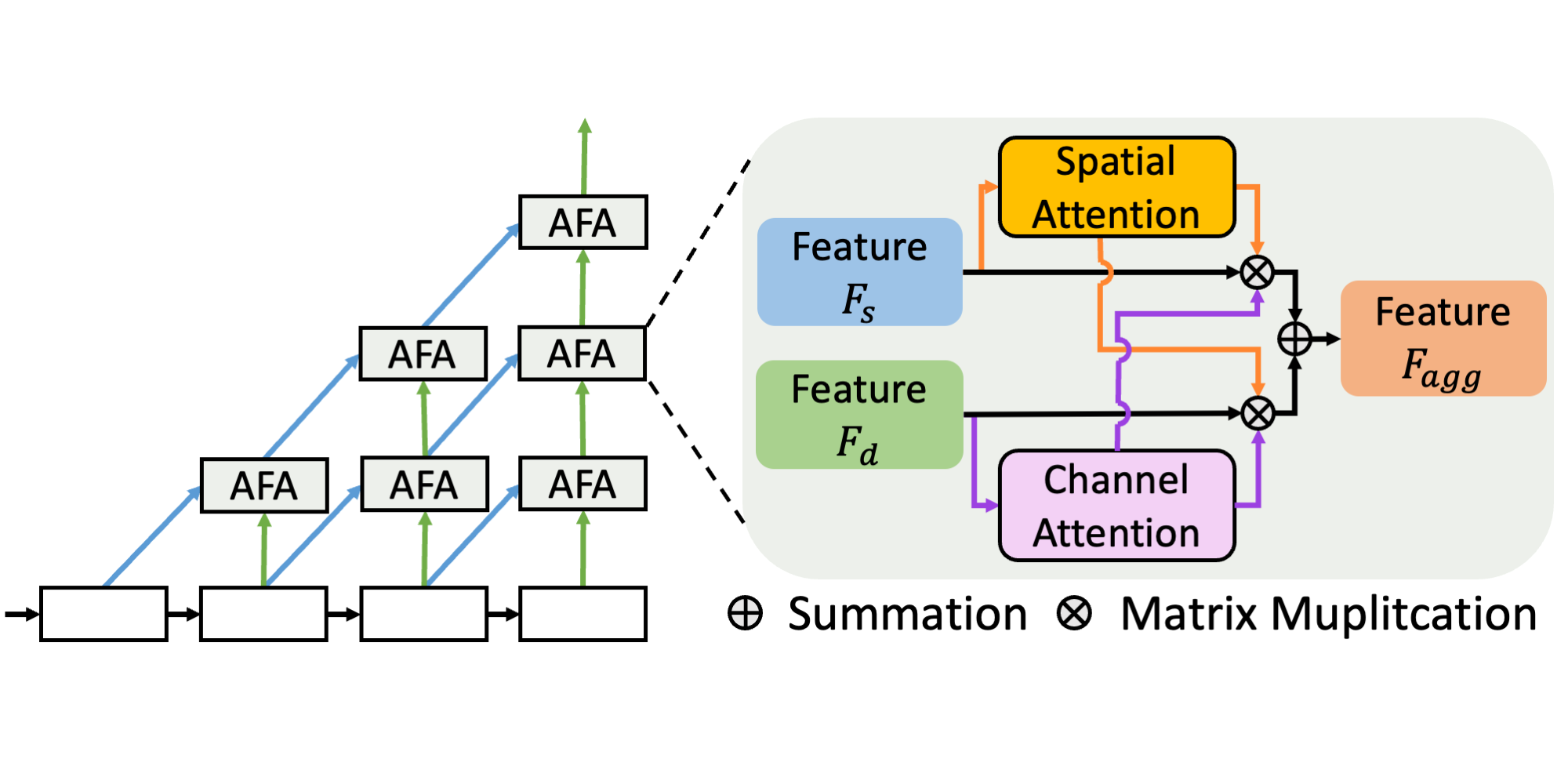

We propose schemes of deep layer aggregation in contrast to shallow aggregation.

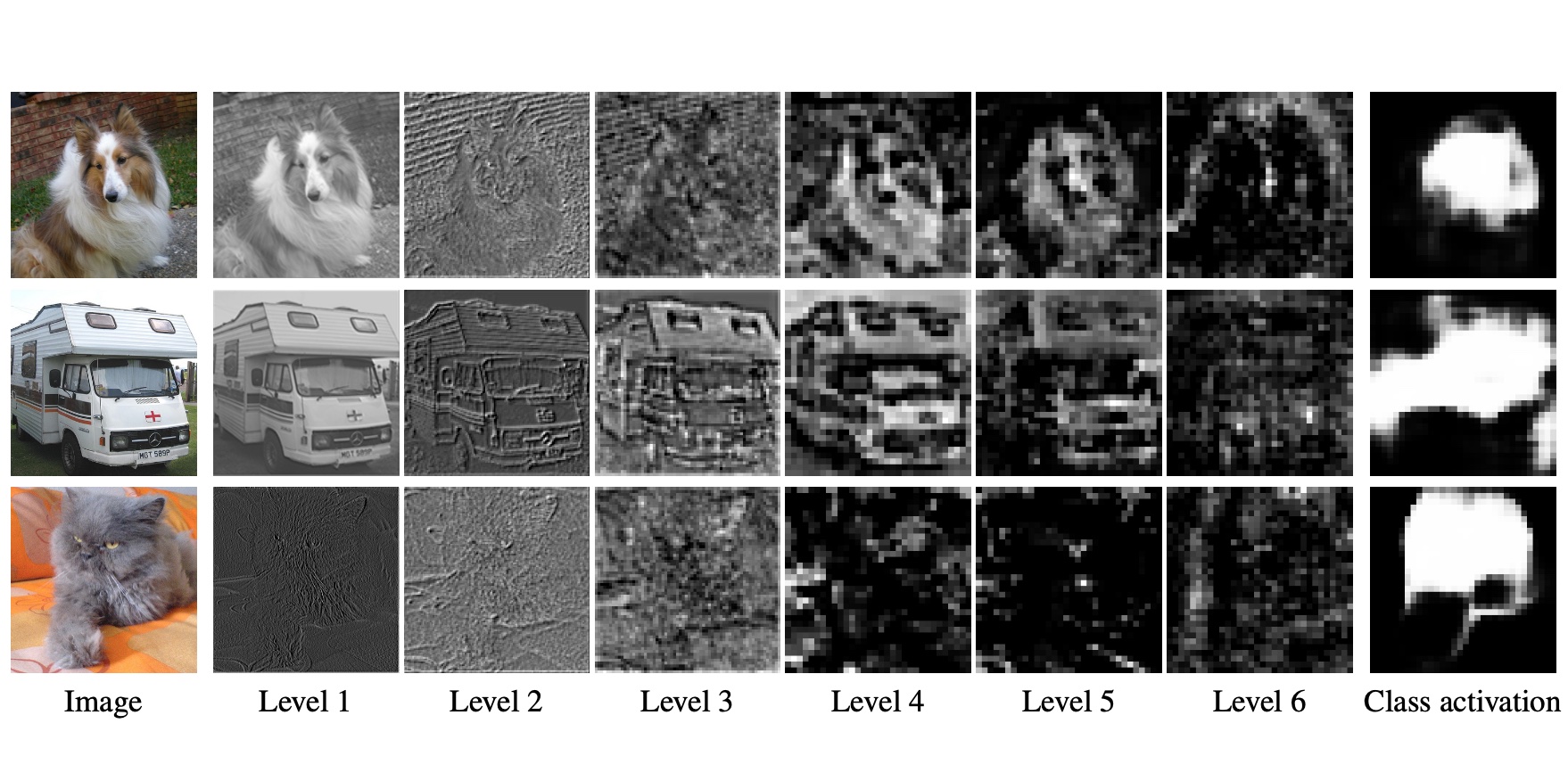

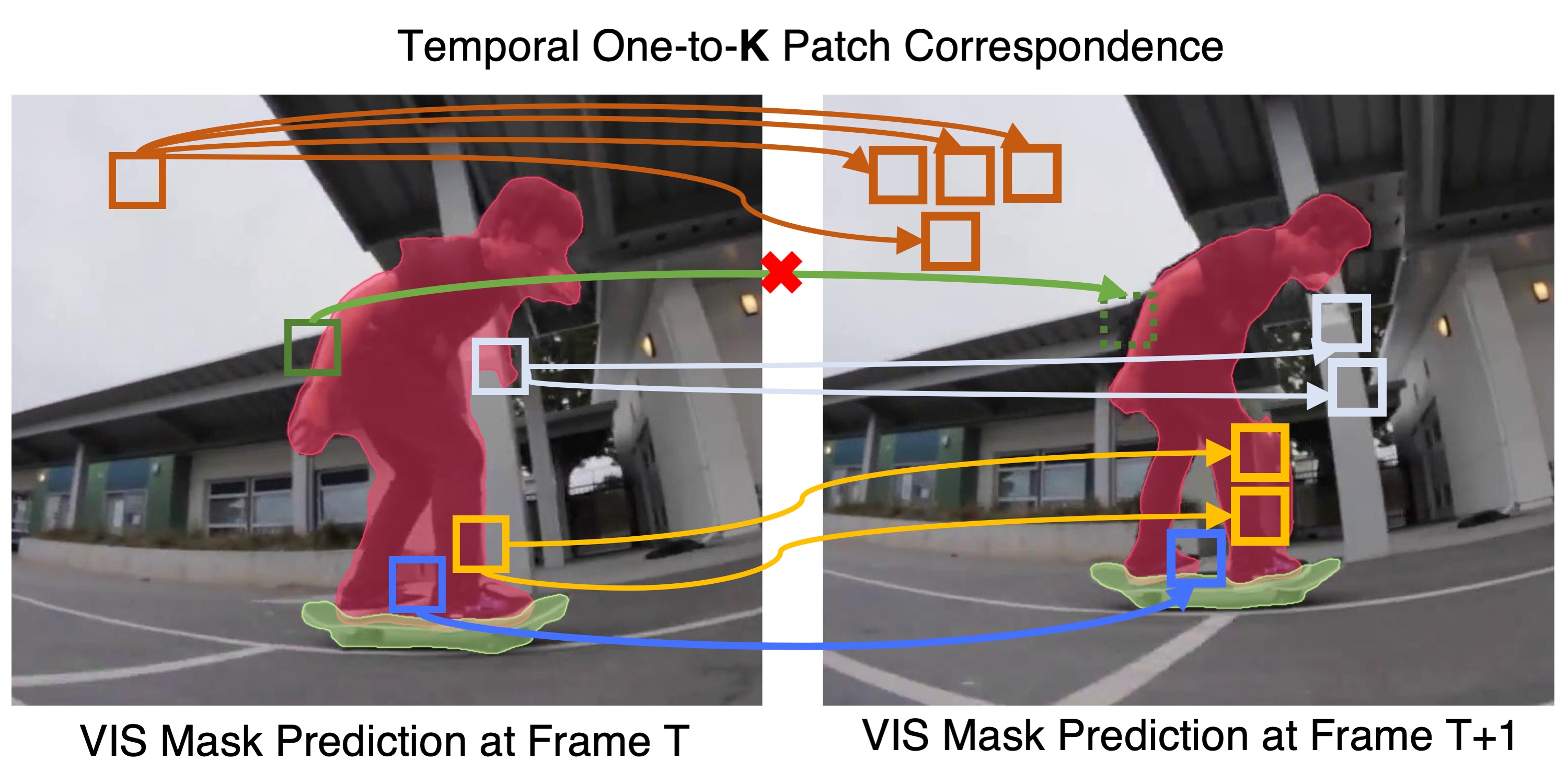

Deep layer aggregation learns to better extract the full spectrum of semantic and spatial information from a network. Iterative connections join neighboring stages to progressively deepen and spatially refine the representation. Hierarchical connections cross stages with trees that span the spectrum of layers to better propagate features and gradients.

Interpolation by iterative deep aggregation. Stages are fused from shallow to deep to make a progressively deeper and higher resolution decoder.

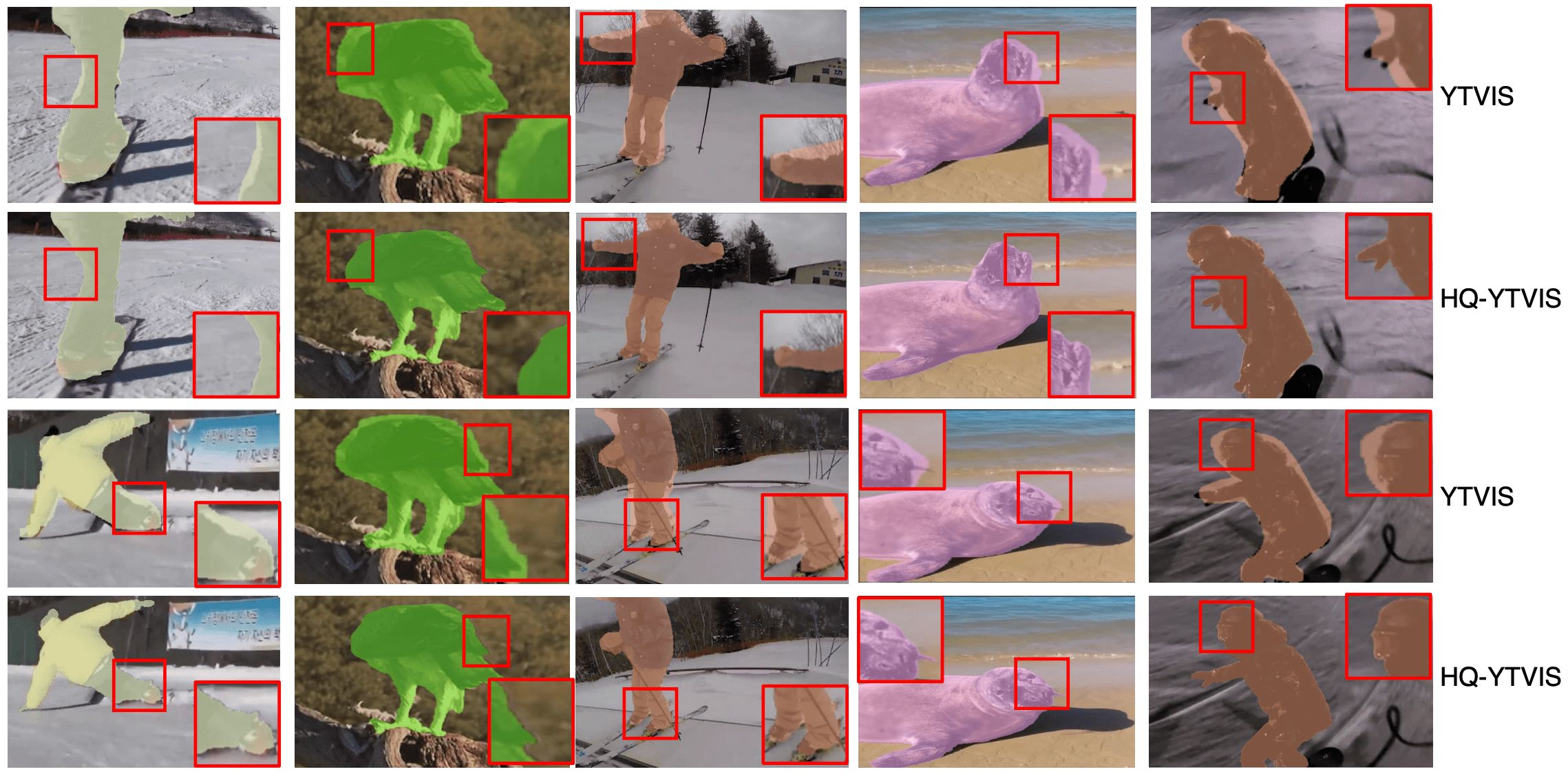

Results

DLA achieves great parameter and computation tradeoff.

It also does well in fine-grained image classification tasks.

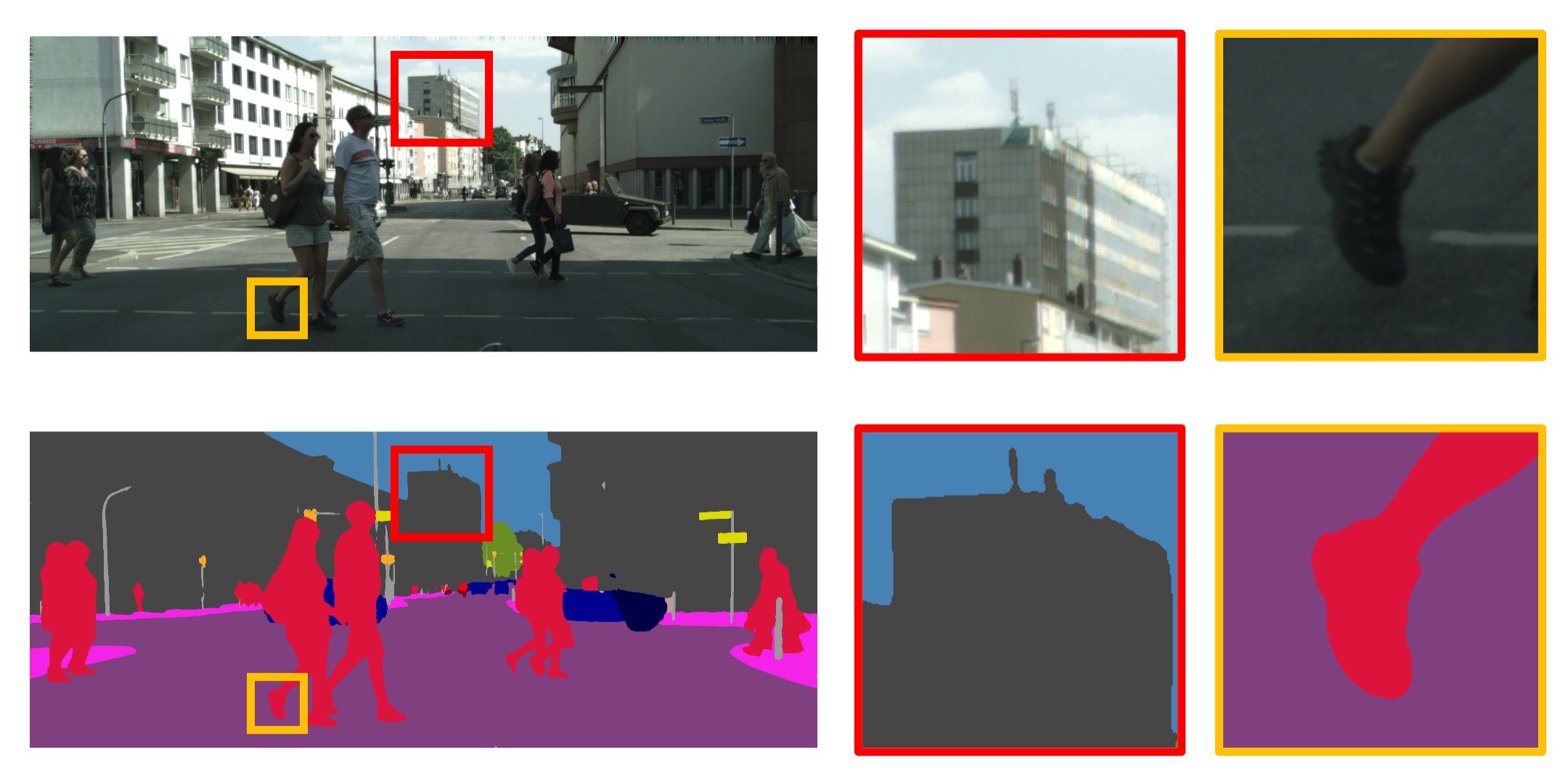

DLA-Up works well on the semantic segmentation task. It can achieve good balance between global context and local details.

We also obtain state-of-the-art results on boundary prediction.

Paper

| Fisher Yu, Dequan Wang, Evan Shelhamer, Trevor Darrell Deep Layer Aggregation CVPR 2018 Oral |

Code

github.com/ucbdrive/dla

DLA is also supported in the following popular packages

It has been notably used in the following popular models

Citation

@inproceedings{yu2018deep,

title={Deep layer aggregation},

author={Yu, Fisher and Wang, Dequan and Shelhamer, Evan and Darrell, Trevor},

booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},

year={2018}

}