Abstract

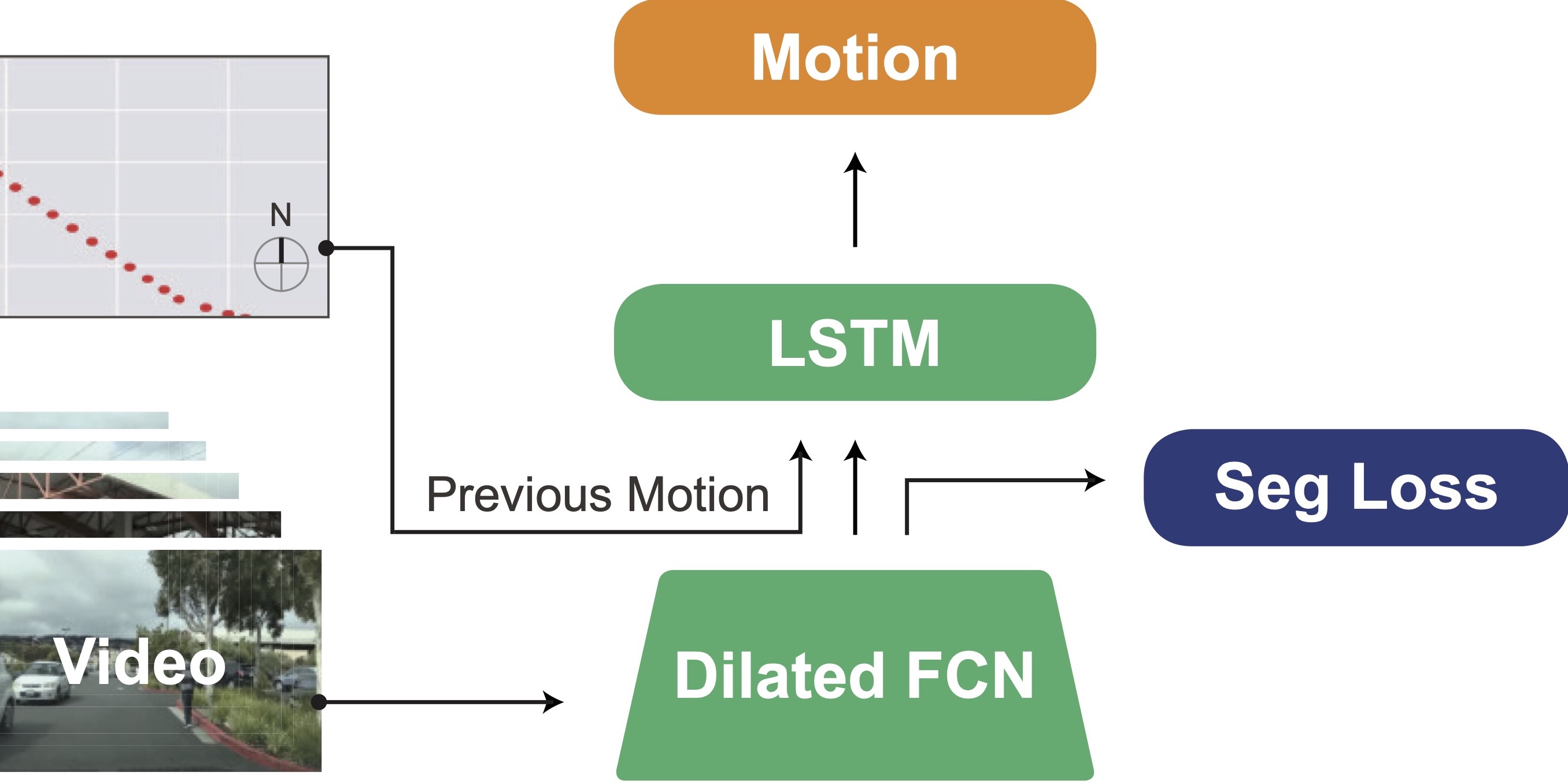

Robust perception-action models should be learned from training data with diverse visual appearances and realistic behaviors, yet current approaches to deep visuomotor policy learning have generally been limited to in-situ models learned from a single vehicle or a simulation environment. We advocate learning a generic vehicle motion model from large-scale crowd-sourced video data, and develop an end-to-end trainable architecture for learning to predict a distribution over future vehicle egomotion from instantaneous monocular camera observations and previous vehicle state. Our model incorporates a novel FCN-LSTM architecture, which can be learned from large-scale crowd-sourced vehicle action data, and leverages available scene segmentation side tasks to improve performance under a privileged learning paradigm.
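The abstract describes the FCN-LSTM only at a high level, so the following is a minimal, dependency-free Python sketch of the data flow it implies, under stated assumptions rather than a reproduction of the authors' code. Assumed here: a single 1x1 convolution with ReLU stands in for the fully convolutional encoder; global average pooling summarizes its features; the pooled features are concatenated with the previous vehicle state and fed to one LSTM cell; and a softmax produces a distribution over a hypothetical discrete action set. A per-pixel segmentation head contributes only an auxiliary training loss, illustrating the privileged-learning idea that segmentation labels help during training but are not needed at inference. All names (`TinyFCNLSTM`, `ACTIONS`, `training_loss`, `seg_weight`) and sizes are illustrative, the weights are random, and no optimizer is included.

```python
import math
import random

# Hypothetical discrete action set (an assumption; not specified in the abstract).
ACTIONS = ["straight", "stop", "left", "right"]

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def cross_entropy(probs, target):
    return -math.log(probs[target])

class TinyFCNLSTM:
    """Toy FCN -> pooling -> LSTM -> action distribution, plus a segmentation side head."""

    def __init__(self, in_ch, feat, hidden, state_dim, n_seg, n_actions, seed=0):
        rng = random.Random(seed)
        self.W_fcn = rand_matrix(feat, in_ch, rng)  # 1x1 conv: same linear map at every pixel
        self.W_seg = rand_matrix(n_seg, feat, rng)  # side task: per-pixel class logits
        self.W_lstm = rand_matrix(4 * hidden, feat + state_dim + hidden, rng)
        self.b_lstm = [0.0] * (4 * hidden)
        self.W_out = rand_matrix(n_actions, hidden, rng)
        self.hidden = hidden

    def fcn(self, image):
        # image: flattened list of pixels, each a list of in_ch channel values
        return [[max(0.0, v) for v in matvec(self.W_fcn, px)] for px in image]

    def step(self, image, prev_state, h, c):
        feats = self.fcn(image)
        pooled = [sum(ch) / len(feats) for ch in zip(*feats)]  # global average pooling
        x = pooled + prev_state + h  # concatenate visual features, vehicle state, hidden state
        gates = [g + b for g, b in zip(matvec(self.W_lstm, x), self.b_lstm)]
        H = self.hidden
        i = [sigmoid(v) for v in gates[0:H]]          # input gate
        f = [sigmoid(v) for v in gates[H:2 * H]]      # forget gate
        o = [sigmoid(v) for v in gates[2 * H:3 * H]]  # output gate
        g = [math.tanh(v) for v in gates[3 * H:4 * H]]  # candidate cell values
        c = [fi * ci + ii * gi for fi, ci, ii, gi in zip(f, c, i, g)]
        h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
        probs = softmax(matvec(self.W_out, h))  # distribution over future actions
        seg_logits = [matvec(self.W_seg, ft) for ft in feats]  # used only for the training loss
        return probs, seg_logits, h, c

def training_loss(model, frames, states, actions, seg_labels, seg_weight=0.1):
    """Mean over frames of action NLL plus a weighted per-pixel segmentation loss."""
    h = [0.0] * model.hidden
    c = [0.0] * model.hidden
    total = 0.0
    for image, state, action, labels in zip(frames, states, actions, seg_labels):
        probs, seg_logits, h, c = model.step(image, state, h, c)
        total += cross_entropy(probs, action)
        seg = sum(cross_entropy(softmax(l), y) for l, y in zip(seg_logits, labels))
        total += seg_weight * seg / len(labels)
    return total / len(frames)

if __name__ == "__main__":
    rng = random.Random(1)
    model = TinyFCNLSTM(in_ch=3, feat=8, hidden=6, state_dim=2, n_seg=3, n_actions=len(ACTIONS))
    image = [[rng.random() for _ in range(3)] for _ in range(16)]  # a 4x4 RGB frame, flattened
    probs, _, _, _ = model.step(image, [0.5, 0.0], [0.0] * 6, [0.0] * 6)
    print(dict(zip(ACTIONS, (round(p, 3) for p in probs))))
```

At inference only `probs` is consumed; the segmentation logits matter only through `training_loss`, which is where the side task can shape the shared encoder features.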

Paper

Huazhe Xu, Yang Gao, Fisher Yu, Trevor Darrell. End-to-end Learning of Driving Models from Large-scale Video Datasets. CVPR 2017 (Oral).

Code

github.com/gy20073/BDD_Driving_Model

Citation

@inproceedings{xu2017end,
  title={End-to-end learning of driving models from large-scale video datasets},
  author={Xu, Huazhe and Gao, Yang and Yu, Fisher and Darrell, Trevor},
  booktitle={Proceedings of the IEEE conference on computer vision and pattern recognition},
  pages={2174--2182},
  year={2017}
}