Abstract

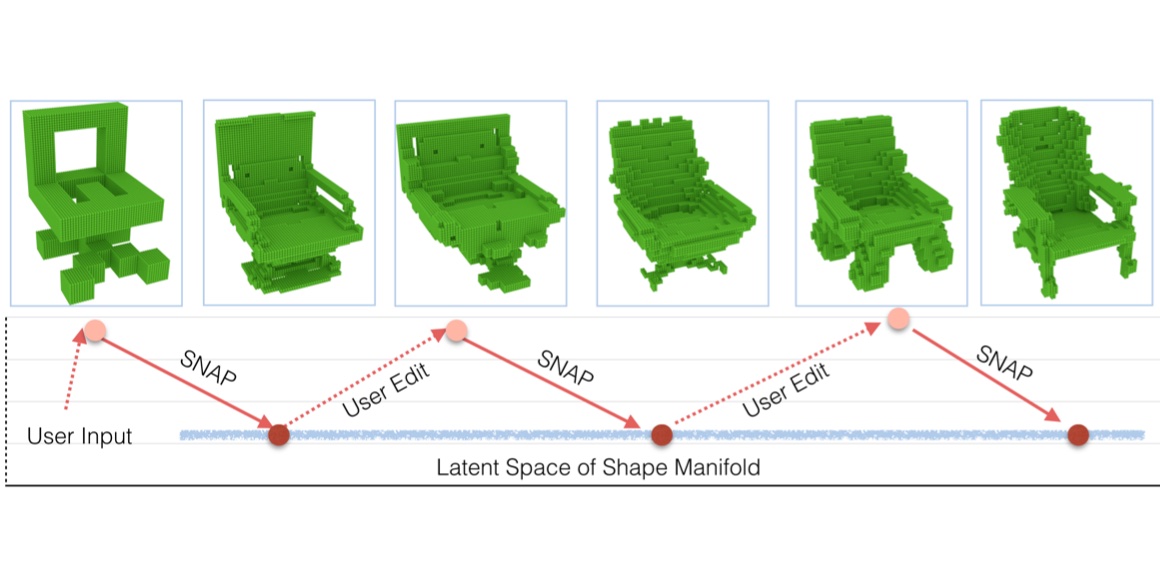

This paper proposes using a generative adversarial network (GAN) to help a novice user design real-world shapes with a simple interface. The user edits a voxel grid with a painting interface (like Minecraft). At any time, he/she can execute a SNAP command, which projects the current voxel grid onto a latent shape manifold with a learned projection operator and then generates a similar but more realistic shape using a learned generator network. The user can then edit the resulting shape and snap again until he/she is satisfied with the result. The main advantage of this approach is that the projection and generation operators help novice users create 3D models characteristic of a background distribution of object shapes without having to specify all the details. The core new research idea is to use a GAN to support this application. 3D GANs have previously been used for shape generation, interpolation, and completion, but never for interactive modeling. The new challenge for this application is to learn a projection operator that takes an arbitrary 3D voxel model and produces a latent vector on the shape manifold from which a similar and realistic shape can be generated. We develop algorithms for this and the other steps of the SNAP processing pipeline and integrate them into a simple modeling tool. Experiments with these algorithms and the tool suggest that GANs provide a promising approach to computer-assisted interactive modeling.
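The SNAP step is a composition of two operators: a projection from an arbitrary voxel grid to a latent vector, followed by a generator that maps the latent vector back to voxels. The toy sketch below illustrates only that composition and is not the paper's method. It assumes a hypothetical three-number latent space (box width, depth, height), a hand-written `generate` in place of the learned generator network, and a brute-force IoU search in `project` in place of the learned projection network. All names (`N`, `generate`, `iou`, `project`, `snap`) are hypothetical.

```python
from itertools import product

N = 8  # hypothetical voxel grid resolution (N x N x N)

def generate(z):
    """Toy stand-in for the learned generator: latent z = (w, d, h) -> set of filled voxels of a w x d x h box."""
    w, d, h = z
    return {(x, y, k) for x in range(w) for y in range(d) for k in range(h)}

def iou(a, b):
    """Intersection-over-union of two voxel sets (1.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def project(voxels):
    """Toy stand-in for the learned projection operator: exhaustively search
    the tiny latent space for the latent whose generated shape best matches the input."""
    latents = product(range(1, N + 1), repeat=3)
    return max(latents, key=lambda z: iou(voxels, generate(z)))

def snap(voxels):
    """SNAP = generate(project(voxels)): replace a rough edit with a 'realistic' shape."""
    return generate(project(voxels))

# A rough, user-painted 4 x 3 x 2 box with one missing voxel and one stray voxel.
rough = (generate((4, 3, 2)) - {(0, 0, 0)}) | {(6, 6, 6)}
snapped = snap(rough)
```

Here the rough edit snaps back to the clean 4×3×2 box, and the user could edit `snapped` and call `snap` again. Exhaustive search is only feasible because this latent space has 512 points; for a real high-dimensional latent space it would be intractable, which is one motivation for learning a projection operator instead.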

Paper

Jerry Liu, Fisher Yu, Thomas Funkhouser. *Interactive 3D Modeling with a Generative Adversarial Network*. 3DV 2017.

Citation

@inproceedings{liu2017interactive,
  title={Interactive 3D modeling with a generative adversarial network},
  author={Liu, Jerry and Yu, Fisher and Funkhouser, Thomas},
  booktitle={2017 International Conference on 3D Vision (3DV)},
  pages={126--134},
  year={2017},
  organization={IEEE}
}