Abstract

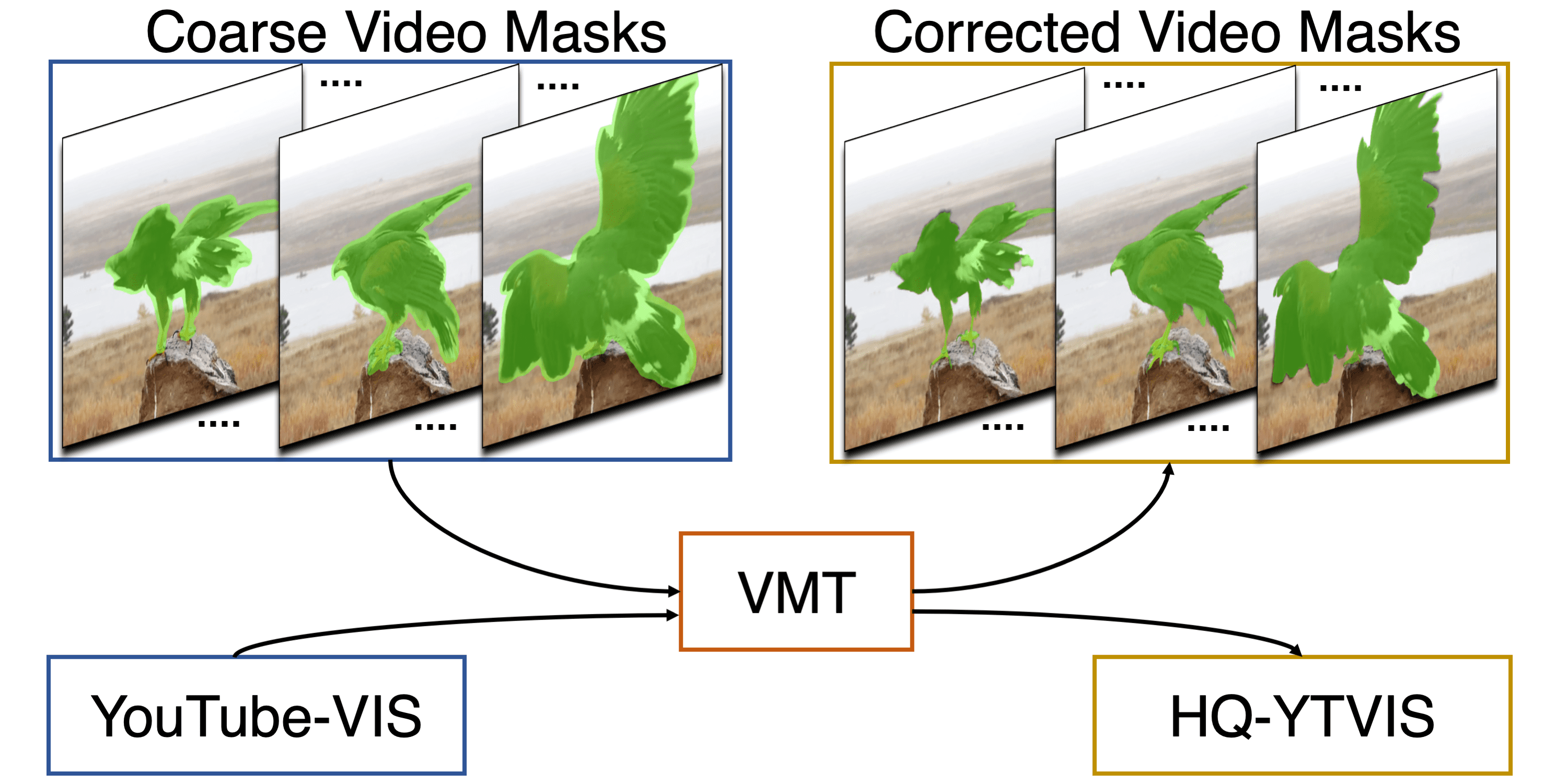

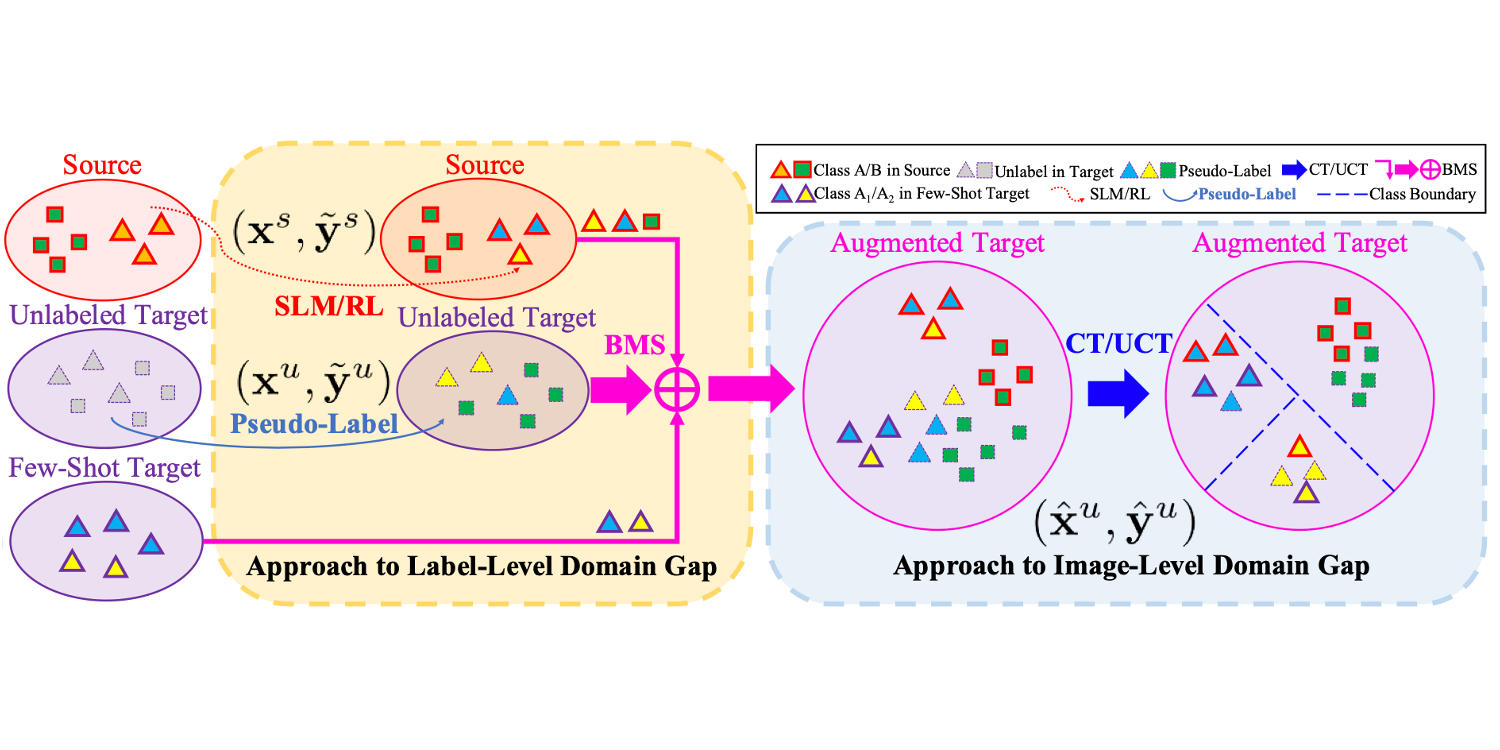

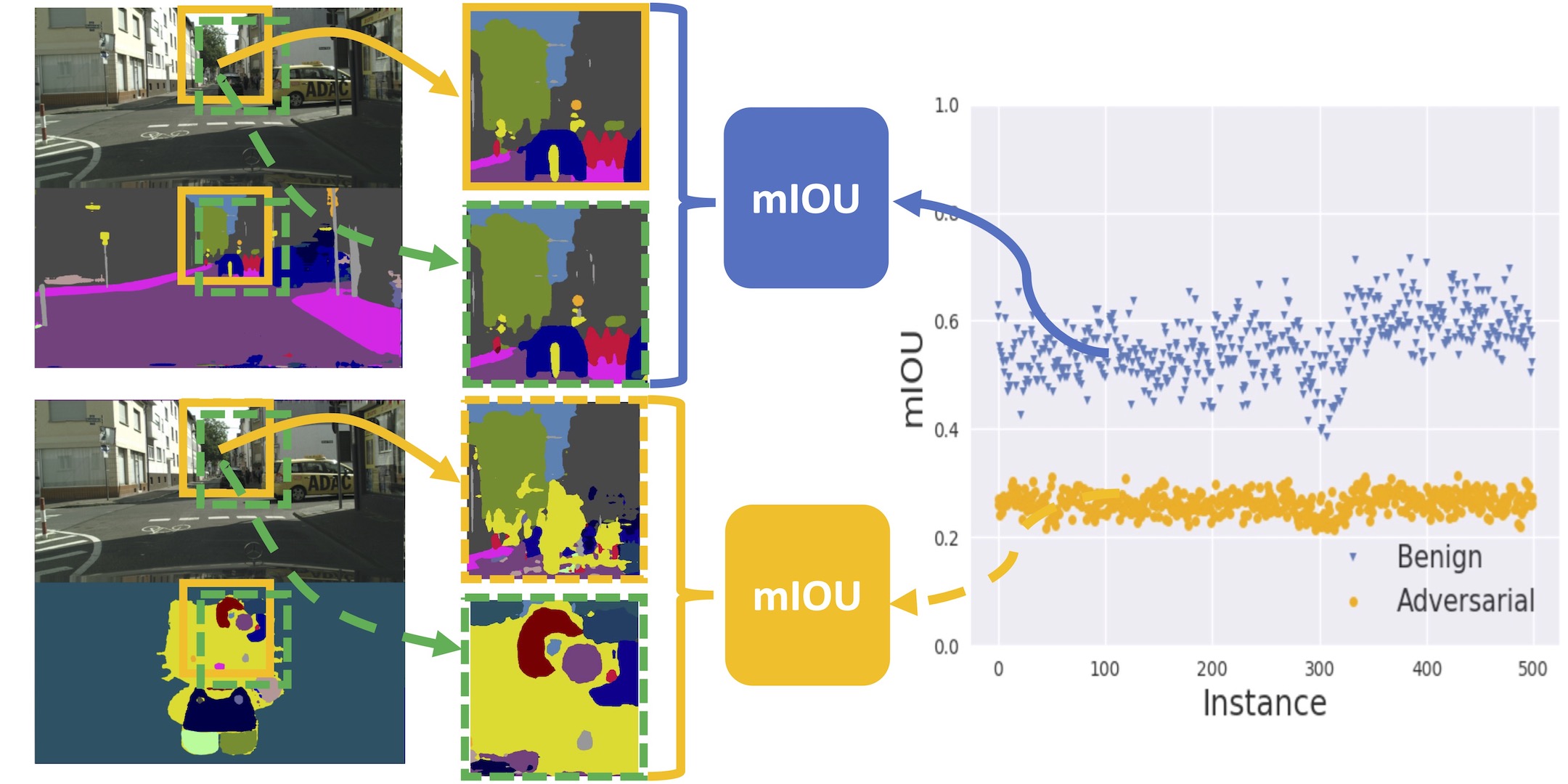

Recent progress in Video Instance Segmentation (VIS) has largely been driven by deeper and increasingly data-hungry transformer-based models. However, video masks are tedious and expensive to annotate, which limits the scale and diversity of existing VIS datasets. In this work, we aim to remove the mask-annotation requirement. We propose MaskFreeVIS, which achieves highly competitive VIS performance while using only bounding box annotations for the object state. We leverage the rich temporal mask consistency constraints in videos by introducing the Temporal KNN-patch Loss (TK-Loss), which provides strong mask supervision without any labels. TK-Loss finds one-to-many matches across frames through an efficient patch-matching step followed by a K-nearest neighbor selection, and then enforces a consistency loss on the found matches. Our mask-free objective is simple to implement, has no trainable parameters and is computationally efficient, yet it outperforms baselines that enforce temporal mask consistency with, e.g., state-of-the-art optical flow. We validate MaskFreeVIS on the YouTube-VIS 2019/2021, OVIS and BDD100K MOTS benchmarks. The results demonstrate the efficacy of our method, which drastically narrows the gap between fully- and weakly-supervised VIS performance.
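The patch-matching step followed by K-nearest neighbor selection can be illustrated with a small pure-Python sketch. It is not the released implementation: the square search window, the 3x3 RGB patches, the Euclidean patch distance and the `max_dist` confidence cutoff are assumptions chosen for illustration, and `extract_patch`, `patch_distance` and `knn_matches` are hypothetical helper names.

```python
import math
from typing import List, Tuple

RGB = Tuple[float, float, float]
Image = List[List[RGB]]  # H x W grid of RGB values in [0, 1]


def extract_patch(img: Image, y: int, x: int, r: int = 1) -> List[float]:
    """Flatten the (2r+1) x (2r+1) RGB patch centred at (y, x), clamping at borders."""
    h, w = len(img), len(img[0])
    values: List[float] = []
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            yy = min(max(y + dy, 0), h - 1)
            xx = min(max(x + dx, 0), w - 1)
            values.extend(img[yy][xx])
    return values


def patch_distance(a: List[float], b: List[float]) -> float:
    """Euclidean distance between two flattened patches (assumed affinity measure)."""
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)))


def knn_matches(img_t: Image, img_s: Image, y: int, x: int,
                radius: int = 2, k: int = 3,
                max_dist: float = 0.5) -> List[Tuple[int, int]]:
    """One-to-many matches in frame s for pixel (y, x) of frame t.

    Candidates come from a square window of the given radius around (y, x);
    the k closest patches are kept, and any whose distance exceeds max_dist
    is dropped as a low-confidence match (hypothetical threshold).
    """
    h, w = len(img_s), len(img_s[0])
    query = extract_patch(img_t, y, x)
    candidates = []
    for yy in range(max(0, y - radius), min(h, y + radius + 1)):
        for xx in range(max(0, x - radius), min(w, x + radius + 1)):
            d = patch_distance(query, extract_patch(img_s, yy, xx))
            candidates.append((d, yy, xx))
    candidates.sort()  # ascending distance; ties broken by (row, column)
    return [(yy, xx) for d, yy, xx in candidates[:k] if d <= max_dist]
```

For example, if frame t is black except for a white pixel at (2, 2) and that pixel moves one step right in frame s, the query at (2, 2) yields the single match (2, 3), whereas a pixel in a uniform black area matches up to k equally good background positions, which is the one-to-many case.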

Highlight

Video Intro

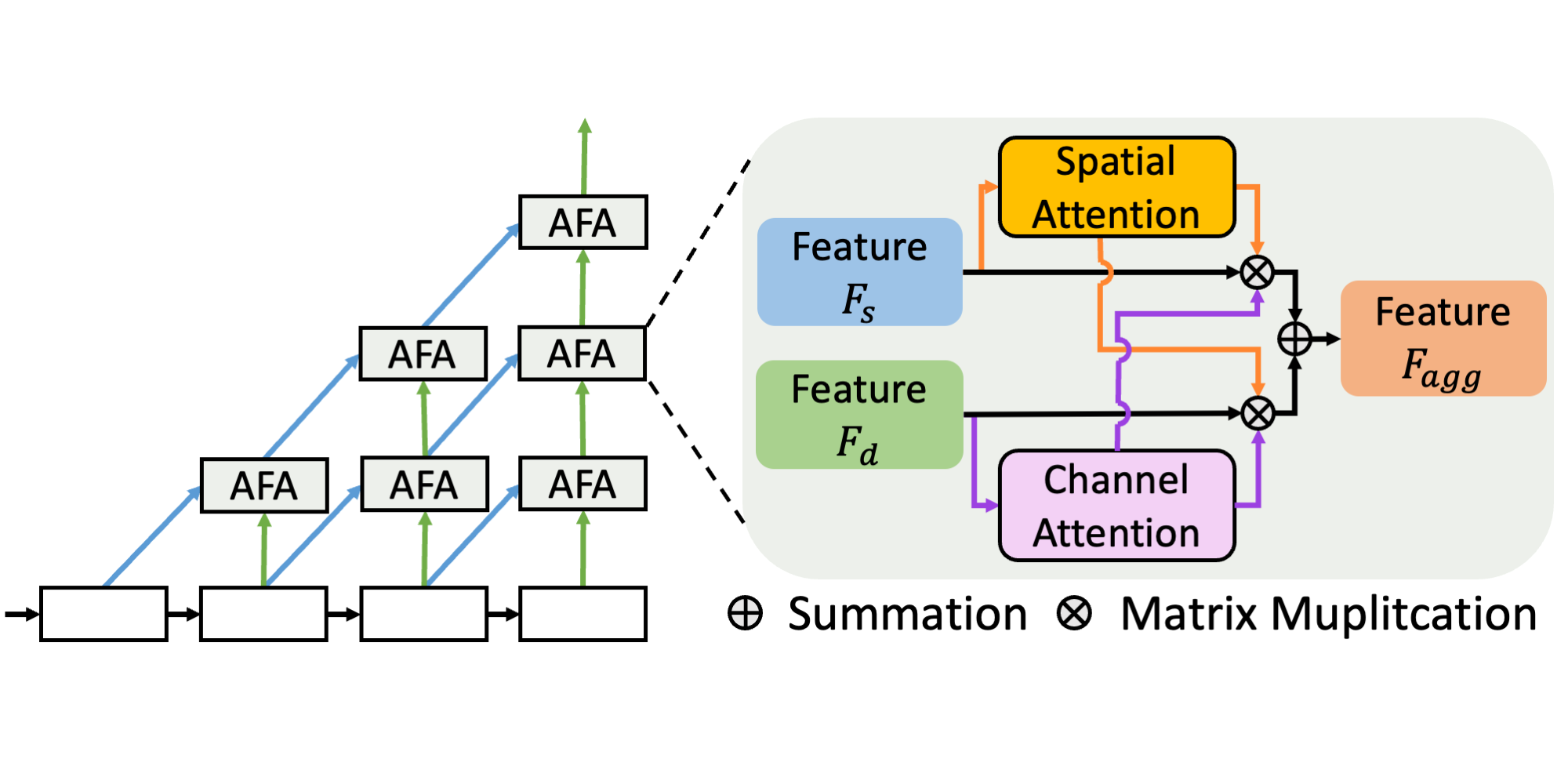

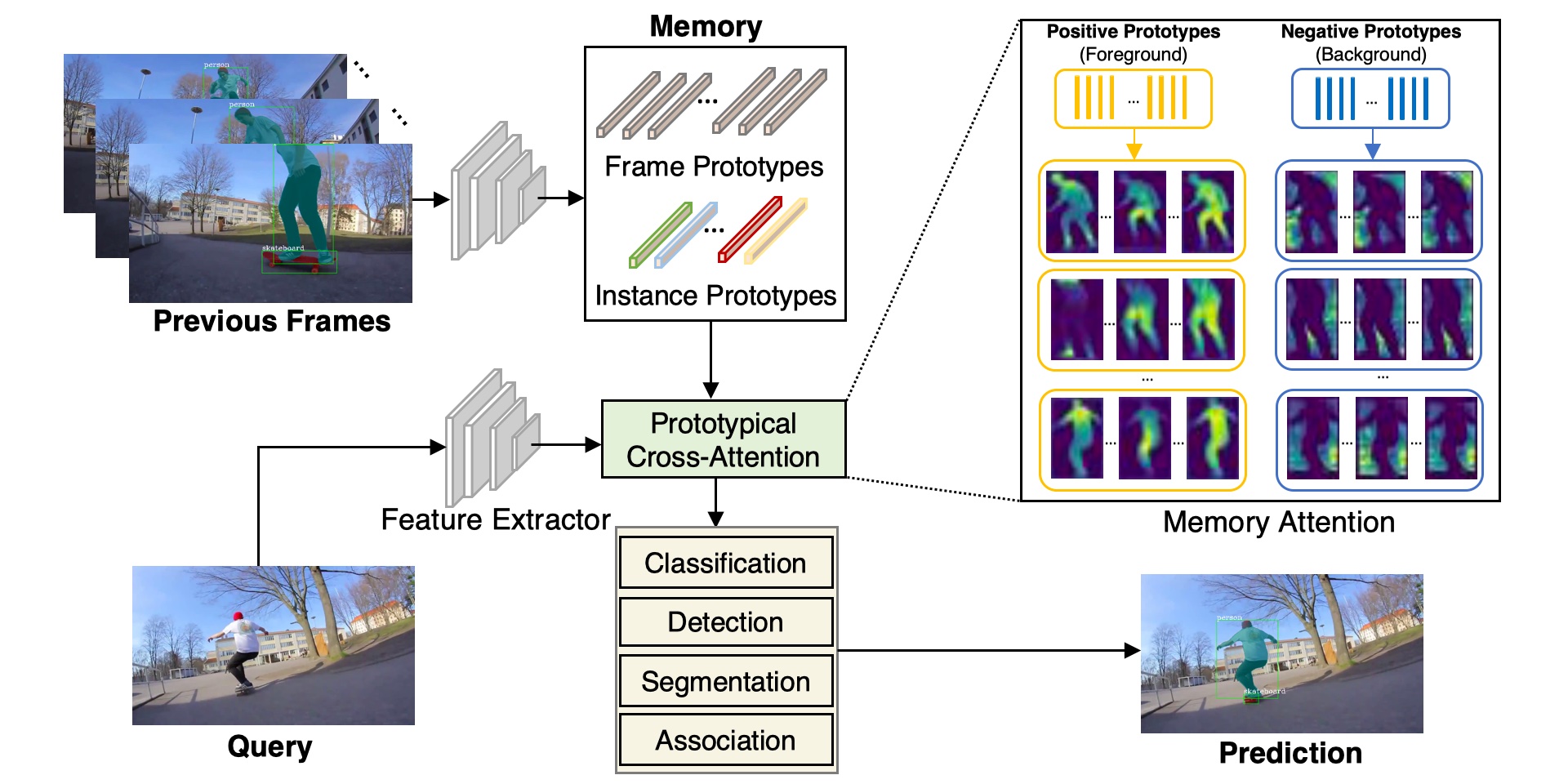

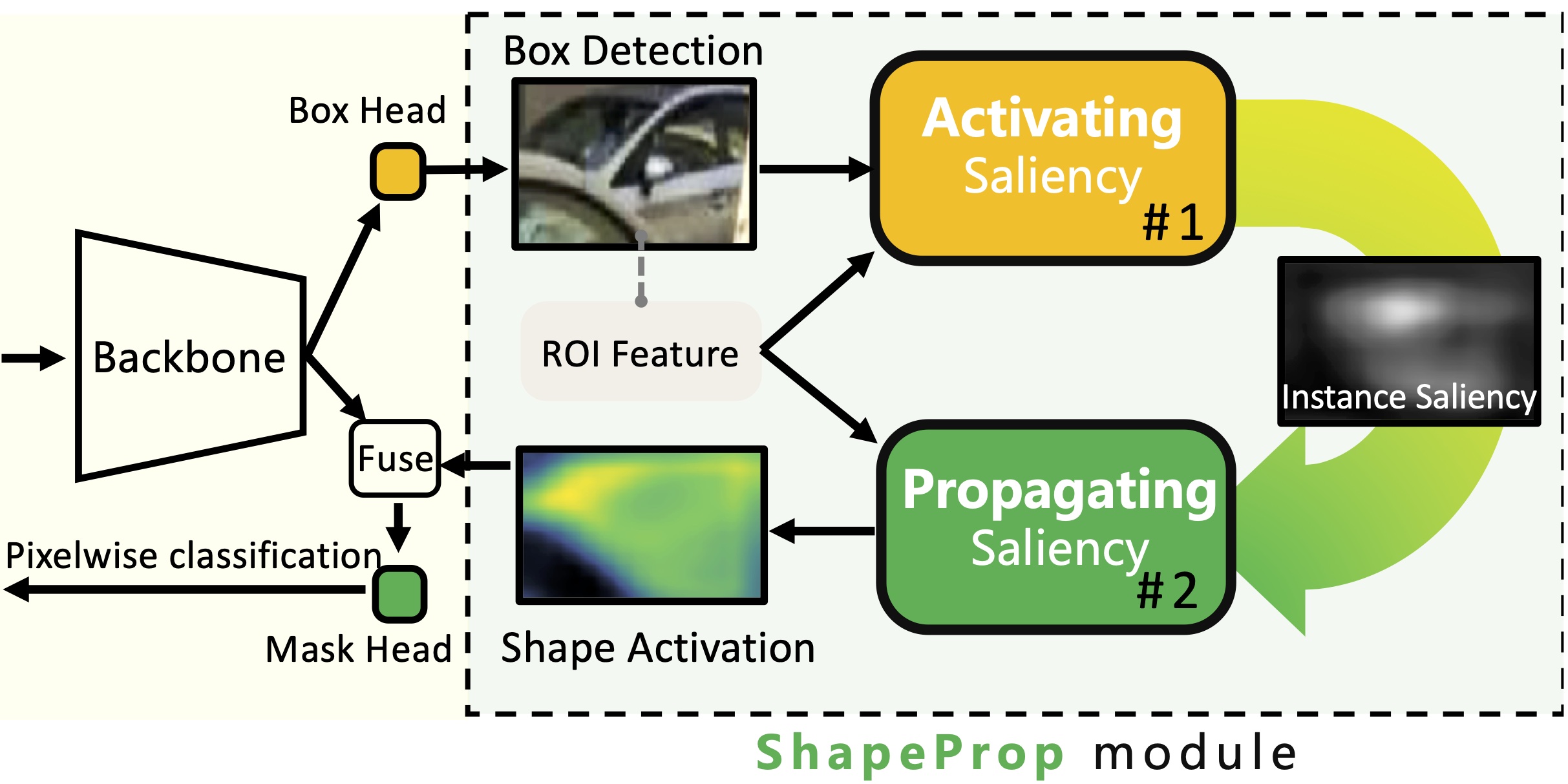

Method

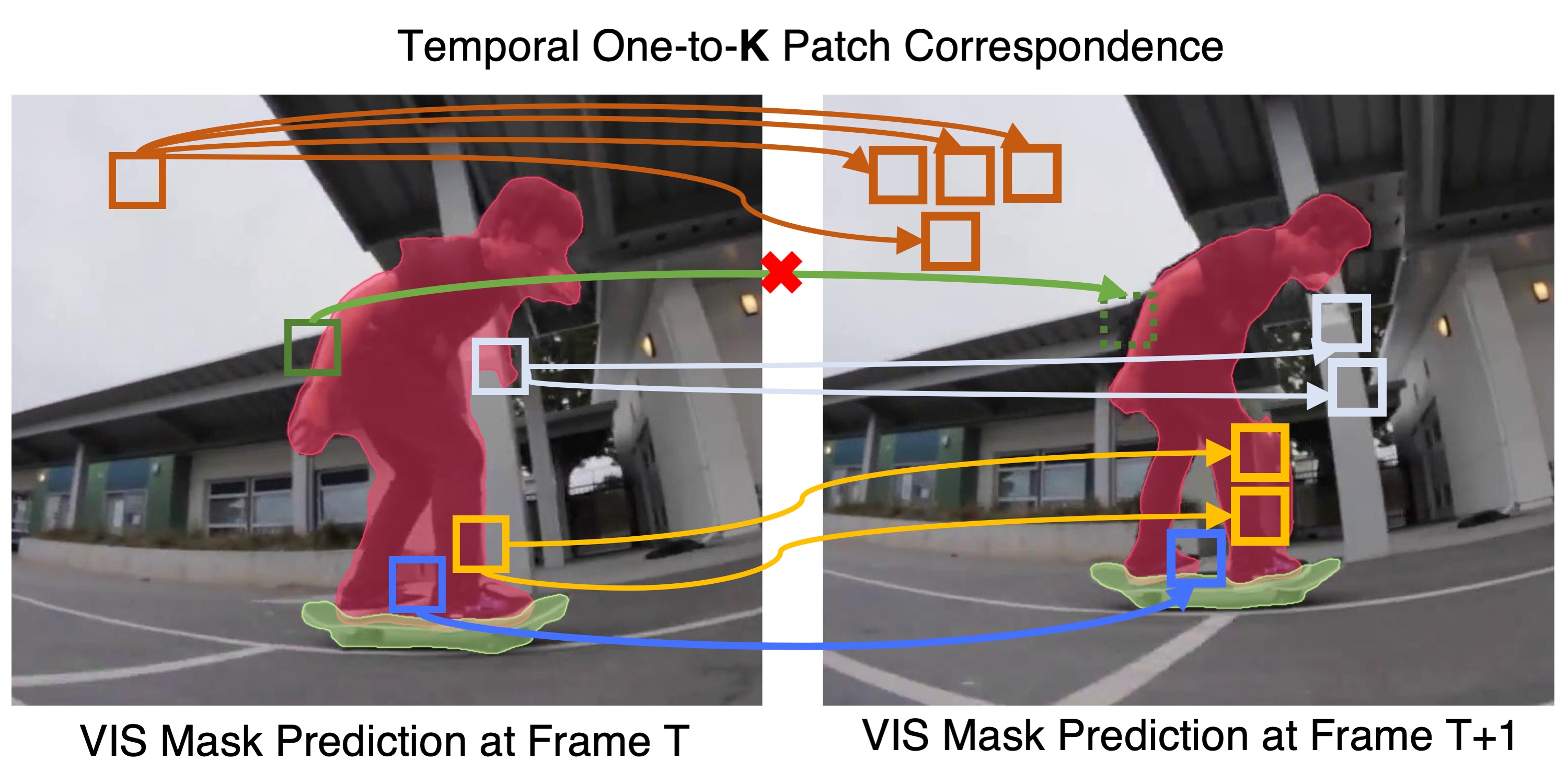

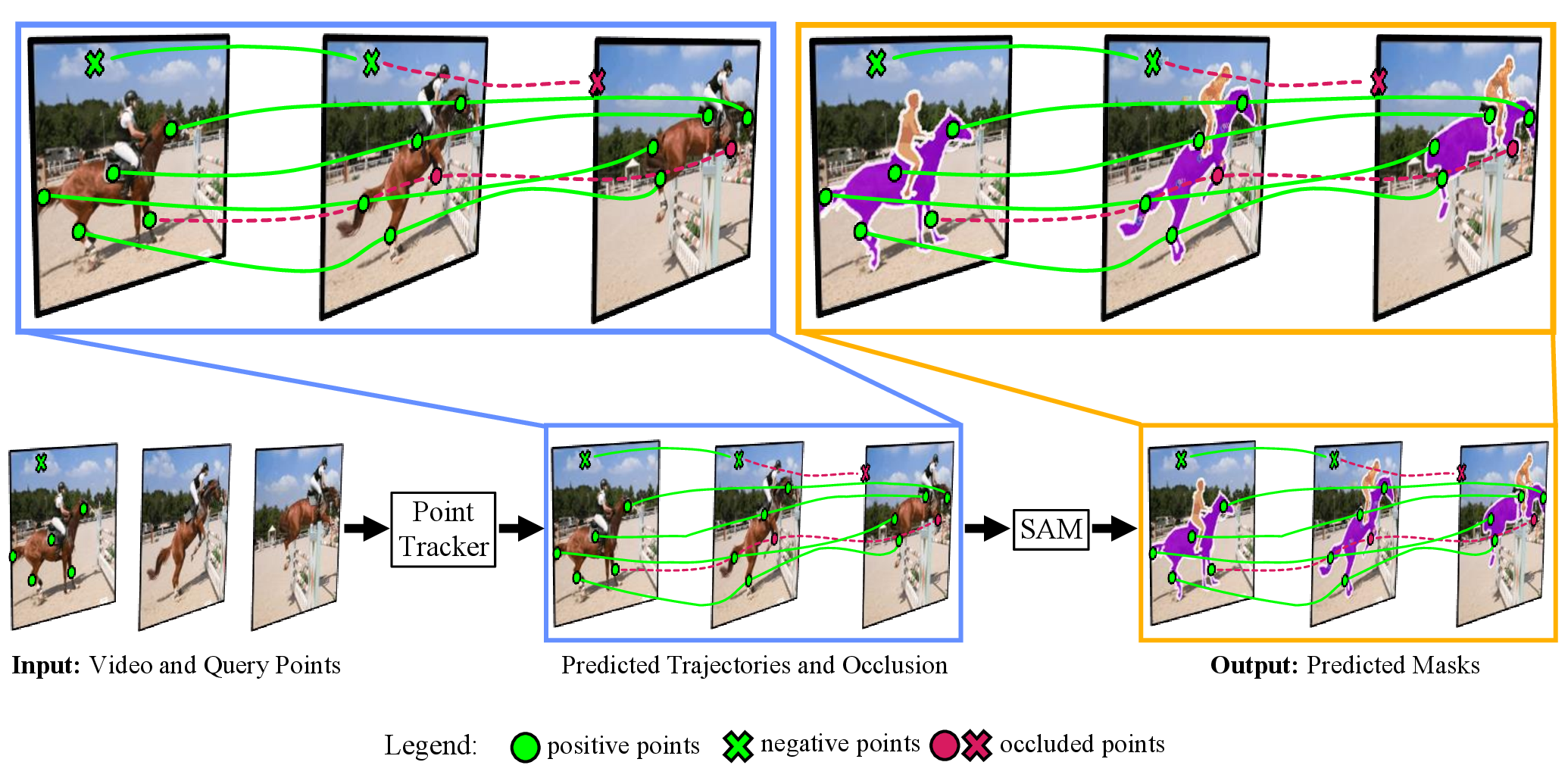

TK-Loss has four steps:

1. Patch Candidate Extraction: search for patch candidates across frames within a radius R.
2. Temporal KNN-Matching: match the K high-confidence candidates with the highest patch affinities.
3. KNN-Consistency Loss: enforce the mask consistency objective among the matches.
4. Cyclic Tube Connection: aggregate the loss temporally across the frames.
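Steps 3 and 4 can be sketched as follows, taking the matches produced by steps 1 and 2 as given. This is a hedged illustration rather than the authors' code: the per-match term -log(p*q + (1-p)(1-q)) (the negative log-probability that two matched pixels receive the same label), the `eps` clamp, the cyclic pairing (0, 1), ..., (T-1, 0), the dictionary layout of `matches` and the plain averaging are all assumptions, and the function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Pixel = Tuple[int, int]
Mask = List[List[float]]  # H x W foreground probabilities for one object
Matches = Dict[Tuple[int, int], List[Tuple[Pixel, Pixel]]]


def pair_consistency(p: float, q: float, eps: float = 1e-6) -> float:
    """Assumed consistency term for one match: -log P(both pixels share a label)."""
    same = p * q + (1.0 - p) * (1.0 - q)
    return -math.log(max(same, eps))


def tk_consistency_loss(masks: List[Mask], matches: Matches) -> float:
    """Average the consistency term over a cyclic tube of frame pairs.

    matches[(t, s)] lists ((y, x), (ys, xs)) pairs; one pixel of frame t may
    appear in several pairs (one-to-many matching).
    """
    num_frames = len(masks)
    total, count = 0.0, 0
    for t in range(num_frames):
        s = (t + 1) % num_frames  # the last frame connects back to the first
        for (y, x), (ys, xs) in matches.get((t, s), []):
            total += pair_consistency(masks[t][y][x], masks[s][ys][xs])
            count += 1
    return total / count if count else 0.0
```

Averaging over all matched pairs keeps the loss scale independent of how many matches each frame pair produces; a different normalization would also be a reasonable choice.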


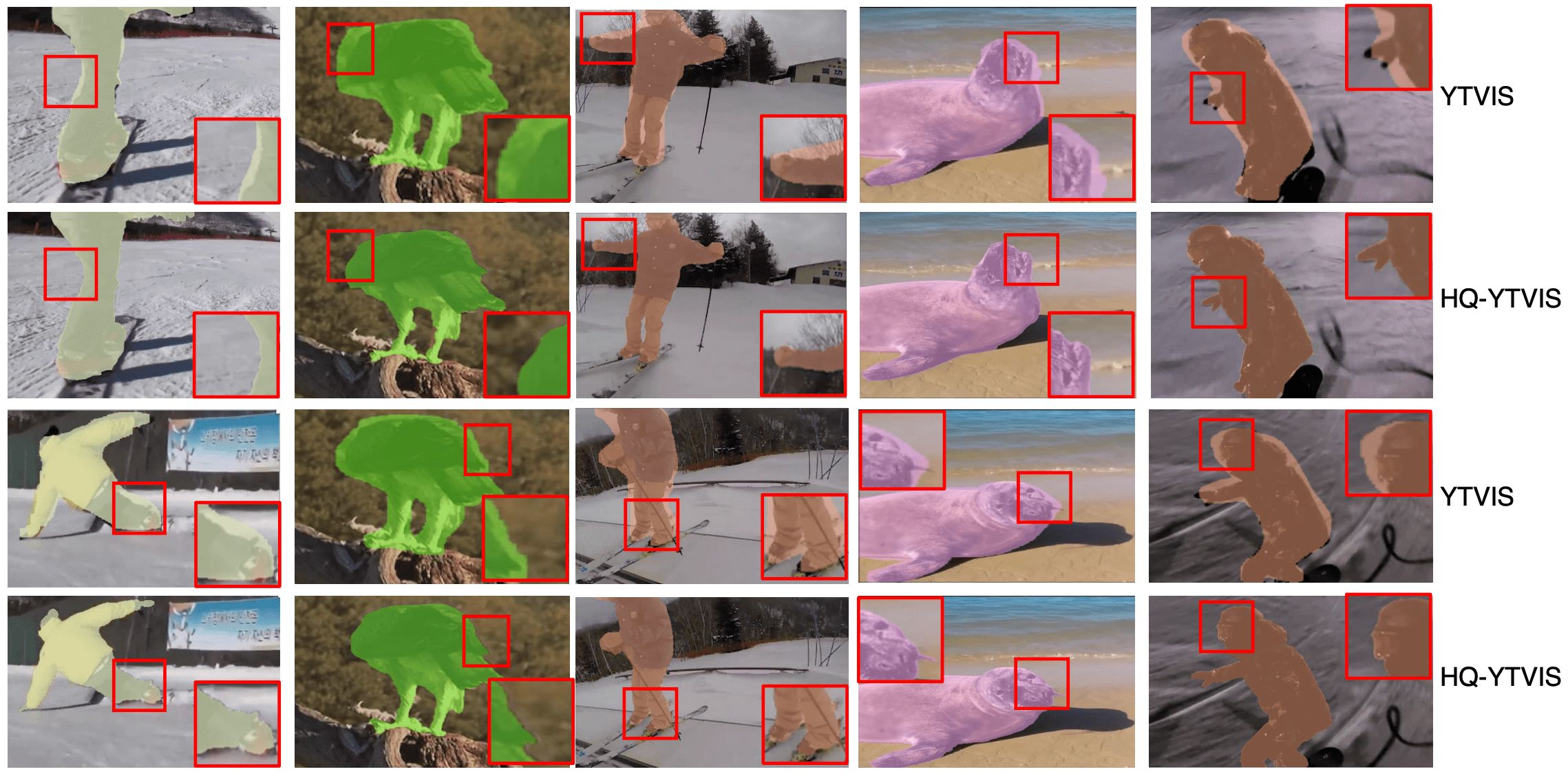


Paper

Lei Ke, Martin Danelljan, Henghui Ding, Yu-Wing Tai, Chi-Keung Tang, Fisher Yu. Mask-Free Video Instance Segmentation. CVPR 2023.

Code

The code and models of MaskFreeVIS are available at:

github.com/SysCV/maskfreevis

Citation

@inproceedings{maskfreevis,
  author = {Ke, Lei and Danelljan, Martin and Ding, Henghui and Tai, Yu-Wing and Tang, Chi-Keung and Yu, Fisher},
  title = {Mask-Free Video Instance Segmentation},
  booktitle = {CVPR},
  year = {2023}
}