Abstract

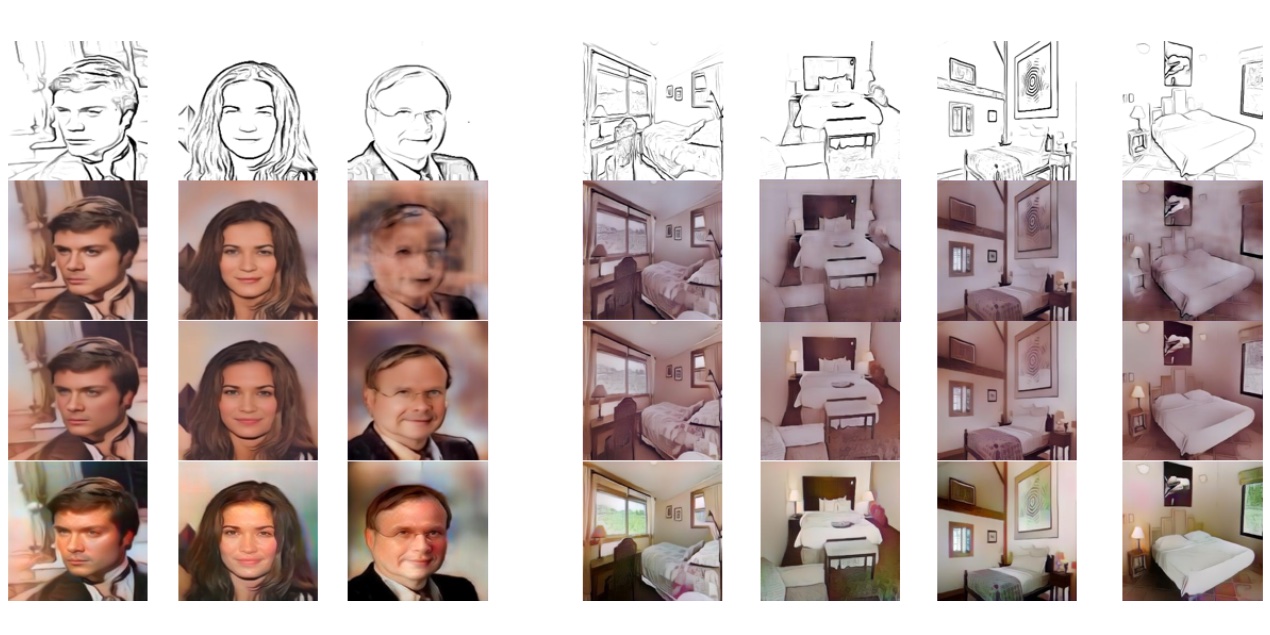

This paper introduces an automatic method for editing a portrait photo so that the subject appears to be wearing makeup in the style of another person in a reference photo. Our unsupervised learning approach relies on a new framework of cycle-consistent generative adversarial networks. Unlike the image domain transfer problem, our style transfer problem involves two asymmetric functions: a forward function encodes example-based style transfer, whereas a backward function removes the style. We construct two coupled networks to implement these functions, one that transfers makeup style and a second that removes makeup, such that the output of their successive application to an input photo matches the input. The learned style network can then quickly apply an arbitrary makeup style to an arbitrary photo. We demonstrate the method's effectiveness on a broad range of portraits and styles.
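The asymmetry and the cycle constraint described in the abstract can be illustrated with a small sketch. This is not the authors' implementation (their code is not public). It assumes a toy image layout in which channels 0 to 2 hold the face and channel 3 holds a "makeup layer", and the names `apply_makeup`, `remove_makeup`, and `cycle_consistency_loss` are hypothetical stand-ins for the learned networks and their training objective.

```python
import numpy as np

def apply_makeup(source, reference):
    # Toy stand-in for the forward network: it takes TWO inputs,
    # the source photo and the reference photo carrying the style.
    out = source.copy()
    out[..., 3] = reference[..., 3]  # copy the assumed "makeup layer"
    return out

def remove_makeup(image):
    # Toy stand-in for the backward network: it takes ONE input.
    out = image.copy()
    out[..., 3] = 0.0  # clear the assumed "makeup layer"
    return out

def cycle_consistency_loss(source, reference, forward, backward):
    # Mean absolute difference between the source photo and the result
    # of applying the style and then removing it again.
    styled = forward(source, reference)
    restored = backward(styled)
    return float(np.mean(np.abs(restored - source)))

rng = np.random.default_rng(0)
source = rng.random((4, 4, 4))
source[..., 3] = 0.0                 # the source photo has no makeup
reference = rng.random((4, 4, 4))    # the reference photo has makeup

loss = cycle_consistency_loss(source, reference, apply_makeup, remove_makeup)
```

In this toy setting the loss is zero because removal exactly undoes application. In a learned setting, the two networks would only approximate this, and a loss of this kind would be one way to push them toward it.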

Code

Unfortunately, the code is not publicly available because part of it is proprietary.

Paper

Huiwen Chang, Jingwan Lu, Fisher Yu, Adam Finkelstein. PairedCycleGAN: Asymmetric Style Transfer for Applying and Removing Makeup. CVPR 2018.

Citation

@InProceedings{Chang_2018_CVPR,
  author = {Chang, Huiwen and Lu, Jingwan and Yu, Fisher and Finkelstein, Adam},
  title = {PairedCycleGAN: Asymmetric Style Transfer for Applying and Removing Makeup},
  booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2018}
}