Abstract

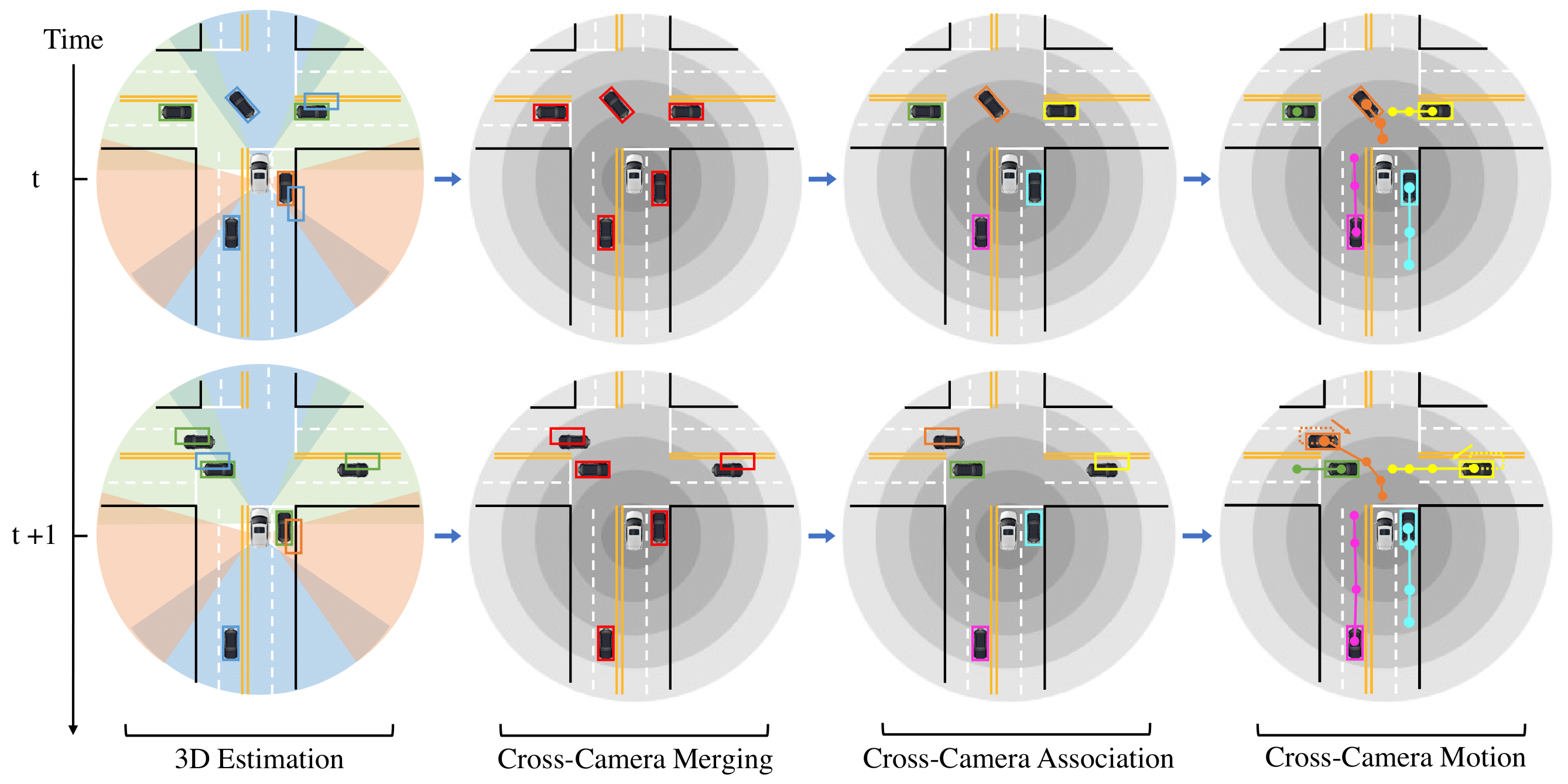

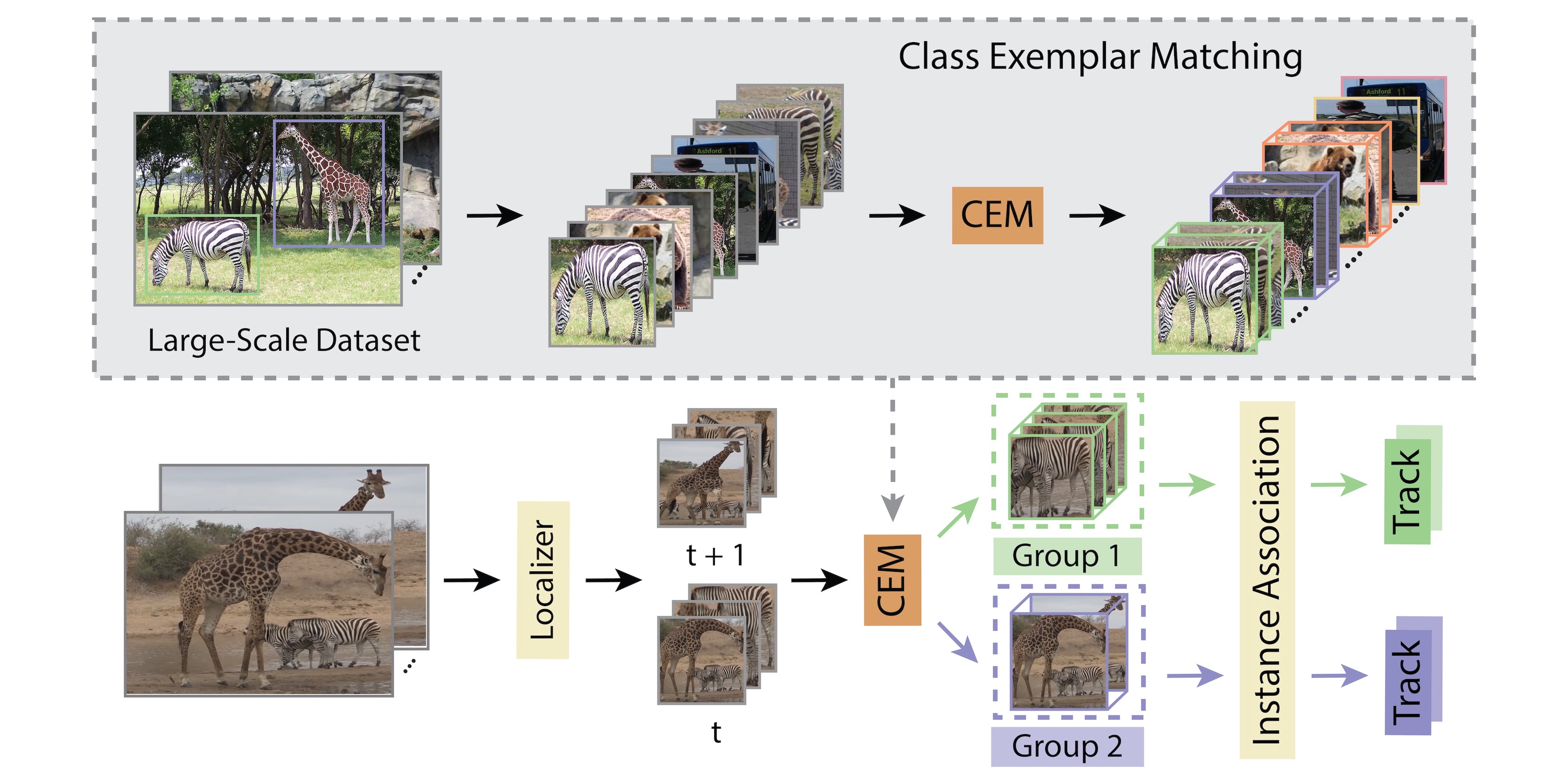

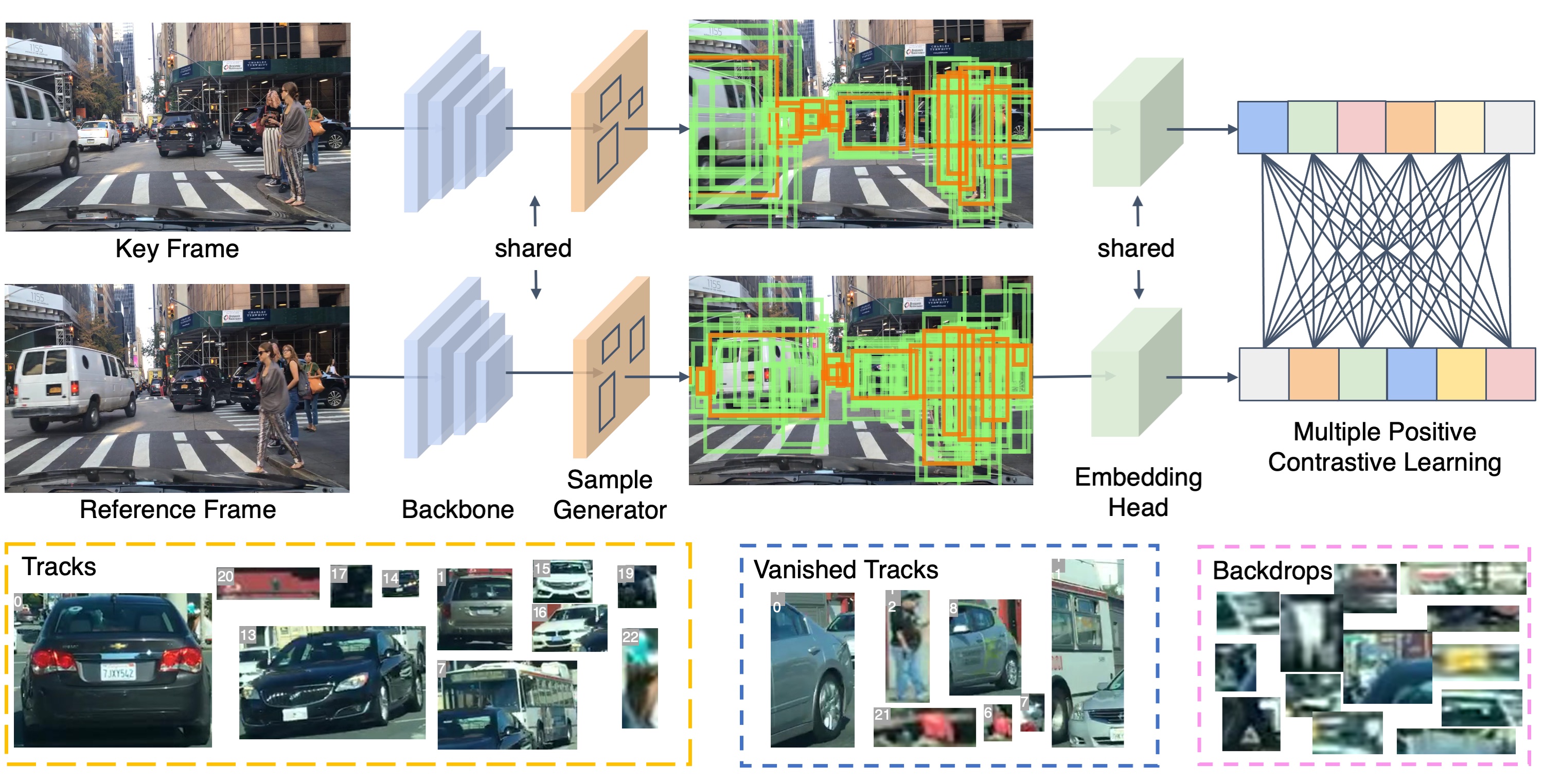

A reliable and accurate 3D tracking framework is essential for predicting future locations of surrounding objects and planning the observer’s actions in numerous applications such as autonomous driving. We propose a framework that can effectively associate moving objects over time and estimate their full 3D bounding box information from a sequence of 2D images captured on a moving platform. The object association leverages quasi-dense similarity learning to identify objects in various poses and viewpoints with appearance cues only. After the initial 2D association, we further utilize depth-ordering heuristics on the 3D bounding boxes for robust instance association and motion-based 3D trajectory prediction for re-identification of occluded vehicles. Finally, an LSTM-based object velocity learning module aggregates the long-term trajectory information for more accurate motion extrapolation. Experiments on our proposed simulation data and on real-world benchmarks, including the KITTI, nuScenes, and Waymo datasets, show that our tracking framework offers robust object association and tracking in urban driving scenarios. On the Waymo Open benchmark, we establish the first camera-only baseline in the 3D tracking and 3D detection challenges. On the nuScenes 3D tracking benchmark, our quasi-dense 3D tracking pipeline reaches nearly five times the tracking accuracy of the best vision-only submission among all published methods.
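To make the appearance-only association step more concrete, here is a minimal sketch of matching new detections to existing tracks by embedding similarity. It is not the QD-3DT implementation: the function names (`cosine`, `associate`), the `min_sim` threshold, and the greedy cosine-similarity matching are assumptions chosen for illustration. The depth-ordering heuristics, motion-based re-identification, and LSTM velocity module described above are not modeled here.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def associate(tracks, detections, min_sim=0.5):
    """Greedily match detections to tracks by appearance similarity.

    tracks:     dict mapping a track id to its appearance embedding
    detections: list of appearance embeddings for the current frame
    min_sim:    hypothetical threshold below which no match is made

    Returns a dict mapping detection index -> track id. Detections that
    are missing from the result would start new tracks.
    """
    # Score every (track, detection) pair.
    pairs = [
        (cosine(emb, det), track_id, det_idx)
        for track_id, emb in tracks.items()
        for det_idx, det in enumerate(detections)
    ]
    # Most similar pairs are claimed first.
    pairs.sort(key=lambda p: p[0], reverse=True)

    matches = {}
    used_tracks = set()
    for sim, track_id, det_idx in pairs:
        if sim < min_sim:
            break  # all remaining pairs are even less similar
        if track_id in used_tracks or det_idx in matches:
            continue  # each track and each detection is matched at most once
        matches[det_idx] = track_id
        used_tracks.add(track_id)
    return matches


# Toy example: two tracks, three detections (the last resembles neither track).
tracks = {1: [1.0, 0.0], 2: [0.0, 1.0]}
detections = [[0.1, 0.9], [0.9, 0.1], [-1.0, -1.0]]
matches = associate(tracks, detections)
```

In this toy example, detection 0 is assigned to track 2 and detection 1 to track 1, while detection 2 stays unmatched. A greedy pass is used only to keep the sketch short; an optimal assignment (for example, the Hungarian algorithm) would be a natural alternative.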

Video

Paper

Hou-Ning Hu, Yung-Hsu Yang, Tobias Fischer, Trevor Darrell, Fisher Yu, Min Sun. Monocular Quasi-Dense 3D Object Tracking. TPAMI 2022.

Code

github.com/SysCV/qd-3dt

Citation

@article{Hu2021QD3DT,
  author = {Hu, Hou-Ning and Yang, Yung-Hsu and Fischer, Tobias and Darrell, Trevor and Yu, Fisher and Sun, Min},
  title = {Monocular Quasi-Dense 3D Object Tracking},
  journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},
  year = {2022}
}