Abstract

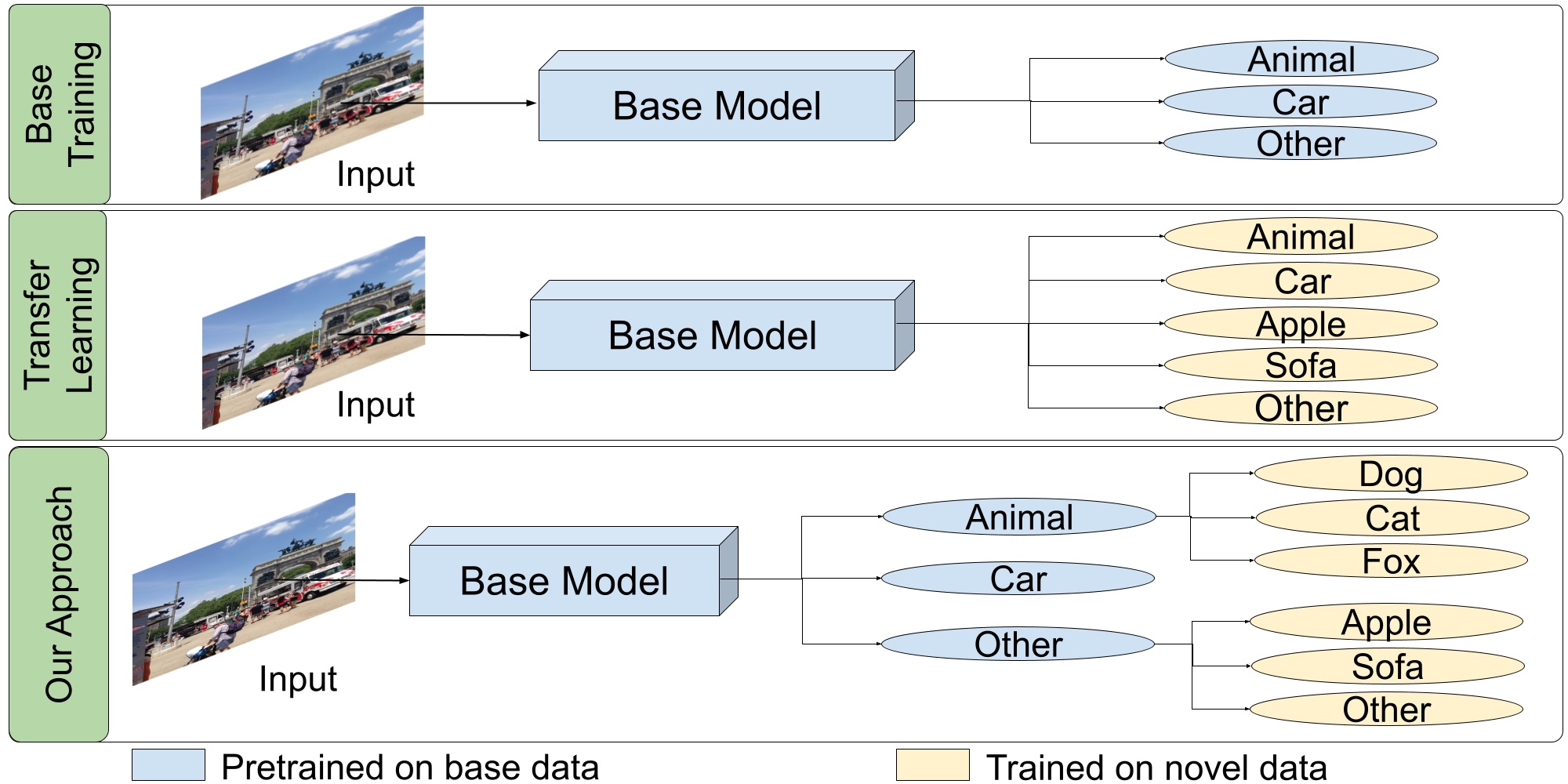

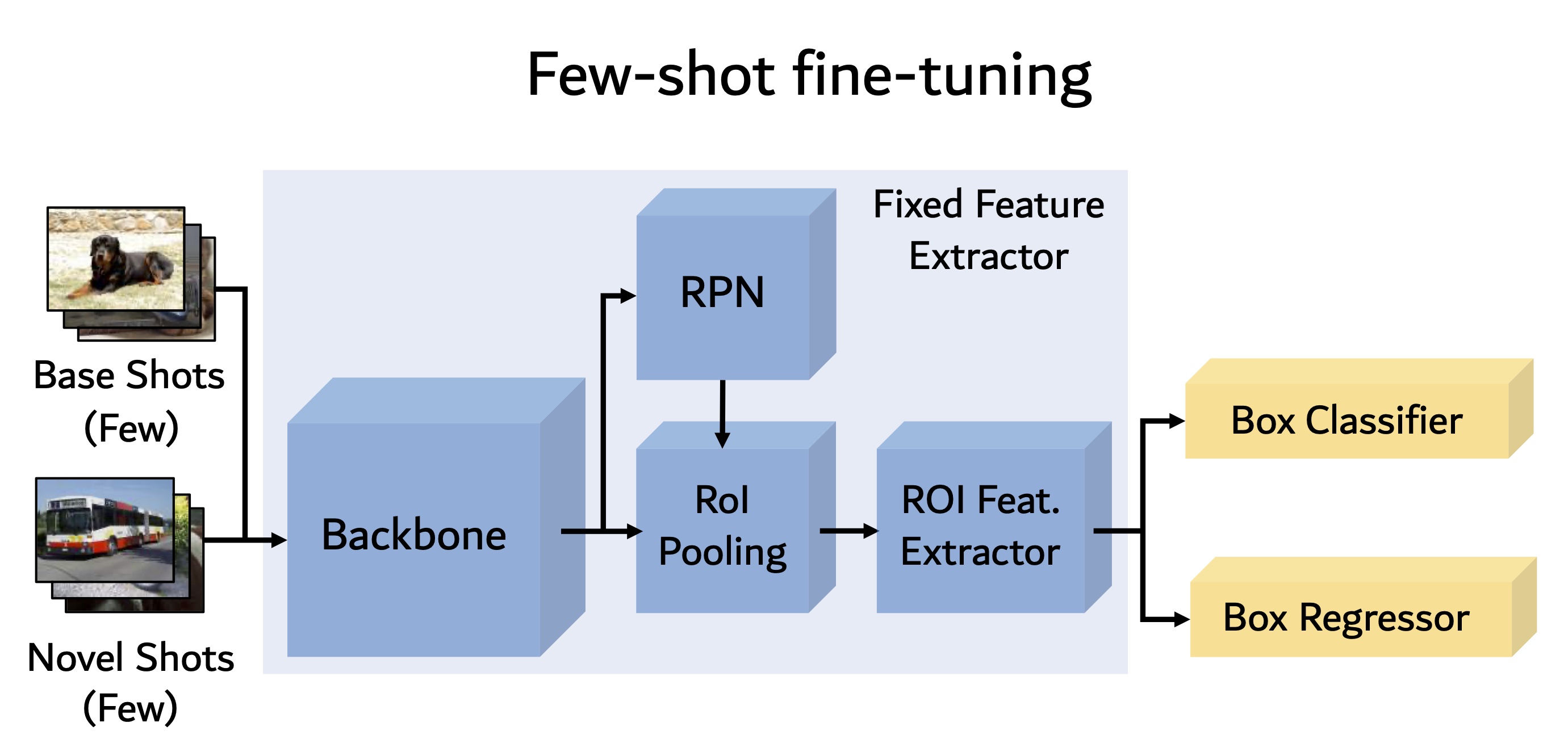

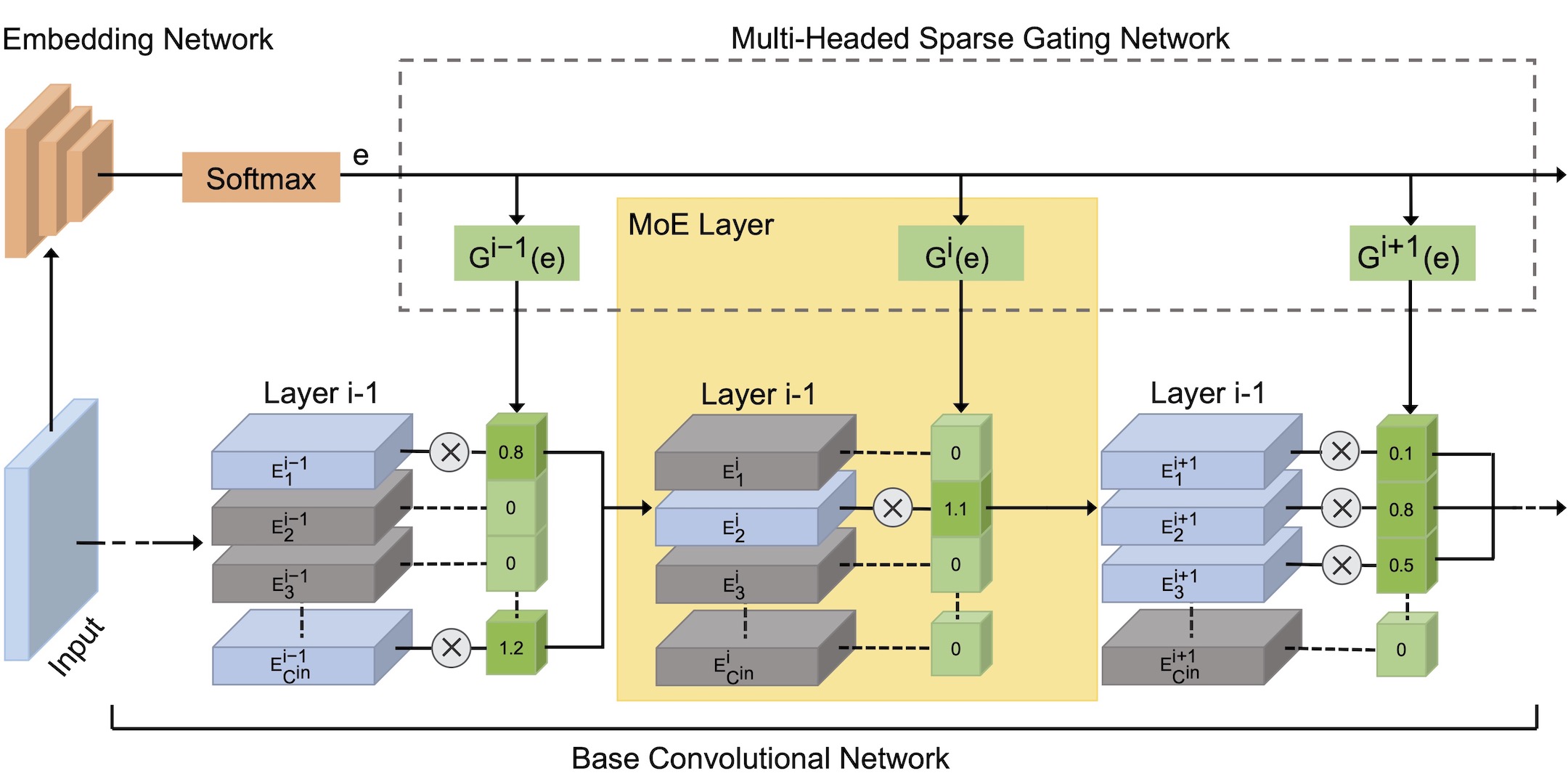

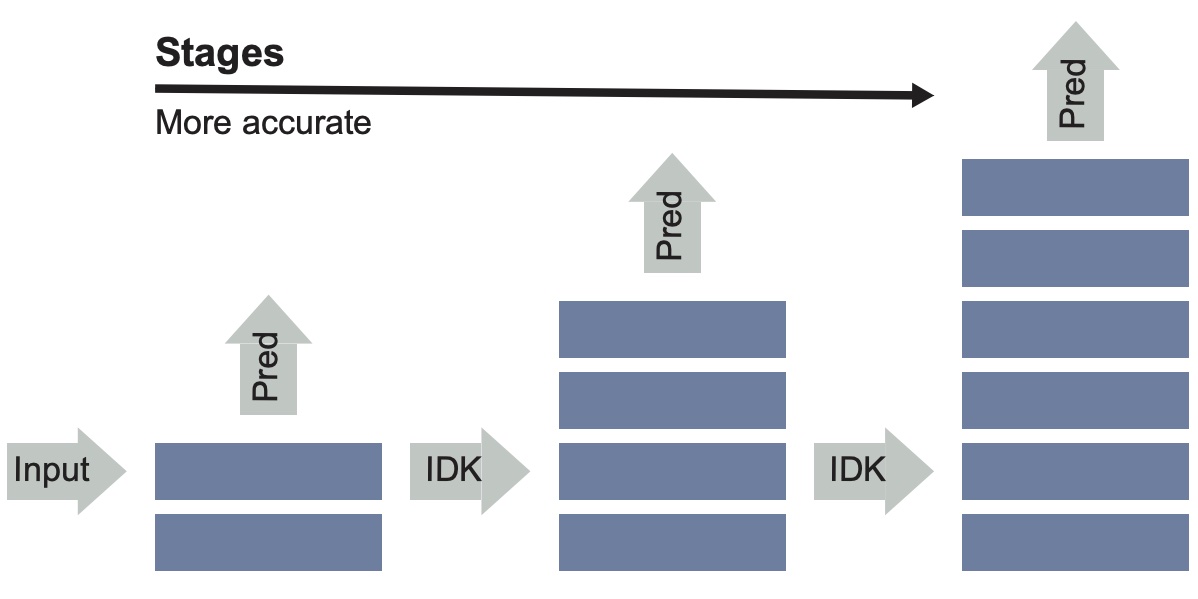

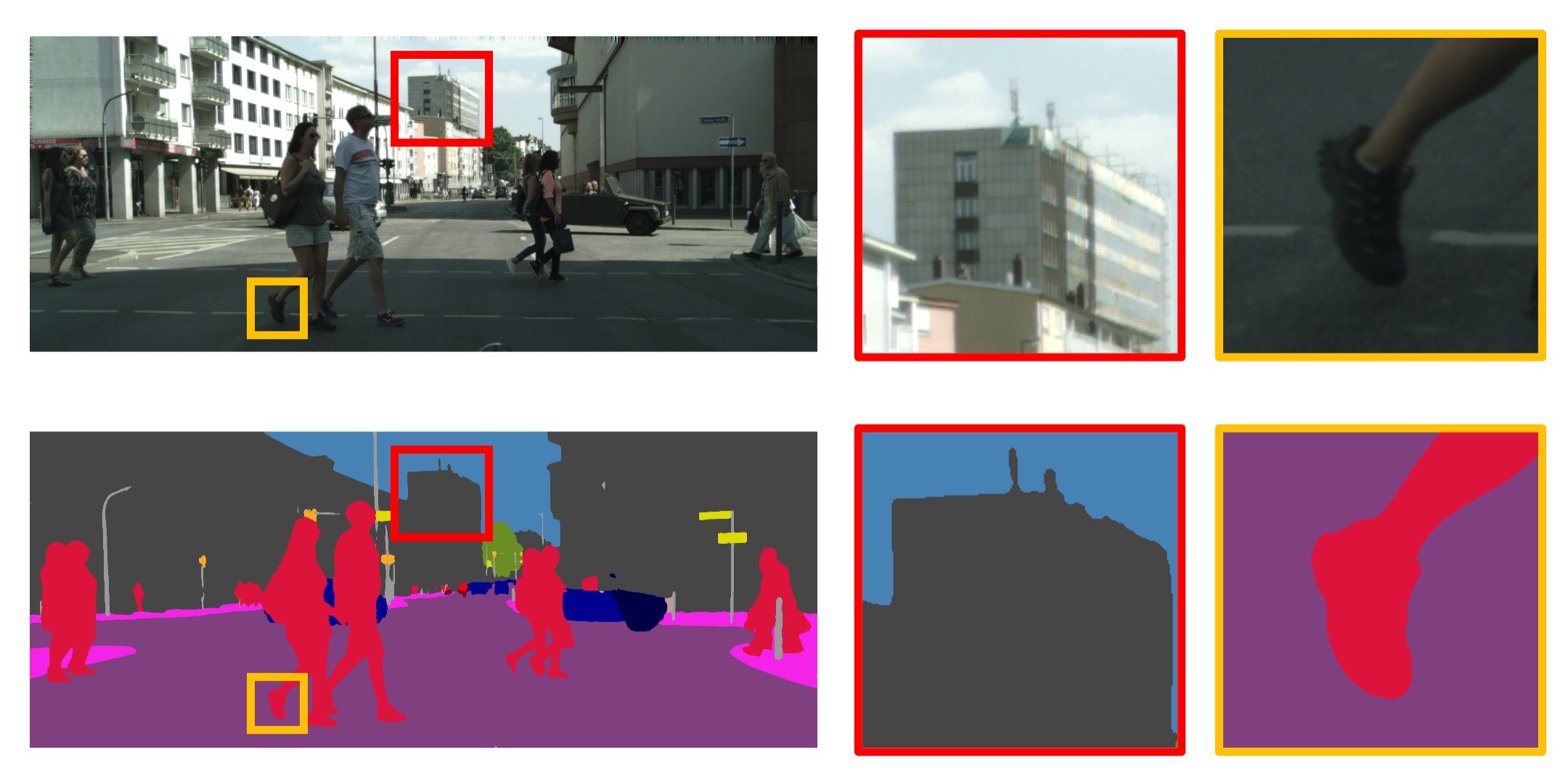

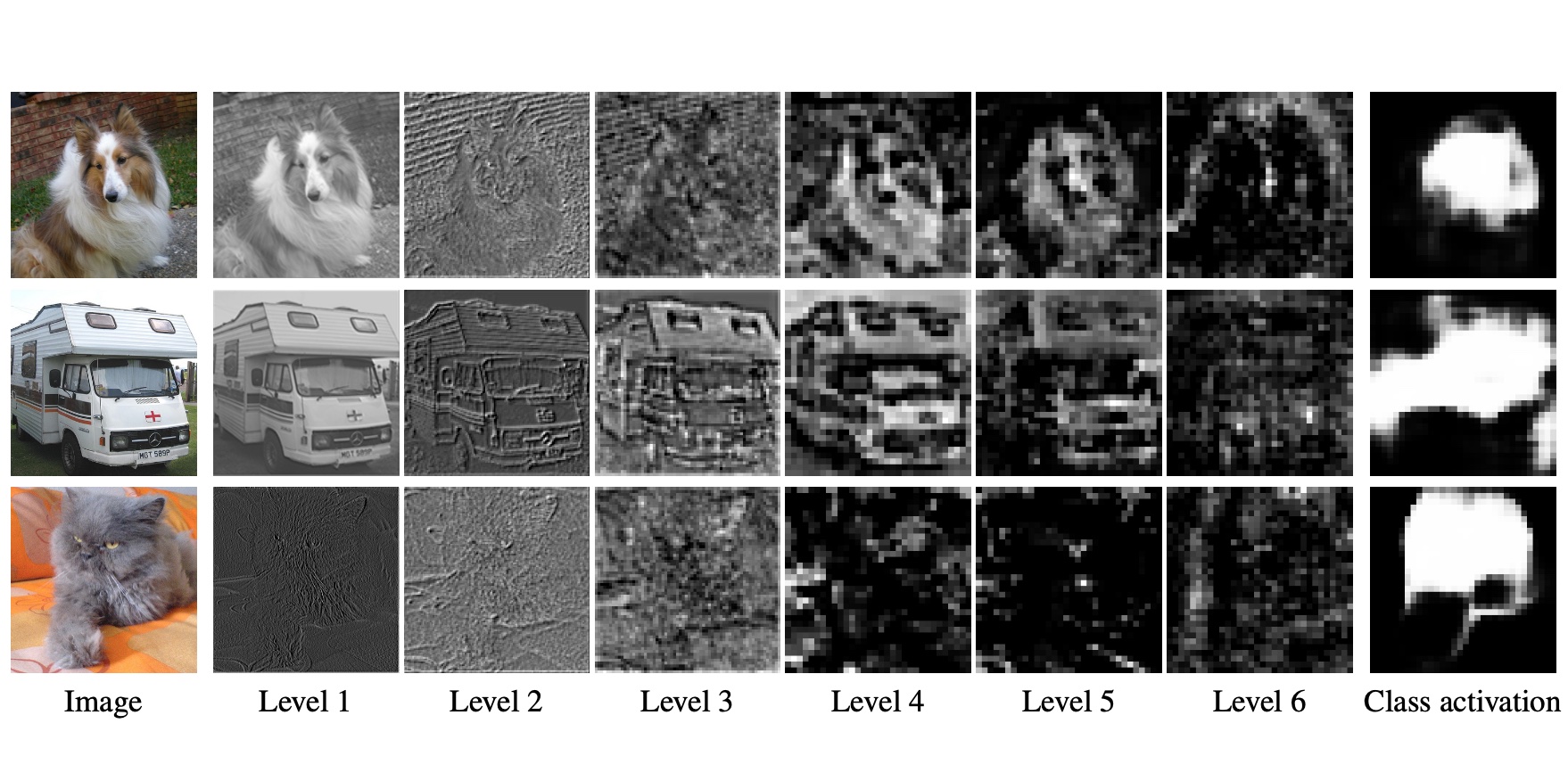

Learning good feature embeddings for images often requires substantial training data. As a consequence, in settings where training data is limited (e.g., few-shot and zero-shot learning), we are typically forced to use a generic feature embedding across various tasks. Ideally, we want to construct feature embeddings that are tuned for the given task. In this work, we propose Task-Aware Feature Embedding Networks (TAFE-Nets) to learn how to adapt the image representation to a new task in a meta-learning fashion. Our network is composed of a meta learner and a prediction network. Based on a task input, the meta learner generates parameters for the feature layers in the prediction network so that the feature embedding can be accurately adjusted for that task. We show that TAFE-Net is highly effective in generalizing to new tasks or concepts, and we evaluate it on a range of benchmarks in zero-shot and few-shot learning. Our model matches or exceeds the state of the art on all tasks. In particular, our approach improves the prediction accuracy of unseen attribute-object pairs by 4 to 15 points on the challenging visual attribute-object composition task.
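The split between a meta learner and a prediction network can be illustrated with a minimal sketch. It is not the authors' implementation (see the linked repository for that); it only shows the general idea of generating a feature layer's weights from a task input. The class `MetaLearner`, the function `task_aware_features`, the dimensions, and the toy inputs are all hypothetical, and the single linear weight generator is an assumption made for brevity.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def relu(vector):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in vector]


class MetaLearner:
    """Hypothetical meta learner: maps a task embedding to layer weights."""

    def __init__(self, task_dim, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        self.in_dim, self.out_dim = in_dim, out_dim
        # One linear map that emits all in_dim * out_dim weights at once.
        self.proj = [[rng.gauss(0.0, 0.1) for _ in range(task_dim)]
                     for _ in range(in_dim * out_dim)]

    def generate(self, task_embedding):
        flat = matvec(self.proj, task_embedding)
        # Reshape the flat vector into an out_dim x in_dim weight matrix.
        return [flat[r * self.in_dim:(r + 1) * self.in_dim]
                for r in range(self.out_dim)]


def task_aware_features(image_features, layer_weights):
    """Feature layer of the prediction network, using generated weights."""
    return relu(matvec(layer_weights, image_features))


meta = MetaLearner(task_dim=3, in_dim=4, out_dim=2)
image = [0.5, -1.0, 2.0, 0.25]   # stand-in for generic image features
task_a = [1.0, 0.0, 0.0]         # stand-in task descriptions
task_b = [0.0, 1.0, 0.0]

# The same image is embedded differently depending on the task input.
feat_a = task_aware_features(image, meta.generate(task_a))
feat_b = task_aware_features(image, meta.generate(task_b))
print(feat_a, feat_b)
```

In this sketch the prediction network holds no weights of its own for the feature layer; everything task-specific comes from `meta.generate`, so a new task only requires a new task embedding rather than retraining the layer.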

Paper

Xin Wang, Fisher Yu, Ruth Wang, Trevor Darrell, Joseph E. Gonzalez. TAFE-Net: Task-Aware Feature Embeddings for Low Shot Learning. CVPR 2019.

Code

github.com/ucbdrive/tafe-net

Citation

@inproceedings{wang2019tafe,
  title={TAFE-Net: Task-Aware Feature Embeddings for Low Shot Learning},
  author={Wang, Xin and Yu, Fisher and Wang, Ruth and Darrell, Trevor and Gonzalez, Joseph E},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={1831--1840},
  year={2019}
}