Abstract

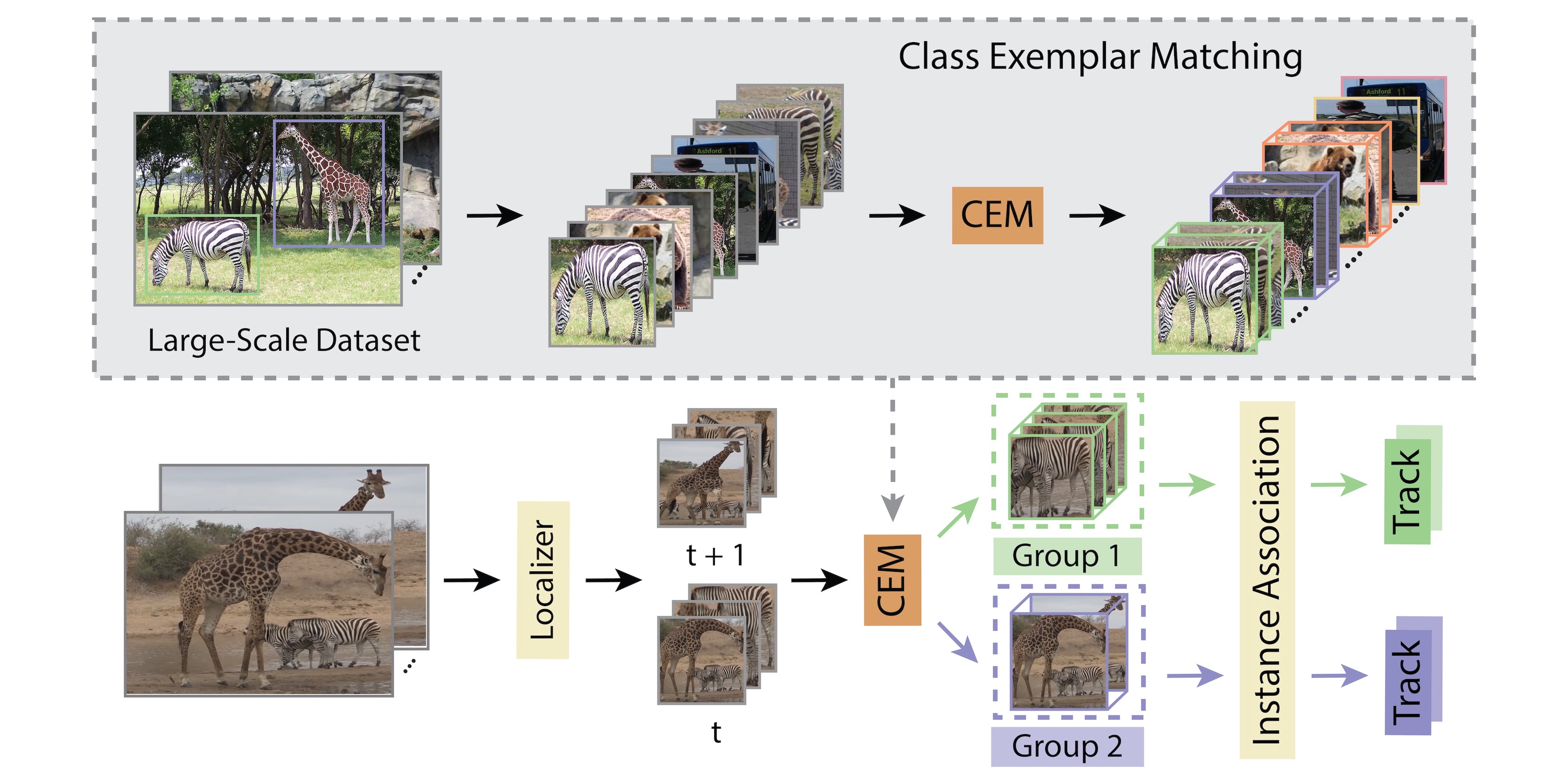

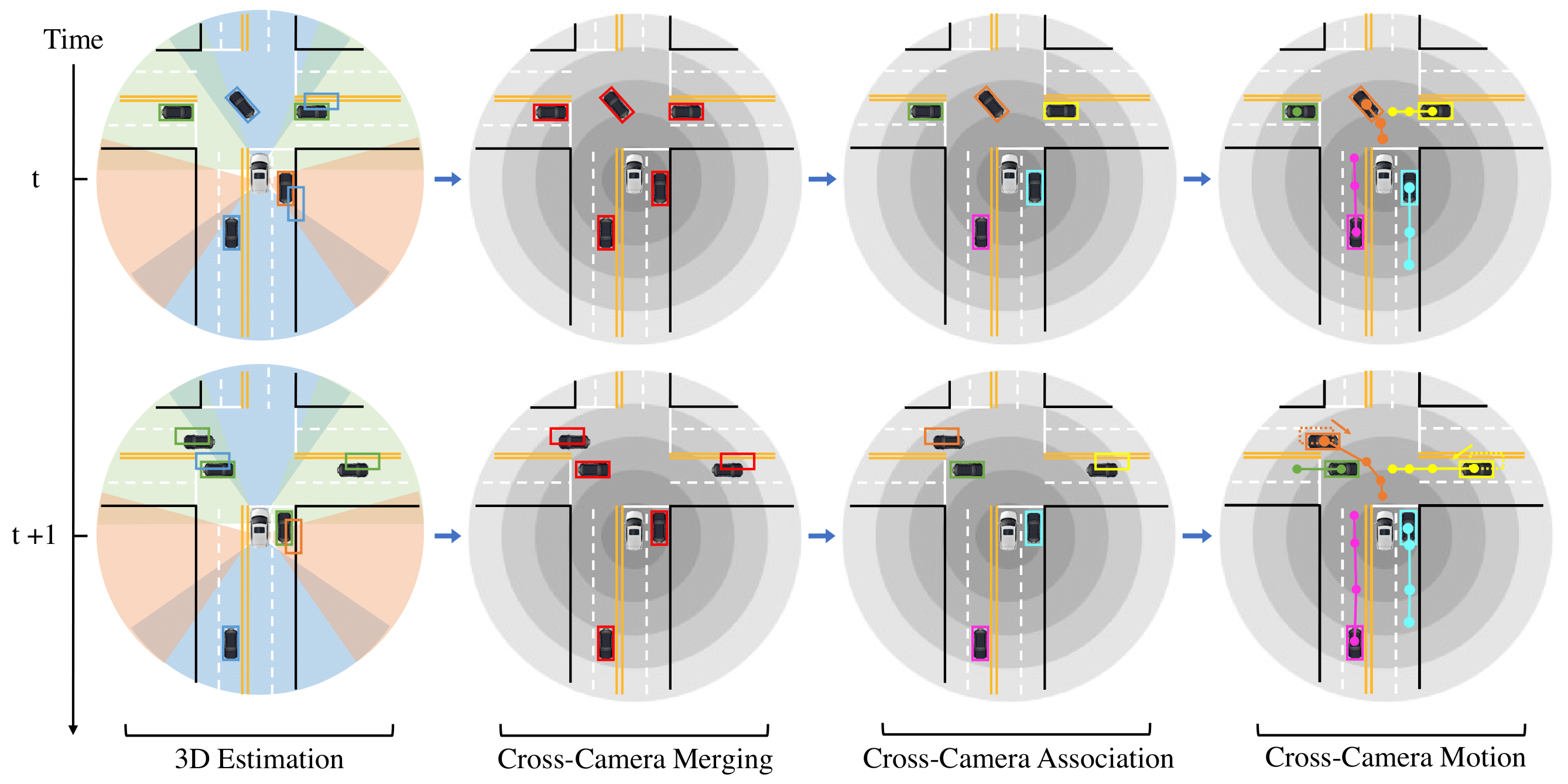

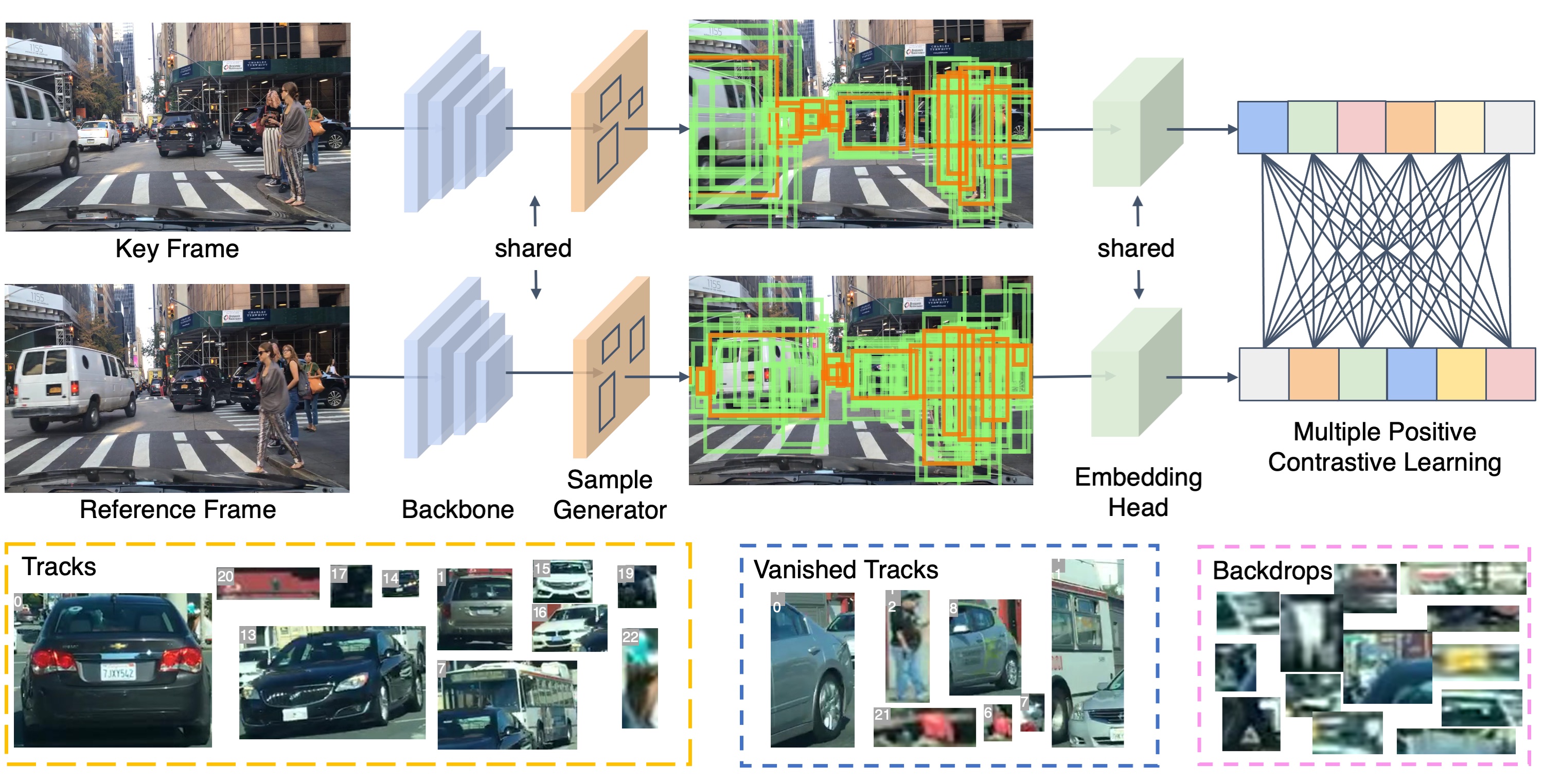

Current multi-category Multiple Object Tracking (MOT) metrics use class labels to group tracking results for per-class evaluation. Similarly, MOT methods typically associate only objects that share the same class prediction. These two prevalent strategies implicitly assume that classification performance is near-perfect. However, this is far from the case in recent large-scale MOT datasets, which contain large numbers of classes with many rare or semantically similar categories. The resulting inaccurate classification leads to sub-optimal tracking and inadequate benchmarking of trackers. We address these issues by disentangling classification from tracking. We introduce a new metric, Track Every Thing Accuracy (TETA), which breaks tracking measurement into three sub-factors: localization, association, and classification. This allows comprehensive benchmarking of tracking performance even under inaccurate classification. TETA also deals with the challenging incomplete annotation problem in large-scale tracking datasets. We further introduce a Track Every Thing tracker (TETer) that performs association using Class Exemplar Matching (CEM). Our experiments show that TETA evaluates trackers more comprehensively, and that TETer achieves significant improvements over the state of the art on the challenging large-scale datasets BDD100K and TAO.
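To make the problem with class-gated association concrete, here is a toy sketch. It is not CEM and not the TETer implementation (the abstract does not specify those details); it is a generic greedy IoU matcher with a hypothetical `same_class_only` switch, and all names, boxes, and thresholds are illustrative assumptions. It shows how a single misclassified detection breaks a track when association is restricted to identical class predictions.

```python
# Toy illustration only: greedy IoU association with an optional class gate.
# This is NOT Class Exemplar Matching; names and values are hypothetical.

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, same_class_only, iou_thr=0.5):
    """Greedily match tracks to detections by IoU.

    tracks: dict mapping track id -> (box, class label)
    detections: list of (box, class label)
    Returns a dict mapping track id -> detection index.
    """
    candidates = []
    for t_id, (t_box, t_cls) in tracks.items():
        for d_idx, (d_box, d_cls) in enumerate(detections):
            if same_class_only and t_cls != d_cls:
                continue  # class gate: skip pairs with different labels
            score = iou(t_box, d_box)
            if score >= iou_thr:
                candidates.append((score, t_id, d_idx))

    # Highest-overlap pairs are assigned first; each side is used once.
    candidates.sort(reverse=True)
    matches, used = {}, set()
    for _, t_id, d_idx in candidates:
        if t_id in matches or d_idx in used:
            continue
        matches[t_id] = d_idx
        used.add(d_idx)
    return matches


# Track 1 is a car; in the next frame the detector labels it "truck".
tracks = {
    1: ((10, 10, 50, 50), "car"),
    2: ((100, 100, 140, 160), "pedestrian"),
}
detections = [
    ((12, 11, 52, 51), "truck"),         # same object as track 1, wrong label
    ((101, 102, 141, 161), "pedestrian"),
]

gated = associate(tracks, detections, same_class_only=True)
agnostic = associate(tracks, detections, same_class_only=False)
print("class-gated matches:   ", gated)
print("class-agnostic matches:", agnostic)
```

With the class gate on, track 1 finds no match even though its box overlaps the detection almost perfectly; with the gate off, both tracks are continued. This is the kind of failure that motivates separating classification from association.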

Results

BDD100K val set

| Method | Backbone | Pretrain | mMOTA | mIDF1 | TETA | LocA | AssocA | ClsA |
|---|---|---|---|---|---|---|---|---|
| QDTrack (CVPR21) | ResNet-50 | ImageNet-1K | 36.6 | 51.6 | 47.8 | 45.9 | 48.5 | 49.2 |
| TETer (Ours) | ResNet-50 | ImageNet-1K | 39.1 | 53.3 | 50.8 | 47.2 | 52.9 | 52.4 |

BDD100K test set

| Method | Backbone | Pretrain | mMOTA | mIDF1 | TETA | LocA | AssocA | ClsA |
|---|---|---|---|---|---|---|---|---|
| QDTrack (CVPR21) | ResNet-50 | ImageNet-1K | 35.7 | 52.3 | 49.2 | 47.2 | 50.9 | 49.2 |
| TETer (Ours) | ResNet-50 | ImageNet-1K | 37.4 | 53.3 | 50.8 | 47.0 | 53.6 | 50.7 |

TAO val set

| Method | Backbone | Pretrain | TETA | LocA | AssocA | ClsA |
|---|---|---|---|---|---|---|
| QDTrack (CVPR21) | ResNet-101 | ImageNet-1K | 30.0 | 50.5 | 27.4 | 12.1 |
| TETer (Ours) | ResNet-101 | ImageNet-1K | 33.3 | 51.6 | 35.0 | 13.2 |
| TETer-HTC (Ours) | ResNeXt-101-64x4d | ImageNet-1K | 36.9 | 57.5 | 37.5 | 15.7 |
| TETer-SwinT (Ours) | SwinT | ImageNet-1K | 34.6 | 52.1 | 36.7 | 15.0 |
| TETer-SwinS (Ours) | SwinS | ImageNet-1K | 36.7 | 54.2 | 38.4 | 17.4 |
| TETer-SwinB (Ours) | SwinB | ImageNet-22K | 38.8 | 55.6 | 40.1 | 20.8 |
| TETer-SwinL (Ours) | SwinL | ImageNet-22K | 40.1 | 56.3 | 39.9 | 24.1 |
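As a reading aid for the TAO val table, the sketch below recombines the three sub-scores into TETA. It assumes TETA is the unweighted mean of LocA, AssocA, and ClsA, which matches the TAO val rows up to rounding. The function name `teta` and the dictionary layout are illustrative choices, and the sub-scores themselves come from the evaluation code, which is not reproduced here.

```python
# Sketch: recombine reported sub-scores into TETA, assuming
# TETA = (LocA + AssocA + ClsA) / 3. Values are copied from the TAO val table.

def teta(loc_a, assoc_a, cls_a):
    """Unweighted mean of localization, association, and classification scores."""
    return (loc_a + assoc_a + cls_a) / 3.0


# method -> (LocA, AssocA, ClsA, reported TETA)
tao_val = {
    "QDTrack": (50.5, 27.4, 12.1, 30.0),
    "TETer": (51.6, 35.0, 13.2, 33.3),
    "TETer-HTC": (57.5, 37.5, 15.7, 36.9),
    "TETer-SwinT": (52.1, 36.7, 15.0, 34.6),
    "TETer-SwinS": (54.2, 38.4, 17.4, 36.7),
    "TETer-SwinB": (55.6, 40.1, 20.8, 38.8),
    "TETer-SwinL": (56.3, 39.9, 24.1, 40.1),
}

for name, (loc_a, assoc_a, cls_a, reported) in tao_val.items():
    computed = teta(loc_a, assoc_a, cls_a)
    print(f"{name}: computed {computed:.2f}, reported {reported}")
```

Because the table reports each score to one decimal place, small differences between the computed and reported values are expected. The decomposition also makes the trade-offs visible: on TAO, ClsA is far lower than LocA and AssocA for every method, so the combined score is pulled down mainly by classification.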

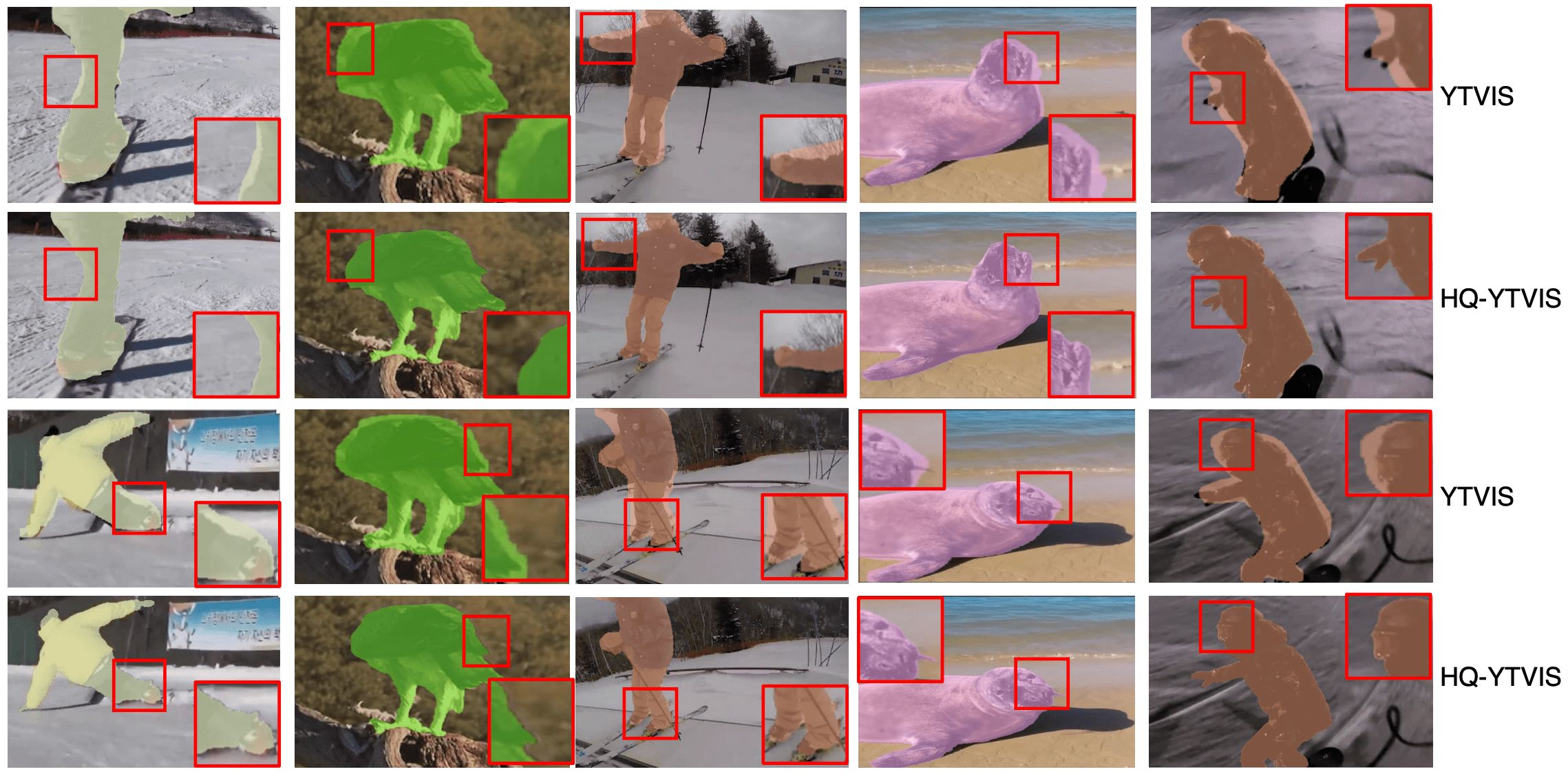

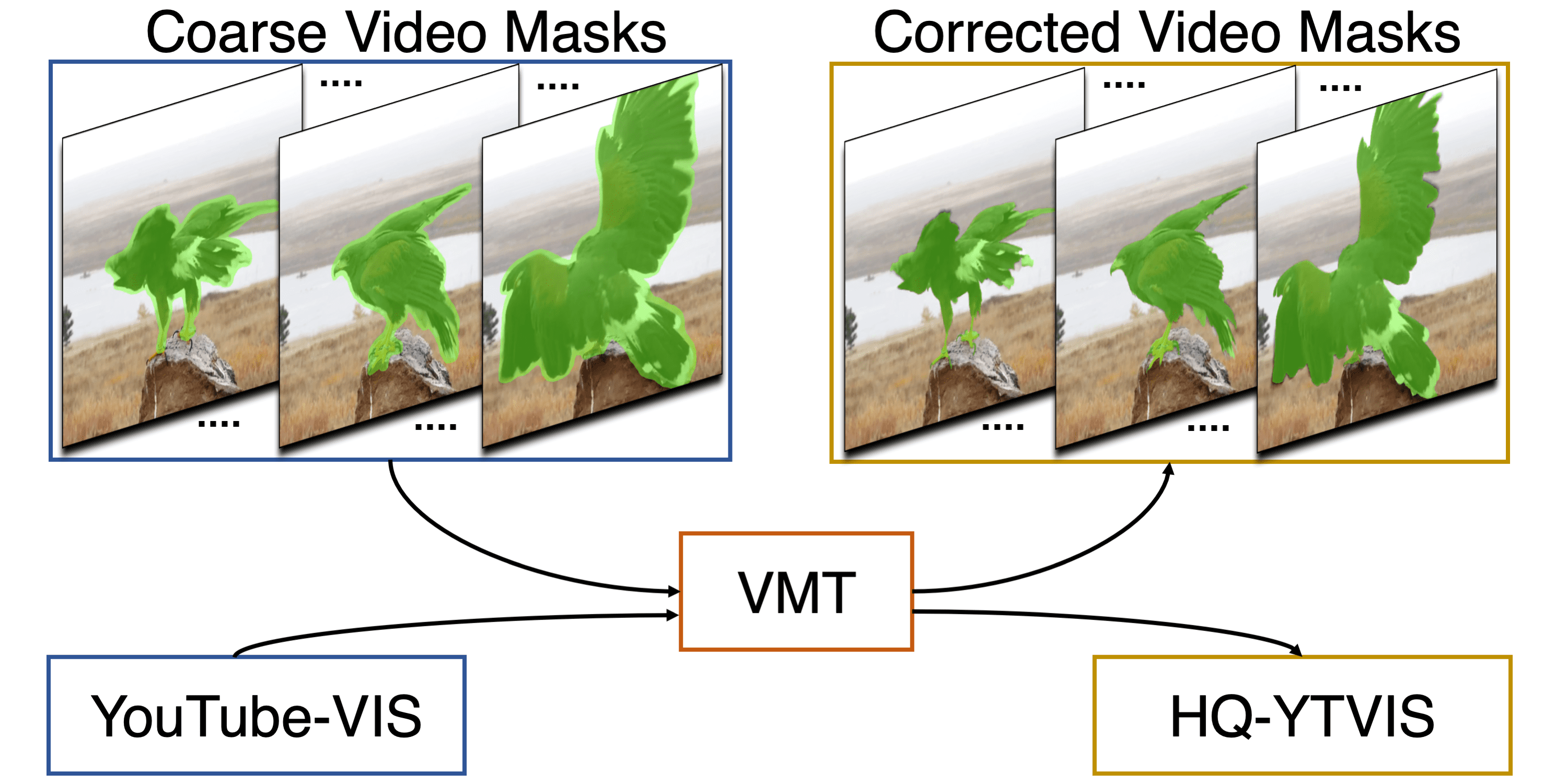

Visualization on BDD100K and TAO

Fun results on random YouTube videos

Paper

Siyuan Li, Martin Danelljan, Henghui Ding, Thomas E. Huang, Fisher Yu. Tracking Every Thing in the Wild. ECCV 2022.

Code

github.com/SysCV/tet

Citation

@inproceedings{tet,
  title = {Tracking Every Thing in the Wild},
  author = {Li, Siyuan and Danelljan, Martin and Ding, Henghui and Huang, Thomas E. and Yu, Fisher},
  booktitle = {European Conference on Computer Vision (ECCV)},
  year = {2022}
}