Abstract

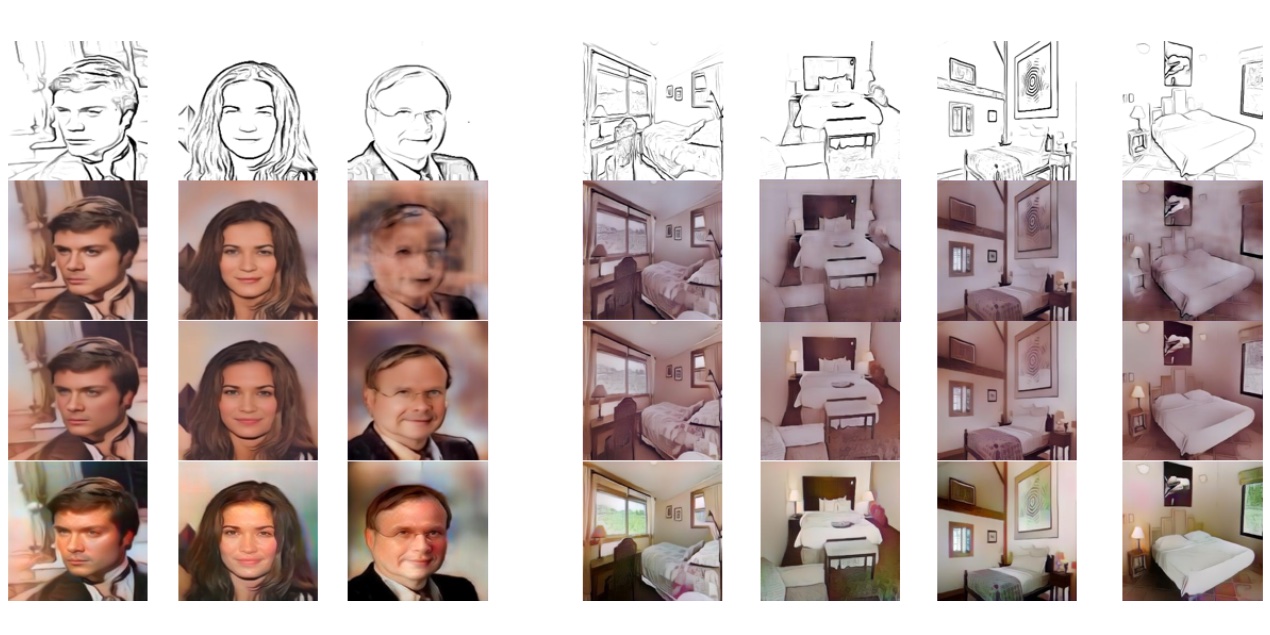

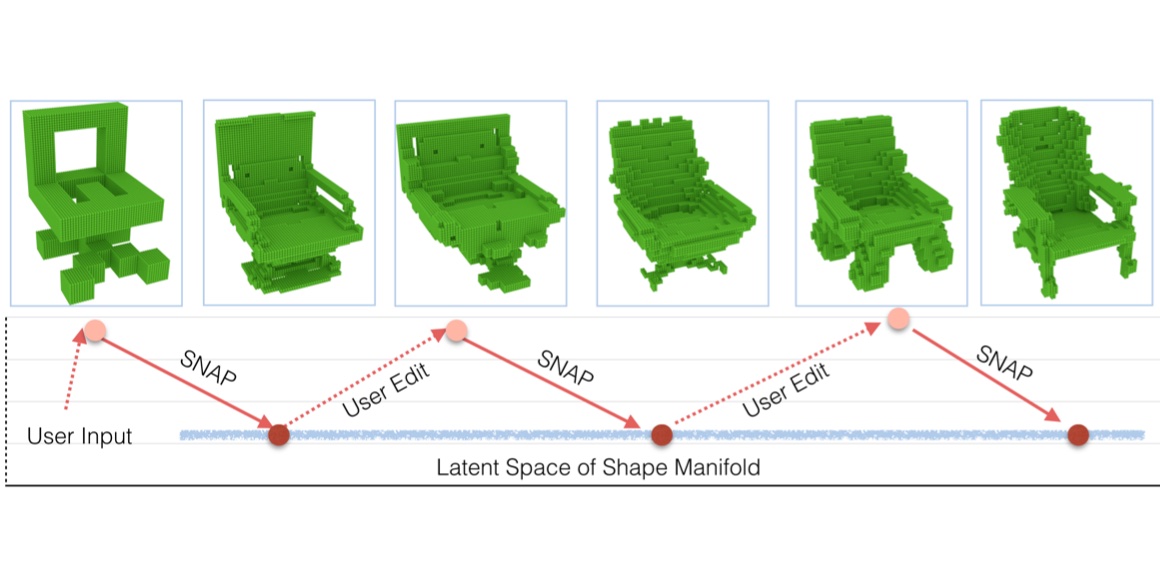

In this paper, we investigate deep image synthesis guided by sketch, color, and texture. Previous image synthesis methods can be controlled by sketch and color strokes, but we are the first to examine texture control. We allow a user to place a texture patch on a sketch at arbitrary locations and scales to control the desired output texture. Our generative network learns to synthesize objects consistent with these texture suggestions. To achieve this, we develop a local texture loss, in addition to adversarial and content losses, to train the generative network. We conduct experiments using sketches generated from real images and textures sampled from a separate texture database. The results show that our proposed algorithm generates plausible images that are faithful to user controls. Ablation studies show that our proposed pipeline generates more realistic images than direct adaptations of existing methods.
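To make the idea of a local texture loss more concrete, the following is a minimal sketch and not the implementation from the paper. The abstract only names the loss, so the form used here is an assumption: Gram-matrix statistics compared over randomly sampled patches, with simple image gradients standing in for learned network features. All function names, parameters, and loss weights (`local_texture_loss`, `total_loss`, `patch_size`, `w_texture`, and so on) are hypothetical.

```python
import random


def crop(image, top, left, size):
    """Return a size x size patch from a 2D grayscale image (list of rows)."""
    return [row[left:left + size] for row in image[top:top + size]]


def features(patch):
    """Stand-in features (assumption): horizontal and vertical differences.

    A real system would more likely use activations of a trained network.
    """
    h, w = len(patch), len(patch[0])
    dx = [patch[y][x + 1] - patch[y][x] for y in range(h - 1) for x in range(w - 1)]
    dy = [patch[y + 1][x] - patch[y][x] for y in range(h - 1) for x in range(w - 1)]
    return [dx, dy]


def gram(channels):
    """Gram matrix of flattened feature channels, normalised by channel length."""
    n = len(channels[0])
    return [[sum(a * b for a, b in zip(ci, cj)) / n for cj in channels]
            for ci in channels]


def local_texture_loss(output, texture, patch_size=8, n_patches=4, seed=0):
    """Mean squared Gram-matrix distance between random patches of two images."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_patches):
        grams = []
        for img in (output, texture):
            # Sample an independent patch location in each image.
            top = rng.randrange(len(img) - patch_size + 1)
            left = rng.randrange(len(img[0]) - patch_size + 1)
            grams.append(gram(features(crop(img, top, left, patch_size))))
        total += sum((a - b) ** 2
                     for row_a, row_b in zip(grams[0], grams[1])
                     for a, b in zip(row_a, row_b))
    return total / n_patches


def total_loss(adv, content, texture, w_adv=1.0, w_content=1.0, w_texture=1.0):
    """Weighted sum of the three loss terms; the weights are placeholders."""
    return w_adv * adv + w_content * content + w_texture * texture


# Toy 16 x 16 images: vertical stripes, horizontal stripes, and a flat image.
stripes_v = [[x % 2 for x in range(16)] for _ in range(16)]
stripes_h = [[y % 2 for _ in range(16)] for y in range(16)]
flat = [[0] * 16 for _ in range(16)]
```

On these toy images, `local_texture_loss(stripes_v, stripes_v)` is zero even though the patches are sampled at different positions, because Gram statistics discard spatial layout. Comparing `stripes_v` with `stripes_h` or `flat` gives a positive value, which is the behavior one would want from a term that rewards matching texture rather than matching pixels.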

Paper

Wenqi Xian, Patsorn Sangkloy, Varun Agrawal, Amit Raj, Jingwan Lu, Chen Fang, Fisher Yu, James Hays. TextureGAN: Controlling Deep Image Synthesis with Texture Patches. CVPR 2018 (Spotlight).

Code

github.com/janesjanes/Pytorch-TextureGAN

Citation

@inproceedings{xian2018texturegan,
  title={{TextureGAN}: Controlling deep image synthesis with texture patches},
  author={Xian, Wenqi and Sangkloy, Patsorn and Agrawal, Varun and Raj, Amit and Lu, Jingwan and Fang, Chen and Yu, Fisher and Hays, James},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={8456--8465},
  year={2018}
}