Abstract

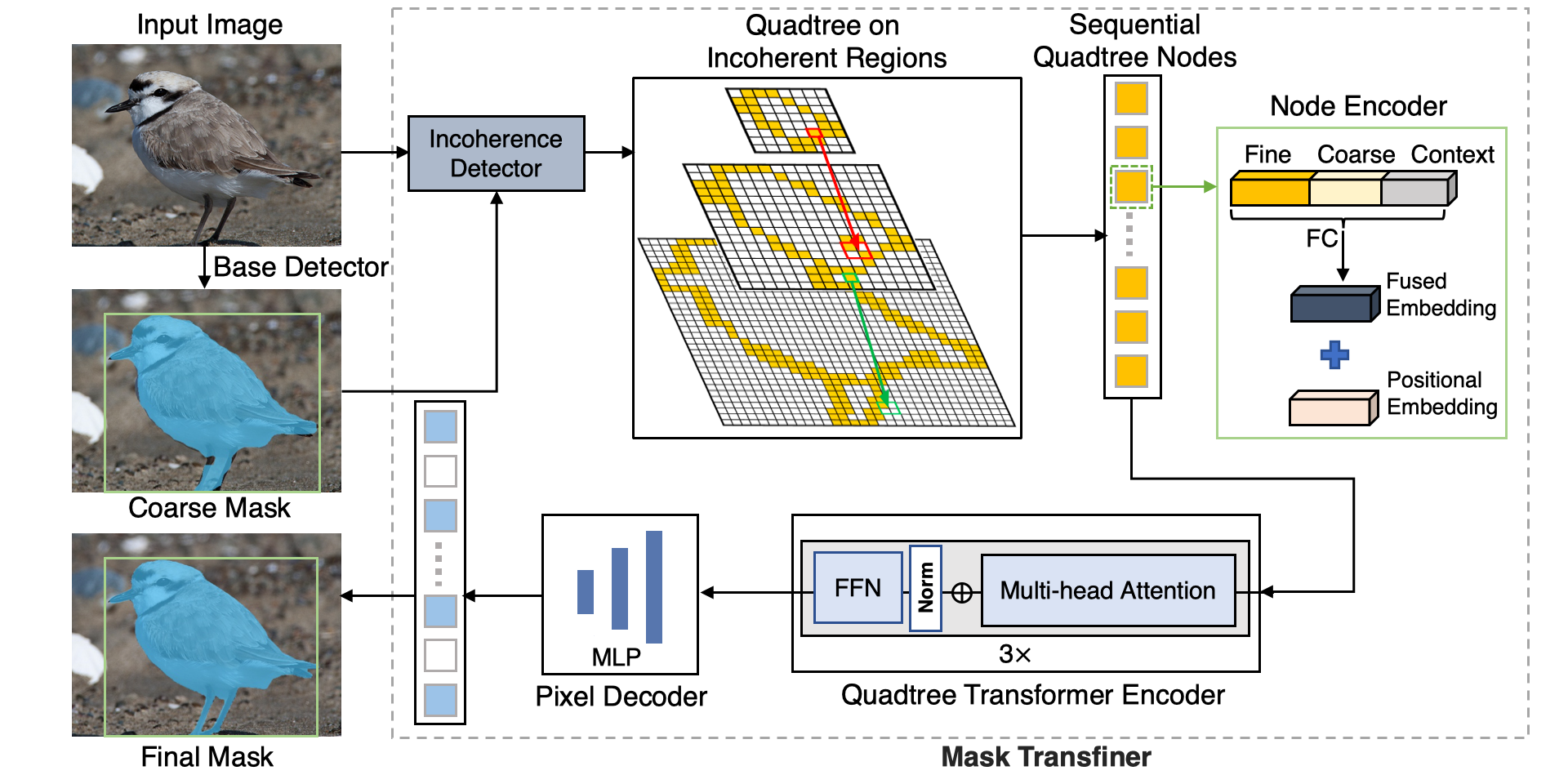

Two-stage and query-based instance segmentation methods have achieved remarkable results. However, their segmented masks are still very coarse. In this paper, we present Mask Transfiner for high-quality and efficient instance segmentation. Instead of operating on regular dense tensors, Mask Transfiner decomposes and represents the image regions as a quadtree. Our transformer-based approach processes only the detected error-prone tree nodes and self-corrects their errors in parallel. Although these sparse pixels constitute only a small proportion of the total, they are critical to the final mask quality. This allows Mask Transfiner to predict highly accurate instance masks at a low computational cost. Extensive experiments demonstrate that Mask Transfiner outperforms current instance segmentation methods on three popular benchmarks, improving both two-stage and query-based frameworks by +3.0 mask AP on COCO and BDD100K, and +6.6 boundary AP on Cityscapes.
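
To make the quadtree idea concrete, the following is a minimal sketch and not the released implementation. It assumes, purely for illustration, that a tree node counts as "error-prone" when its cell contains both foreground and background values in a binary mask; the actual model uses a learned detector for such nodes. The function names `is_mixed` and `build_sparse_quadtree` and the parameter `coarse_size` are hypothetical. The point it shows is that only children of flagged nodes are visited, so the pixels handed to the refinement step form a sparse subset of the grid.

```python
def is_mixed(mask, r, c, size):
    """Return True if the size x size cell at (r, c) holds both 0 and 1.

    Assumption: "mixed" stands in for the error-prone test; it is not the
    learned detector used by the real model.
    """
    values = {mask[i][j] for i in range(r, r + size) for j in range(c, c + size)}
    return len(values) > 1


def build_sparse_quadtree(mask, coarse_size):
    """Flag mixed cells from coarse to fine, subdividing only flagged parents.

    mask: square list of lists with 0/1 entries.
    coarse_size: cell side at the coarsest level (a power of two >= 2 that
                 divides the mask side).
    Returns (tree, pixels): tree[k] lists flagged cells (row, col, size) at
    level k; pixels lists the (row, col) leaves selected for refinement.
    """
    side = len(mask)
    # Coarsest level: every cell of the regular grid is a candidate.
    frontier = [(r, c)
                for r in range(0, side, coarse_size)
                for c in range(0, side, coarse_size)]
    size = coarse_size
    tree = []
    while size > 1:
        flagged = [(r, c, size) for r, c in frontier if is_mixed(mask, r, c, size)]
        tree.append(flagged)
        size //= 2
        # Only the four children of each flagged cell are visited next.
        frontier = [(r + dr, c + dc)
                    for r, c, _ in flagged
                    for dr in (0, size)
                    for dc in (0, size)]
    # size is now 1, so the frontier holds single pixels.
    return tree, frontier


# Toy input: an 8 x 8 mask with a 4 x 4 square of ones at rows/cols 1..4.
mask = [[1 if 1 <= r <= 4 and 1 <= c <= 4 else 0 for c in range(8)]
        for r in range(8)]
tree, pixels = build_sparse_quadtree(mask, coarse_size=4)
print([len(level) for level in tree], len(pixels))
```

On this toy mask the uniform 2 x 2 cell inside the square is never subdivided, so its pixels are left out of the refinement set; a transformer-based refiner would then operate only on the returned `pixels`.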

Result visualization

With and without Mask Transfiner

Comparison with PointRend

Comparison with Mask R-CNN on BDD100K

Try the Model!

You can try our model on Hugging Face at https://huggingface.co/spaces/lkeab/transfiner.

Paper

Lei Ke, Martin Danelljan, Xia Li, Yu-Wing Tai, Chi-Keung Tang, Fisher Yu. Mask Transfiner for High-Quality Instance Segmentation. CVPR 2022.

Code

github.com/SysCV/transfiner

Citation

@inproceedings{transfiner,
  author = {Ke, Lei and Danelljan, Martin and Li, Xia and Tai, Yu-Wing and Tang, Chi-Keung and Yu, Fisher},
  title = {Mask Transfiner for High-Quality Instance Segmentation},
  booktitle = {CVPR},
  year = {2022}
}