News

- 2023/05/05. We are hosting challenges at the 1st Workshop on Visual Continual Learning at ICCV 2023. The challenges cover multitask robustness and domain-continuous learning on the SHIFT Dataset. Join and win prizes!

- 2023/04/12. The continuous-shift data now include optical flow and detailed environmental parameters.

- 2023/03/23. We converted all 10 fps segmentation masks to zip format, so you can access them without video decompression.

- 2022/09/16. LiDAR point clouds and their annotations are now available in “center” view.

- 2022/09/14. Depth maps at 10 fps (videos) for all views are now provided for download.

- 2022/09/09. Sequence information files are added for easier sequence filtering.

- 2022/07/06. We released the data for continuous domain shifts.

- 2022/06/10. We released the data for discrete domain shifts.

- 2022/03/01. The SHIFT Dataset paper has been accepted at CVPR 2022! Check out our talk and poster.

Extended talk

What does SHIFT provide?

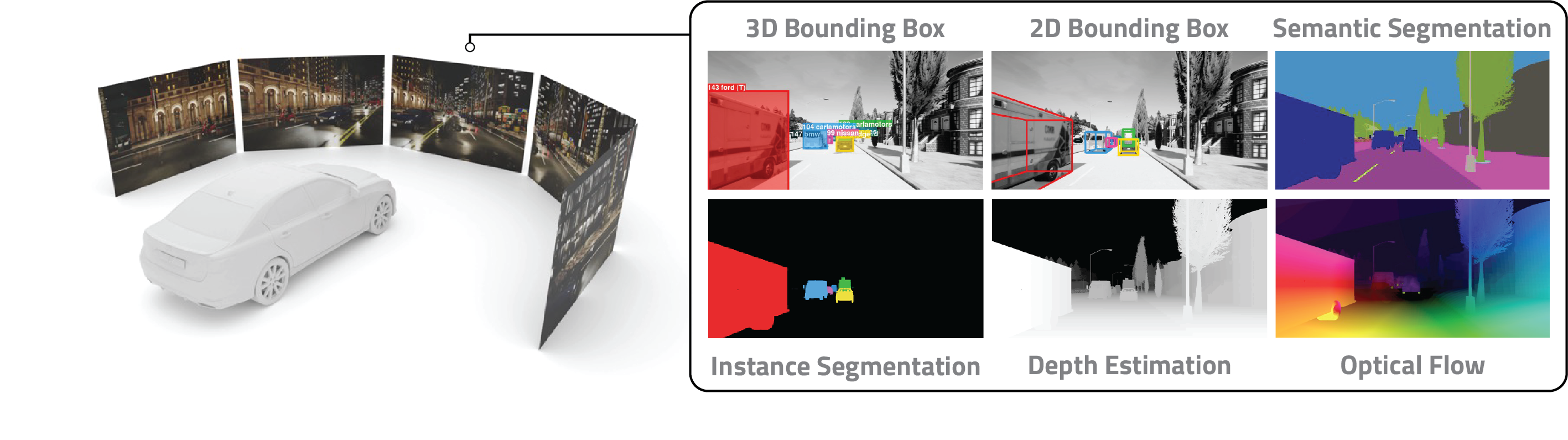

Featuring a comprehensive sensor suite and annotations, SHIFT supports a range of mainstream perception tasks. Our sensor suite features:

- Multi-View RGB camera set: Front, Front Left, Left / Right 45°, Left / Right 90°.

- Stereo RGB cameras: Pair of RGB cameras with a 50 cm horizontal gap.

- Depth camera: Dense depth camera with a depth resolution of 1 mm.

- Optical flow camera: Dense optical flow annotations.

- GNSS / IMU sensors.
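As a rough illustration of what the 50 cm stereo baseline implies, the sketch below applies the standard pinhole relation for a rectified stereo pair, depth = focal length × baseline / disparity. The focal length of 640 px is a hypothetical value chosen for the example and is not taken from the SHIFT camera specification; only the 0.5 m baseline comes from the list above.

```python
def depth_from_disparity(disparity_px: float,
                         focal_px: float,
                         baseline_m: float = 0.5) -> float:
    """Depth in metres for a rectified stereo pair under the pinhole model.

    baseline_m defaults to the 50 cm gap stated for the stereo cameras.
    focal_px is an assumption supplied by the caller.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Hypothetical focal length (640 px) and a 16 px disparity.
print(depth_from_disparity(16.0, focal_px=640.0))  # prints 20.0
```

With a fixed baseline, depth is inversely proportional to disparity, so halving the disparity doubles the estimated depth.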

Overall, SHIFT supports 12 mainstream perception tasks for autonomous driving:

| 2D object detection | 3D object detection | Semantic segmentation | Instance segmentation | 2D object tracking | 3D object tracking |
|---|---|---|---|---|---|
| Depth estimation | Optical flow estimation | Scene classification | Pose estimation | Trajectory forecasting | Point cloud registration |

Why use SHIFT?

Adapting to a continuously evolving environment is a safety-critical challenge inevitably faced by all autonomous-driving systems. However, existing image- and video-based driving datasets fall short of capturing the mutable nature of the real world. Our dataset, SHIFT, is committed to overcoming these limitations.

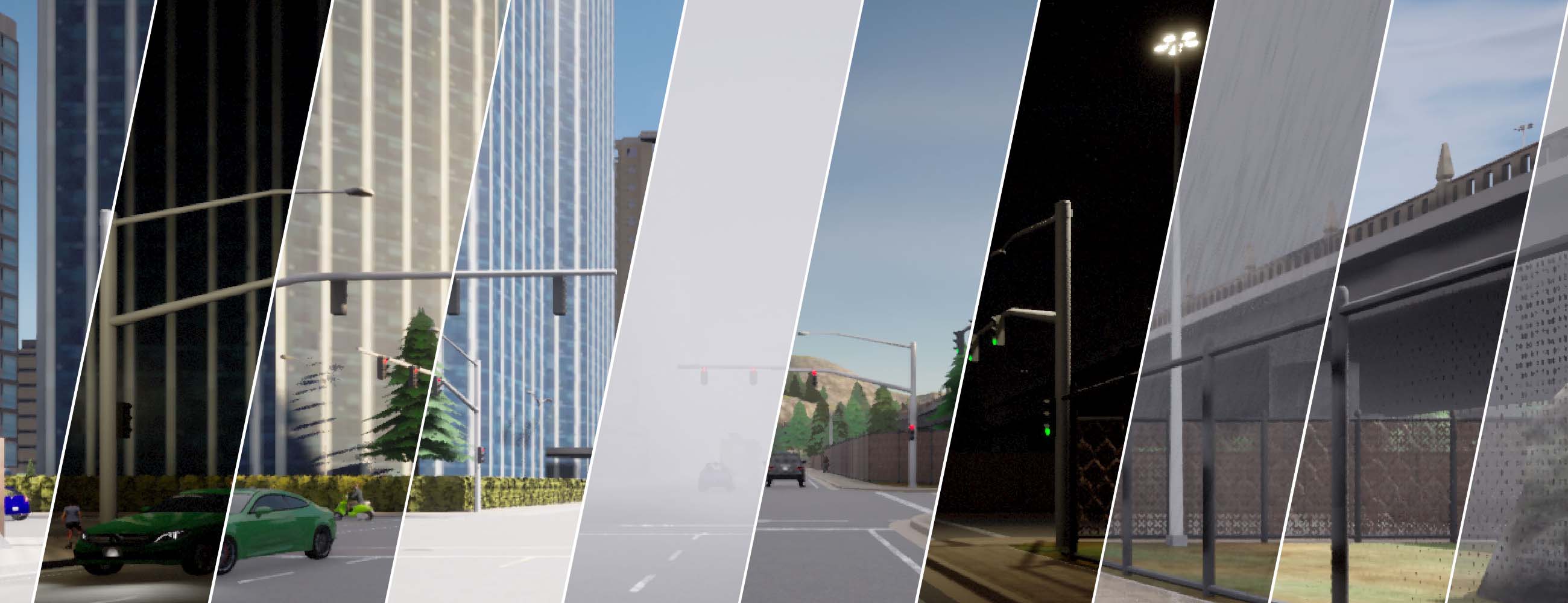

Extensive discrete domain shifts

SHIFT considers the most frequent real-world environmental changes and provides 24 types of discrete domain conditions, grouped into 4 main categories:

- Weather conditions (including cloudiness, rain, and fog intensity)

- Time of day

- Vehicle and pedestrian density

- Camera orientation
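A minimal sketch of how sequences might be selected by discrete domain condition is shown below. It assumes each sequence is described by a small record; the `SequenceInfo` class, its field names, and the label strings are all hypothetical and do not reflect the actual format of the SHIFT sequence information files.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class SequenceInfo:
    """Hypothetical per-sequence record; field names are assumptions."""
    name: str
    weather: str
    time_of_day: str


def filter_sequences(records: Iterable[SequenceInfo],
                     weather: Optional[str] = None,
                     time_of_day: Optional[str] = None) -> List[SequenceInfo]:
    """Keep records matching every condition that is not None."""
    return [
        r for r in records
        if (weather is None or r.weather == weather)
        and (time_of_day is None or r.time_of_day == time_of_day)
    ]


# Made-up example records, for illustration only.
records = [
    SequenceInfo("seq-a", weather="rainy", time_of_day="daytime"),
    SequenceInfo("seq-b", weather="foggy", time_of_day="night"),
    SequenceInfo("seq-c", weather="rainy", time_of_day="night"),
]

rainy_night = filter_sequences(records, weather="rainy", time_of_day="night")
print([r.name for r in rainy_night])  # prints ['seq-c']
```

Leaving a condition as `None` disables that filter, so the same helper can select a source domain (for example, one weather type) and a target domain with a different call.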

First driving dataset with realistic continuous domain shifts

For nearly all real-world datasets, the maximum sequence length is less than 100 seconds. Given their short length, these sequences are captured under approximately stationary conditions. By collecting video sequences under continuously shifting environmental conditions, we provide the first driving dataset that enables research on continuous test-time learning and adaptation.

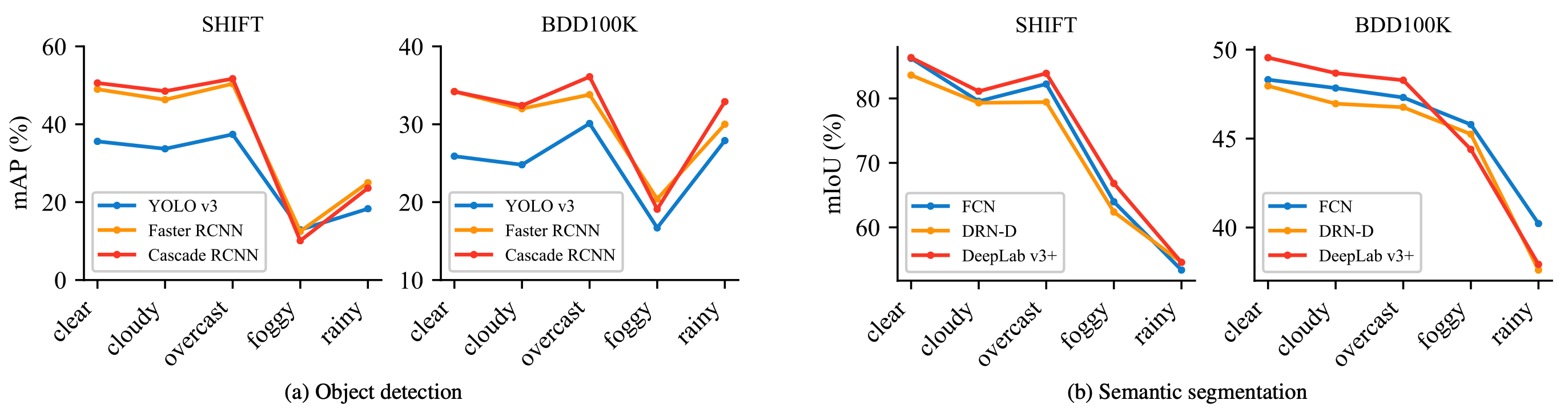

Verified consistency with real-world trends

We observe that the performance drops measured on our simulated dataset under discrete domain shifts follow the same trends as those measured on the real-world dataset BDD100K. This confirms the real-world consistency of our dataset.

Use cases of SHIFT

About

Citation

The SHIFT Dataset is made freely available to academic and non-academic entities for research purposes such as academic research, teaching, scientific publications, or personal experimentation. If you use our dataset, we kindly ask you to cite our paper as:

    @InProceedings{shift2022,
        author    = {Sun, Tao and Segu, Mattia and Postels, Janis and Wang, Yuxuan and Van Gool, Luc and Schiele, Bernt and Tombari, Federico and Yu, Fisher},
        title     = {{SHIFT:} A Synthetic Driving Dataset for Continuous Multi-Task Domain Adaptation},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        month     = {June},
        year      = {2022},
        pages     = {21371-21382}
    }

DevKit

Please check out our DevKit repo at github.com/SysCV/shift-dev. Feel free to post questions under Issues and comments under Discussions.

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.